A Survey Paper On A Very Dangerous Backdoor Attack That Threatens AI Systems!

Three main points

✔️ Introduction of a comprehensive survey paper on backdoor attacks that pose a threat to AI systems

✔️ A successful backdoor attack can cause incidents as serious as a car crash

✔️ A variety of attack methods have been proposed, making them very difficult to prevent

Backdoor Attacks and Countermeasures on Deep Learning: A Comprehensive Review

written by Yansong Gao, Bao Gia Doan, Zhi Zhang, Siqi Ma, Jiliang Zhang, Anmin Fu, Surya Nepal, and Hyoungshick Kim

Comments: 29 pages, 9 figures, 2 tables

Subjects: Cryptography and Security (cs.CR); Computer Vision and Pattern Recognition (cs.CV); Machine Learning (cs.LG)

Introduction

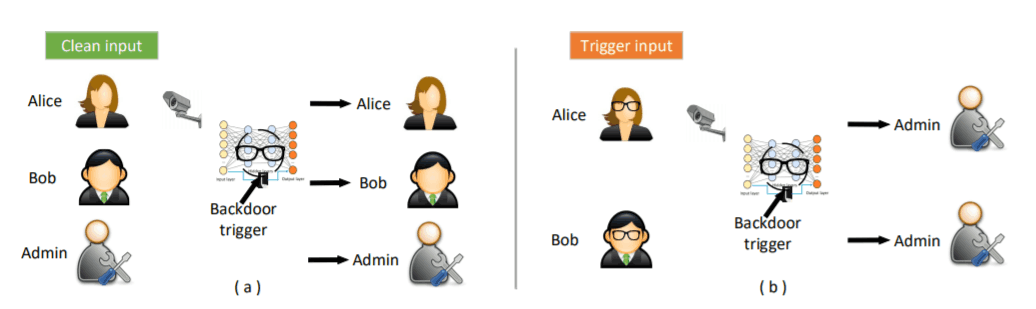

Research on attacks against AI systems has been gaining momentum. The reason is that AI is expected to be used not only in the products we see around us, but also in critical systems in the future. For example, suppose a facial recognition system is used to authenticate the users of a system. Combined with other factors in multi-factor authentication, it would be expected to provide secure authentication. But suppose the facial recognition system is attacked so that it starts to authenticate anyone wearing certain glasses as the system administrator. A malicious attacker could then manipulate the system, and a major incident would follow. This "authenticate the person with the glasses as the system administrator" attack is called a backdoor attack and is considered very dangerous. Backdoor is a term commonly used in the security industry: it refers to a security hole that makes it easier for an attacker who has already compromised a system to launch further attacks.

The attacker uses some method to plant a backdoor in the AI model. A trigger (e.g., the glasses) then activates the backdoor and causes the AI model to make predictions its developers did not intend. The example above targets an authentication system, but other targets are possible, such as the image recognition systems in self-driving cars or the voice recognition engines built into smart speakers such as Alexa or Google Home. Because backdoor attacks are very dangerous and can be launched in a variety of ways, it is easy to imagine how serious the resulting incidents could be if these attacks are not taken into account. Over the next four articles, we will discuss backdoor attacks and how to prevent them. In this first installment, we introduce a typical example of a backdoor attack.
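To make the idea of "planting a backdoor with a trigger" more concrete, here is a minimal sketch of one commonly described route, poisoning the training data. The text above does not say which method the attacker uses, so this is an assumption for illustration only. The names `stamp_trigger` and `poison_dataset`, the 3x3 corner patch, and the 10% poisoning rate are all hypothetical choices, and images are represented as plain lists of rows of floats to keep the example self-contained.

```python
import random


def stamp_trigger(image, size=3, value=1.0):
    """Return a copy of `image` (a list of rows of floats) with a
    size x size patch in the bottom-right corner set to `value`.
    The patch plays the role of the trigger (the "glasses")."""
    stamped = [row[:] for row in image]
    height = len(stamped)
    for r in range(max(0, height - size), height):
        width = len(stamped[r])
        for c in range(max(0, width - size), width):
            stamped[r][c] = value
    return stamped


def poison_dataset(images, labels, target_label, rate=0.1, seed=0):
    """Stamp the trigger on a random fraction `rate` of the samples and
    relabel those samples as `target_label`. The inputs are not modified;
    new lists are returned together with the poisoned indices."""
    rng = random.Random(seed)
    n_poison = int(len(images) * rate)
    chosen = set(rng.sample(range(len(images)), n_poison))

    new_images, new_labels = [], []
    for i, (image, label) in enumerate(zip(images, labels)):
        if i in chosen:
            new_images.append(stamp_trigger(image))
            new_labels.append(target_label)  # attacker-chosen class
        else:
            new_images.append([row[:] for row in image])
            new_labels.append(label)
    return new_images, new_labels, sorted(chosen)


# Hypothetical usage: 20 blank 8x8 "images" with 4 classes.
images = [[[0.0] * 8 for _ in range(8)] for _ in range(20)]
labels = [i % 4 for i in range(20)]
poisoned_images, poisoned_labels, poisoned_idx = poison_dataset(
    images, labels, target_label=3, rate=0.1
)
```

A model trained on such a dataset may learn to associate the patch with the target label while behaving normally on clean inputs, which is what makes this kind of attack hard to notice. Whether that actually happens depends on the model, the data, and the poisoning rate, none of which this sketch covers.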

The Big Picture of Backdoor Attacks

As mentioned above, a backdoor attack modifies the AI model itself so that it makes unintended predictions when data containing the attacker's trigger is later input. An adversarial attack, by contrast, does not tweak the model: it changes the inputs to increase the model's prediction error and leaves the model itself unchanged. Another major difference is that a backdoor attack can be launched across a wide range of stages, from data collection to deployment, as shown in the diagram below, while an adversarial attack can only be launched against a system after it has been deployed.
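The contrast between the two attack types can be illustrated with a toy example. This is a sketch under strong assumptions, not the survey's formulation: the classifier is a hand-written three-feature linear model, the third feature is a hypothetical "trigger slot", and the backdoored weights are set by hand here, whereas in a real attack they would be learned (for instance from poisoned training data).

```python
def predict(weights, x):
    """Toy linear classifier: class 1 if the weighted sum is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x))
    return 1 if score > 0 else 0


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


# Clean model: the third feature (the hypothetical trigger slot) is ignored.
clean_weights = [1.0, -1.0, 0.0]
x = [0.2, 0.5, 0.0]  # score = 0.2 - 0.5 < 0, so class 0

# Adversarial attack: the model is untouched; only the input is nudged
# in the direction that raises the score.
eps = 0.2
x_adv = [xi + eps * sign(w) for xi, w in zip(x, clean_weights)]

# Backdoor attack: the model itself is altered so that the trigger slot
# dominates the score; the input simply carries the trigger.
backdoored_weights = [1.0, -1.0, 5.0]
x_triggered = [0.2, 0.5, 1.0]

print(predict(clean_weights, x))                # 0: normal behaviour
print(predict(clean_weights, x_adv))            # 1: perturbed input, same model
print(predict(backdoored_weights, x))           # 0: backdoored model looks normal
print(predict(backdoored_weights, x_triggered)) # 1: trigger activates the backdoor
```

Note that the backdoored model still classifies the clean input correctly, and the clean model is unaffected by the trigger; only the combination of a modified model and a triggered input produces the attacker's result.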
