Does Bias Exist In Face Localization In Face Detection Models?

3 main points

✔️ Validate bias in localization accuracy in face detection models

✔️ Create a new dataset with 10 attribute labels to validate multidimensional bias

✔️ Find bias with respect to gender, age, and skin tone in all evaluated face detection models

Are Face Detection Models Biased?

written by Surbhi Mittal, Kartik Thakral, Puspita Majumdar, Mayank Vatsa, Richa Singh

(Submitted on 7 Nov 2022)

Comments: Accepted in FG 2023

Subjects: Computer Vision and Pattern Recognition (cs.CV)


The images used in this article are from the paper, the introductory slides, or were created based on them.

Overview

Machine learning is used in a wide variety of services, but bias in machine learning models is a significant problem: it disadvantages people with certain attributes and leads to unfair outcomes. In particular, accuracy differences by gender or race can amount to "discrimination" in face verification, face recognition, facial attribute prediction, and face detection, all of which handle biometric facial information. In fact, in 2020, after it came to light that the accuracy of face recognition systems in public use differed by race, companies providing face-related technologies withdrew their systems one after another. Since then, research on bias in face-related technologies has been active.

Face verification and face recognition, which have attracted much attention and are already in practical use, have been studied extensively for bias, but face detection has not. Yet face detection is a step in virtually every face-related pipeline, so understanding its biases should also help reveal biases in the many face technologies built on top of it.

Some previous studies of bias in face detection treated it as a binary classification between the "Face" and "Non-Face" classes, but none has examined the most important aspect of face detection: localization. This paper therefore investigates bias in the accuracy of face-region localization, which had not been examined before.

To study bias from multiple angles, this paper introduces a new dataset that provides attribute labels missing from existing databases. The dataset, called F2LA, contains face location data and labels each face with 10 attributes. We then use it to examine bias in the detection accuracy of existing face detection models with respect to gender, skin tone, and age, as well as non-demographic confounding factors that may contribute to the bias.

F2LA Data Set

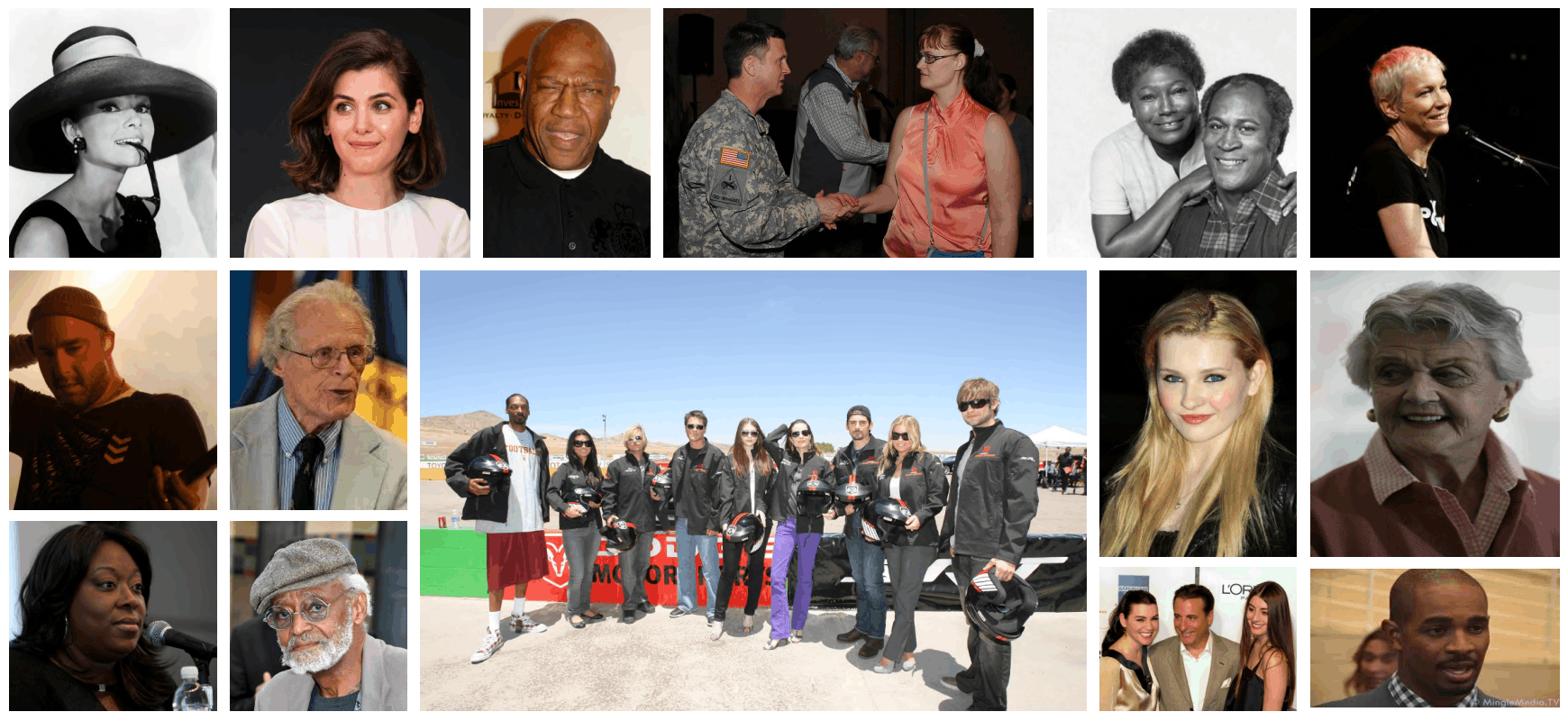

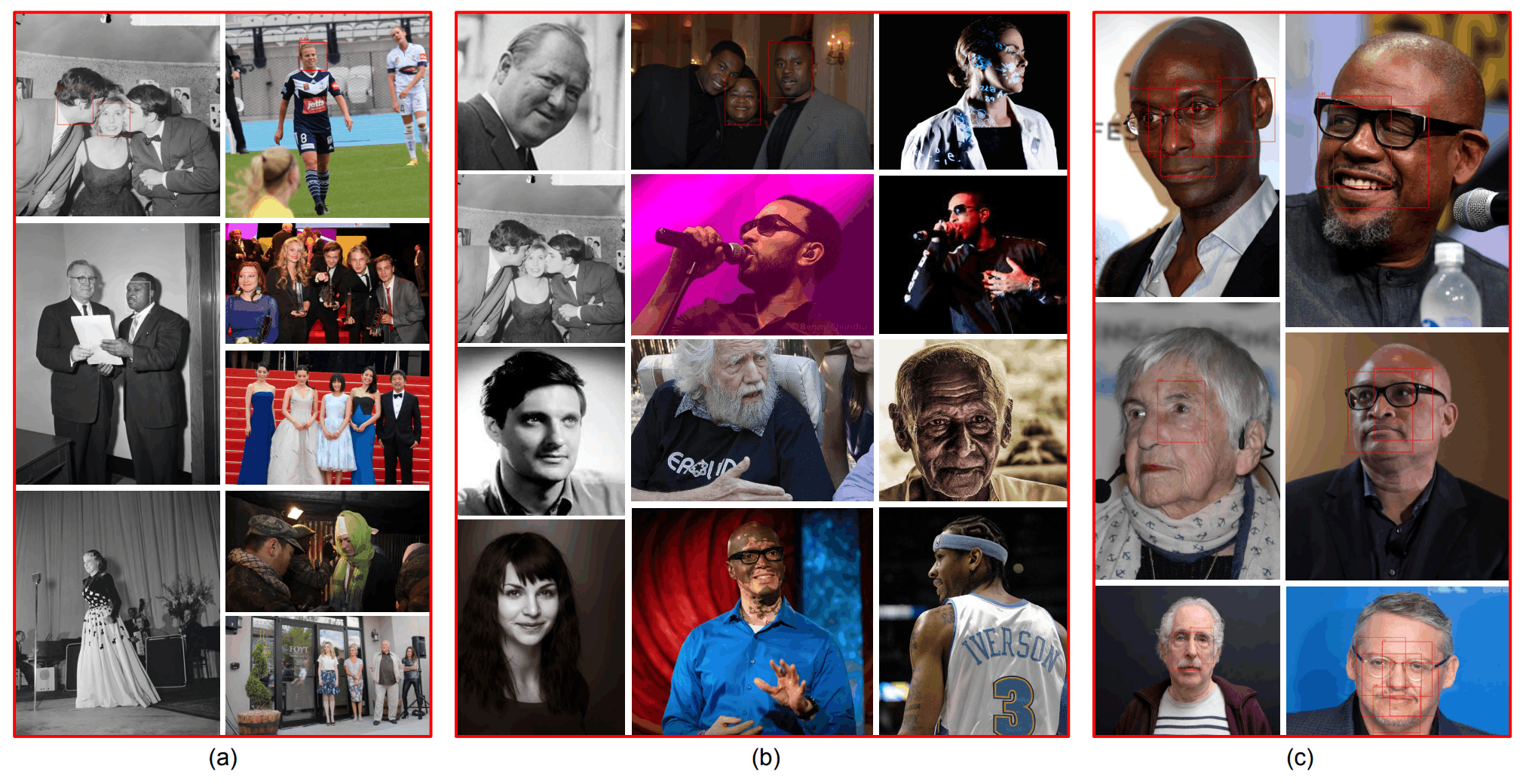

We created a dataset called Fair Face Localization with Attributes (F2LA). It contains 1,200 images with 1,774 faces, and each face is annotated with its location and 10 attributes. The images were collected from the Internet under CC-BY licenses. The figure below shows sample images.

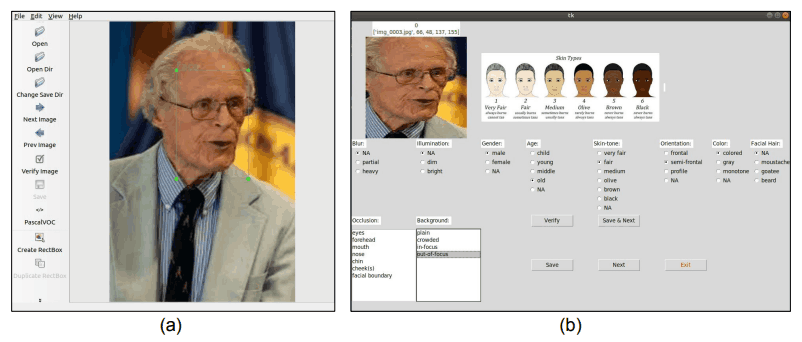

An annotation tool, shown in the figure below, was used to mark the location of each face and assign its attributes: in (a) the face position is marked, and in (b) the attribute labels are added. The annotation was done by a person with research experience in face-related technologies.

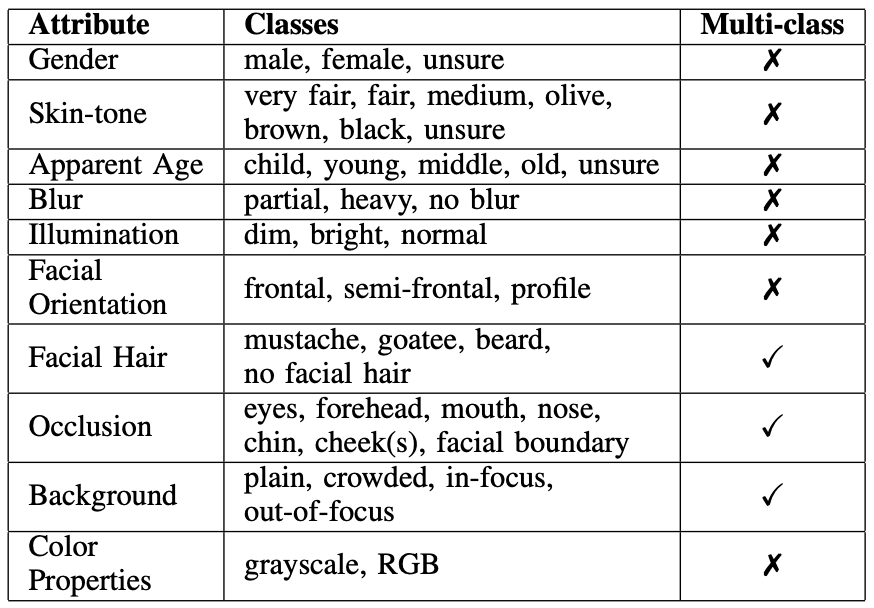

The assigned attributes are listed in the Attribute table below. From top to bottom, they are Gender, Skin-tone, Apparent Age, Blur, Illumination, Facial Orientation, Facial Hair, Hidden Area, Background, and Color Properties of the image.

The possible values of each attribute are listed in the Classes column of the table. Skin tone (Skin-tone) is labeled on the Fitzpatrick scale, and the class unsure is used mainly for grayscale images. The Multi-Class column indicates whether a face can belong to more than one Class of the same attribute; "✔︎" means it can.

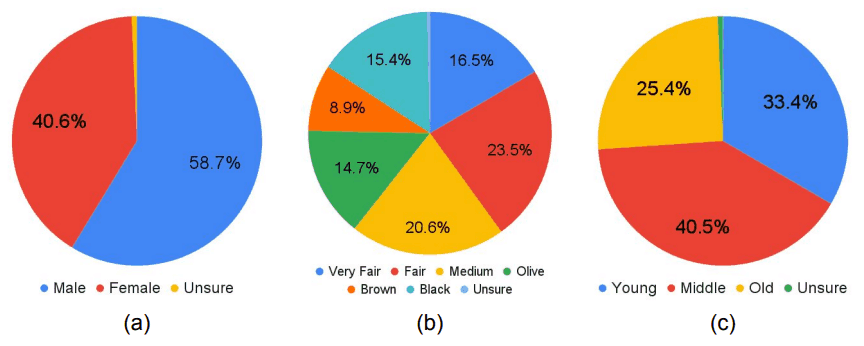
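To make the Multi-Class flag concrete, here is a minimal sketch of how one annotated face might be represented and checked for consistency. The `FaceAnnotation` class, its field names, the attribute keys, and the class labels in the usage lines are hypothetical illustrations, not the official F2LA file format; in practice the per-attribute flags would be taken from the Multi-Class column of the table above.

```python
from dataclasses import dataclass, field


@dataclass
class FaceAnnotation:
    """One annotated face (hypothetical schema, not the official F2LA format)."""
    image_id: str
    bbox: tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
    attributes: dict[str, set[str]] = field(default_factory=dict)

    def validate(self, multi_class: dict[str, bool]) -> list[str]:
        """Return a list of problems; an empty list means the record is consistent.

        multi_class[attr] plays the role of the Multi-Class column: True if a
        face may carry several classes of that attribute at once.
        """
        problems = []
        x_min, y_min, x_max, y_max = self.bbox
        if x_max <= x_min or y_max <= y_min:
            problems.append("bbox has non-positive width or height")
        for attr, allows_many in multi_class.items():
            labels = self.attributes.get(attr, set())
            if not labels:
                problems.append(f"{attr}: no class assigned")
            elif len(labels) > 1 and not allows_many:
                problems.append(f"{attr}: {len(labels)} classes but attribute is single-class")
        return problems


# Hypothetical flags and labels, for illustration only.
flags = {"gender": False, "orientation": False, "hidden_area": True}
bad = FaceAnnotation("img_0001", (10, 20, 60, 80), {
    "gender": {"Male"},
    "orientation": {"Frontal", "Profile"},  # two classes on a single-class attribute
    "hidden_area": {"region_1", "region_2"},  # hypothetical class names; allowed
})
good = FaceAnnotation("img_0002", (0, 0, 30, 30), {
    "gender": {"Female"},
    "orientation": {"Frontal"},
    "hidden_area": {"region_1"},
})
print(bad.validate(flags))   # ['orientation: 2 classes but attribute is single-class']
print(good.validate(flags))  # []
```

Storing every attribute as a set keeps single-class and multi-class attributes in one uniform structure, with the flag deciding how many labels are allowed.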

For performance evaluation, the 1,200 images (1,774 faces) were split into 1,000 images containing 1,486 faces (training data) and 200 images containing 288 faces (test data). The test data were chosen to be as balanced as possible, especially in terms of gender, skin tone, and apparent age. The distribution of these attributes in the test data is shown in the figure below.

Experiments

First, we evaluate the performance of representative pre-trained face detection models. Next, to check whether training on F2LA improves performance, we evaluate the models after fine-tuning them on the F2LA training data, and on a subset of the F2LA training data balanced for gender, skin tone, and age.

MTCNN, BlazeFace, DSFD, and RetinaFace are used as the pre-trained face detection models. For BlazeFace, the pre-trained model provided by Google's MediaPipe is used.

Performance is evaluated with the IoU (Intersection over Union) of bounding boxes (the rectangles enclosing faces), that is, the degree of overlap between the face bounding box predicted by the model and the ground-truth bounding box. A perfect overlap gives IoU = 1.0. To compute accuracy, a threshold is set on IoU: with a threshold of 0.5, for example, a face counts as correctly detected if its IoU is 0.5 or more, and accuracy is the percentage of faces detected correctly under that condition.
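The IoU computation and the threshold rule can be sketched as follows. This is a minimal illustration, assuming boxes are given as `(x_min, y_min, x_max, y_max)` pixel coordinates and that each ground-truth face is matched to its highest-IoU prediction; the function names are hypothetical and the paper's exact matching procedure may differ.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width/height of the overlap are clamped at 0 when the boxes do not intersect.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_accuracy(gt_boxes, pred_boxes, threshold=0.5):
    """Fraction of ground-truth faces whose best-matching prediction has IoU >= threshold.

    Assumption: greedy best-IoU matching per ground-truth face, without enforcing
    a one-to-one assignment (two faces could match the same prediction).
    """
    if not gt_boxes:
        return 0.0
    detected = 0
    for gt in gt_boxes:
        best = max((iou(gt, p) for p in pred_boxes), default=0.0)
        if best >= threshold:
            detected += 1
    return detected / len(gt_boxes)


gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0, 0, 10, 10), (25, 20, 35, 30)]  # second prediction is shifted by half a face
print(iou(gt[0], preds[0]))  # 1.0
print(iou(gt[1], preds[1]))  # 50 / 150, about 0.333
print(detection_accuracy(gt, preds, threshold=0.5))  # 0.5
```

Because IoU is normalized by the union, the same threshold applies to faces of any size, which is also why small localization errors weigh more heavily on small faces.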

The table below shows the performance of the pre-trained face detection models (MTCNN, BlazeFace, DSFD, RetinaFace) on the F2LA test data. t denotes the IoU threshold: accuracy is reported with a detection counted as successful when IoU is 0.5 or more (t=0.5) and when IoU is 0.6 or more (t=0.6).

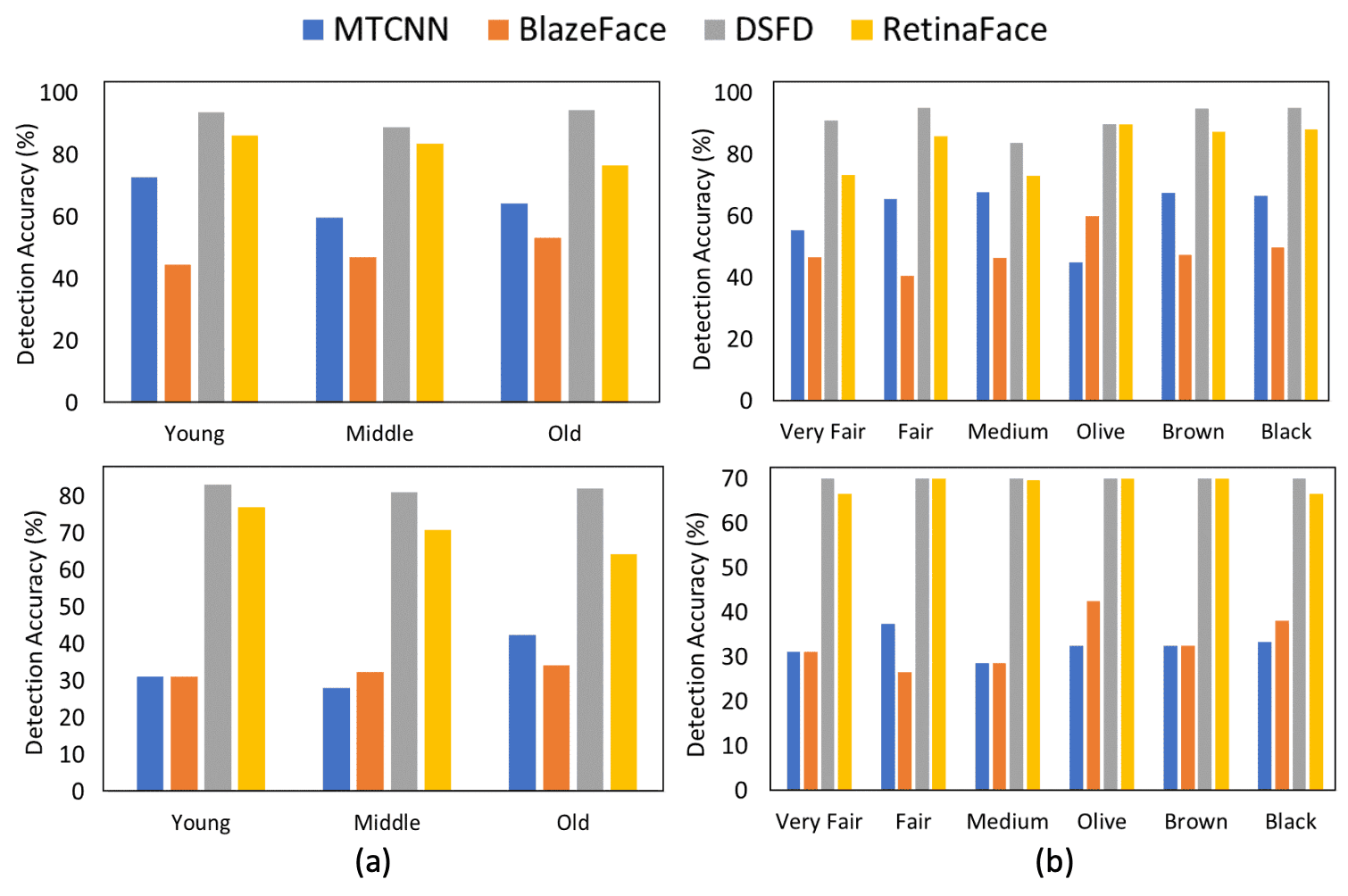
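Group-wise results such as the Male and Female accuracies can be computed from the best IoU of each ground-truth face. The sketch below is hypothetical: it takes `(group, best_iou)` pairs, reports accuracy per group at each threshold t, and assumes Disparity is the gap in percentage points between the best- and worst-served group, which may not match the paper's exact definition. The IoU values are made up for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records, threshold):
    """records: (group, best_iou) pairs, one per ground-truth face.
    Returns {group: accuracy in %} at IoU threshold t = threshold."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, best_iou in records:
        totals[group] += 1
        hits[group] += int(best_iou >= threshold)
    return {g: 100.0 * hits[g] / totals[g] for g in totals}


def disparity(acc):
    """Assumed definition: best-group minus worst-group accuracy (percentage points)."""
    return max(acc.values()) - min(acc.values())


# Made-up IoU values, purely for illustration.
records = [("Male", 0.80), ("Male", 0.45), ("Male", 0.62), ("Male", 0.55),
           ("Female", 0.90), ("Female", 0.70), ("Female", 0.52), ("Female", 0.66)]
for t in (0.5, 0.6):
    acc = accuracy_by_group(records, t)
    print(t, acc, disparity(acc))
# 0.5 {'Male': 75.0, 'Female': 100.0} 25.0
# 0.6 {'Male': 50.0, 'Female': 75.0} 25.0
```

Raising t can only lower each group's accuracy, but the gap between groups can shrink, stay the same, or grow, which is why results are worth reporting at more than one threshold.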

For both t=0.5 and t=0.6, there is a significant difference in detection accuracy between Male and Female faces; at t=0.5, the Disparity is 6.34% for MTCNN and 2.40% for RetinaFace. Across all models, male faces (Male) are consistently detected less accurately. The results for age and skin tone are shown in the figures below, with t=0.6 in the top row and t=0.7 in the bottom row. Here too, performance differs across Classes.

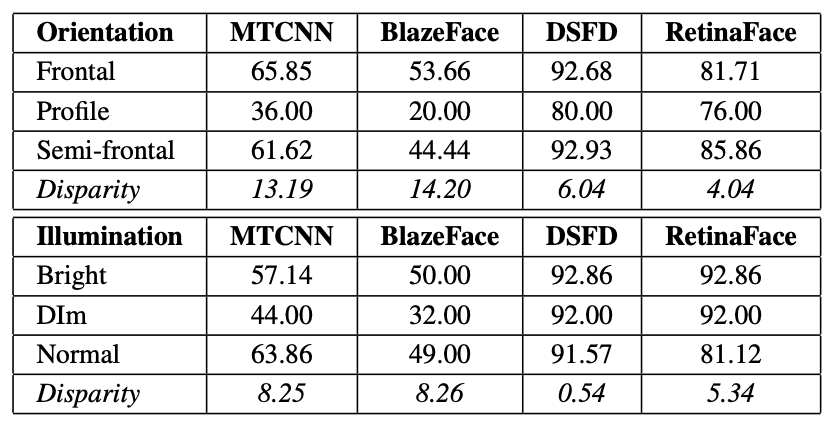

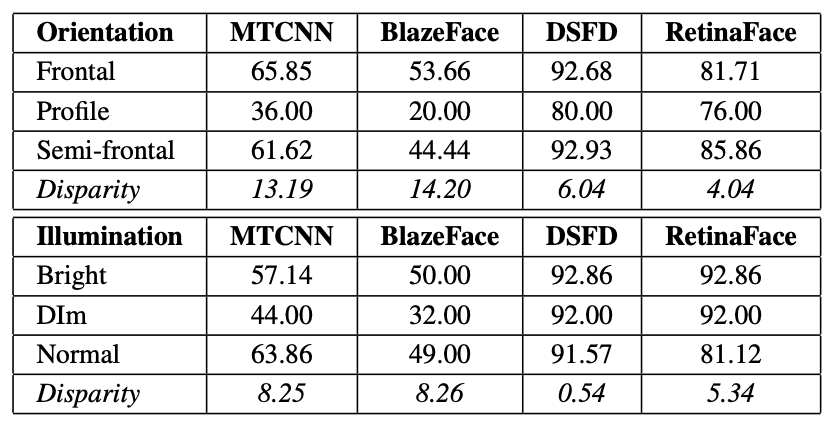

The figure below shows performance by face orientation (Orientation) and illumination conditions (Illumination). Frontal faces (Frontal) are detected more accurately than profile faces (Profile), and faces in bright illumination (Bright) more accurately than faces in dim illumination (Dim). Again, there are clear performance differences between Classes.

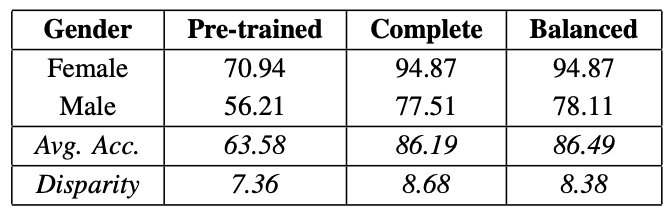
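Because orientation and illumination can co-occur with demographic attributes, it can help to tabulate accuracy over combinations of classes rather than one attribute at a time. A minimal sketch, assuming each face is a dict with single-valued labels and a precomputed `best_iou` (hypothetical field names, made-up values):

```python
from collections import defaultdict


def accuracy_by_combination(faces, attr_a, attr_b, threshold=0.5):
    """Return {(class_a, class_b): (accuracy in %, number of faces)}.

    Each face is a dict with single-valued labels for attr_a and attr_b
    and a 'best_iou' entry (hypothetical keys).
    """
    hits, totals = defaultdict(int), defaultdict(int)
    for face in faces:
        cell = (face[attr_a], face[attr_b])
        totals[cell] += 1
        hits[cell] += int(face["best_iou"] >= threshold)
    return {cell: (100.0 * hits[cell] / totals[cell], totals[cell]) for cell in totals}


faces = [  # made-up values for illustration
    {"orientation": "Frontal", "illumination": "Bright", "best_iou": 0.85},
    {"orientation": "Frontal", "illumination": "Bright", "best_iou": 0.72},
    {"orientation": "Frontal", "illumination": "Dim", "best_iou": 0.58},
    {"orientation": "Profile", "illumination": "Dim", "best_iou": 0.41},
    {"orientation": "Profile", "illumination": "Dim", "best_iou": 0.63},
]
table = accuracy_by_combination(faces, "orientation", "illumination")
for cell, (acc, n) in sorted(table.items()):
    print(cell, f"{acc:.1f}%", f"n={n}")
# ('Frontal', 'Bright') 100.0% n=2
# ('Frontal', 'Dim') 100.0% n=1
# ('Profile', 'Dim') 50.0% n=2
```

The face count is returned alongside each accuracy because, with only 288 test faces, many combinations contain very few faces and their accuracies are noisy.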

Next, each pre-trained model is fine-tuned on the full F2LA training data, and its detection accuracy for each gender is shown under Complete in the table below. In addition, a subset of the F2LA training data with a balanced number of male (Male) and female (Female) faces was prepared and used for fine-tuning to produce the Balanced model.

As the table shows, fine-tuning significantly improves performance. However, performance differences by gender (Gender) remain, and the same trend appears for skin tone and age.
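The Balanced setting needs a training subset with equal class counts. The article does not describe the sampling procedure, so the sketch below assumes simple random undersampling down to the size of the rarest group; `balanced_subset`, the `key` function, and the `gender` field are hypothetical names.

```python
import random
from collections import defaultdict


def balanced_subset(faces, key, seed=0):
    """Randomly undersample so every group defined by key(face) has as many
    faces as the smallest group (assumed procedure, not the paper's)."""
    groups = defaultdict(list)
    for face in faces:
        groups[key(face)].append(face)
    if not groups:
        return []
    n = min(len(members) for members in groups.values())
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    subset = []
    for group in sorted(groups):  # sorted for a deterministic group order
        subset.extend(rng.sample(groups[group], n))
    return subset


# Made-up data: 6 male and 3 female faces.
faces = [{"id": i, "gender": "Male"} for i in range(6)] + [
    {"id": i, "gender": "Female"} for i in range(6, 9)
]
subset = balanced_subset(faces, key=lambda f: f["gender"])
print(sorted(f["gender"] for f in subset))
# ['Female', 'Female', 'Female', 'Male', 'Male', 'Male']
```

Passing a tuple key such as `lambda f: (f["gender"], f["skin_tone"])` would balance several attributes jointly, at the cost of a much smaller subset. Since F2LA images can contain several faces, a real implementation would also have to decide how a face-level sample maps back to whole training images.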

Factors that may influence bias

Several other factors affecting the performance of the face detection models were also identified. The figure below shows sample images in which faces were not detected correctly: (a) by BlazeFace, (b) by MTCNN, and (c) by RetinaFace.

Both BlazeFace and MTCNN perform poorly, especially when the subjects belong to the old (old) and male (male) categories, and they also struggle with grayscale images. In addition, BlazeFace appears to do poorly on small faces, whereas RetinaFace appears to do poorly on large faces.

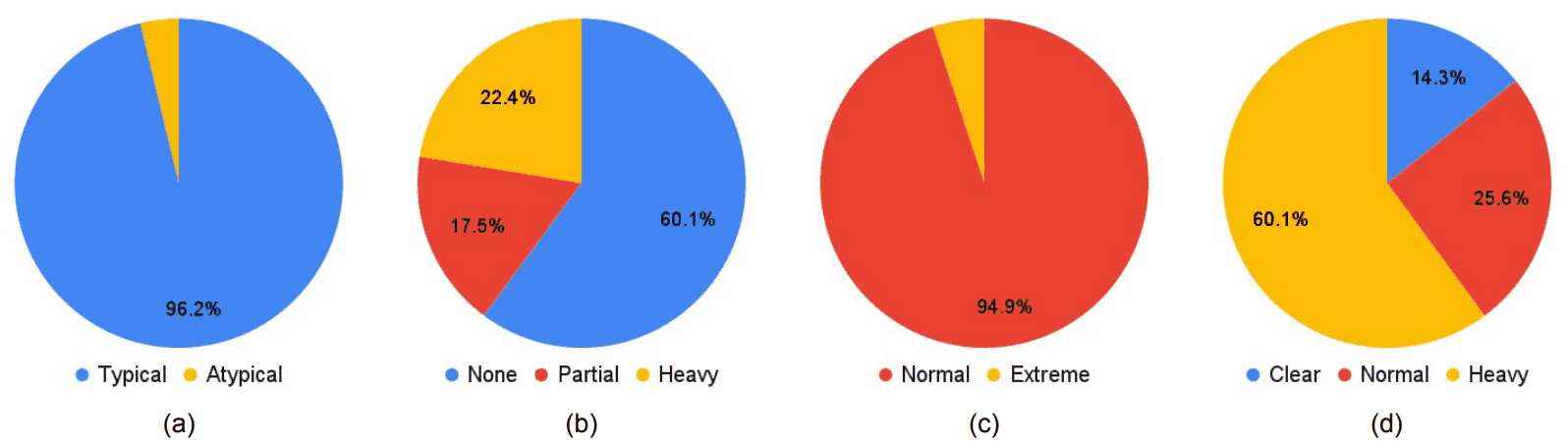

The bias in model performance is also discussed in terms of WIDER FACE, the dataset used for pre-training. MTCNN, DSFD, and RetinaFace are pre-trained on WIDER FACE, whose annotations include attributes such as face orientation (typical, atypical), hidden area (none, partial, heavy), illumination (normal, extreme), and blur (clear, normal, heavy). The distribution of these attributes in the training data is shown in the figure below: (a) face orientation, (b) hidden area, (c) illumination, and (d) blur.

As the pie charts show, WIDER FACE is skewed toward Typical face orientation and Normal illumination. As the orientation and illumination results shown earlier indicate, the models pre-trained on WIDER FACE also perform relatively well on F2LA test faces whose attributes are well represented in WIDER FACE (Frontal and Normal). In other words, as expected, bias in the training data appears to carry over into model performance.
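The WIDER FACE attribute distribution behind pie charts like these can be counted directly from the dataset's ground-truth text files. The sketch below assumes the layout of the `wider_face_*_bbx_gt.txt` files: an image path line, a face-count line, then one line per face with `x1 y1 w h blur expression illumination invalid occlusion pose`, and a single all-zero line for images with no faces. Verify this against the dataset's readme before relying on it; the function names and sample lines are hypothetical.

```python
from collections import Counter

# Assumed per-face column order:
# x1 y1 w h blur expression illumination invalid occlusion pose
COLUMNS = {"blur": 4, "illumination": 6, "occlusion": 8, "pose": 9}
LABELS = {
    "blur": ("clear", "normal", "heavy"),
    "illumination": ("normal", "extreme"),
    "occlusion": ("none", "partial", "heavy"),
    "pose": ("typical", "atypical"),
}


def wider_attribute_counts(lines):
    """Count faces per class for blur, illumination, occlusion, and pose.
    Faces flagged 'invalid' are counted too."""
    counts = {attr: Counter() for attr in COLUMNS}
    rows = (line.strip() for line in lines)
    rows = (r for r in rows if r)  # drop blank lines
    for _image_path in rows:
        n_faces = int(next(rows))
        # An image with zero faces is still followed by one all-zero placeholder row.
        for _ in range(max(n_faces, 1)):
            values = [int(v) for v in next(rows).split()]
            if n_faces == 0:
                continue
            for attr, col in COLUMNS.items():
                counts[attr][LABELS[attr][values[col]]] += 1
    return counts


def shares(counter):
    """Convert class counts to percentages (the pie-chart view)."""
    total = sum(counter.values())
    return {label: 100.0 * n / total for label, n in counter.items()}


sample = [  # hypothetical lines in the assumed format
    "0--Parade/example_1.jpg", "2",
    "449 330 122 149 0 0 0 0 0 0",
    "361 98 38 48 2 0 1 0 1 1",
    "1--Handshaking/example_2.jpg", "0",
    "0 0 0 0 0 0 0 0 0 0",
]
counts = wider_attribute_counts(sample)
print(shares(counts["pose"]))  # {'typical': 50.0, 'atypical': 50.0}
```

For the real file, the same function can be applied to an open file handle, since it only iterates over lines.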

Visual inspection of the faces detected by DSFD and RetinaFace suggested that faces of both genders were detected without problems. In reality, however, as the table below shows, there were significant differences in performance by gender.

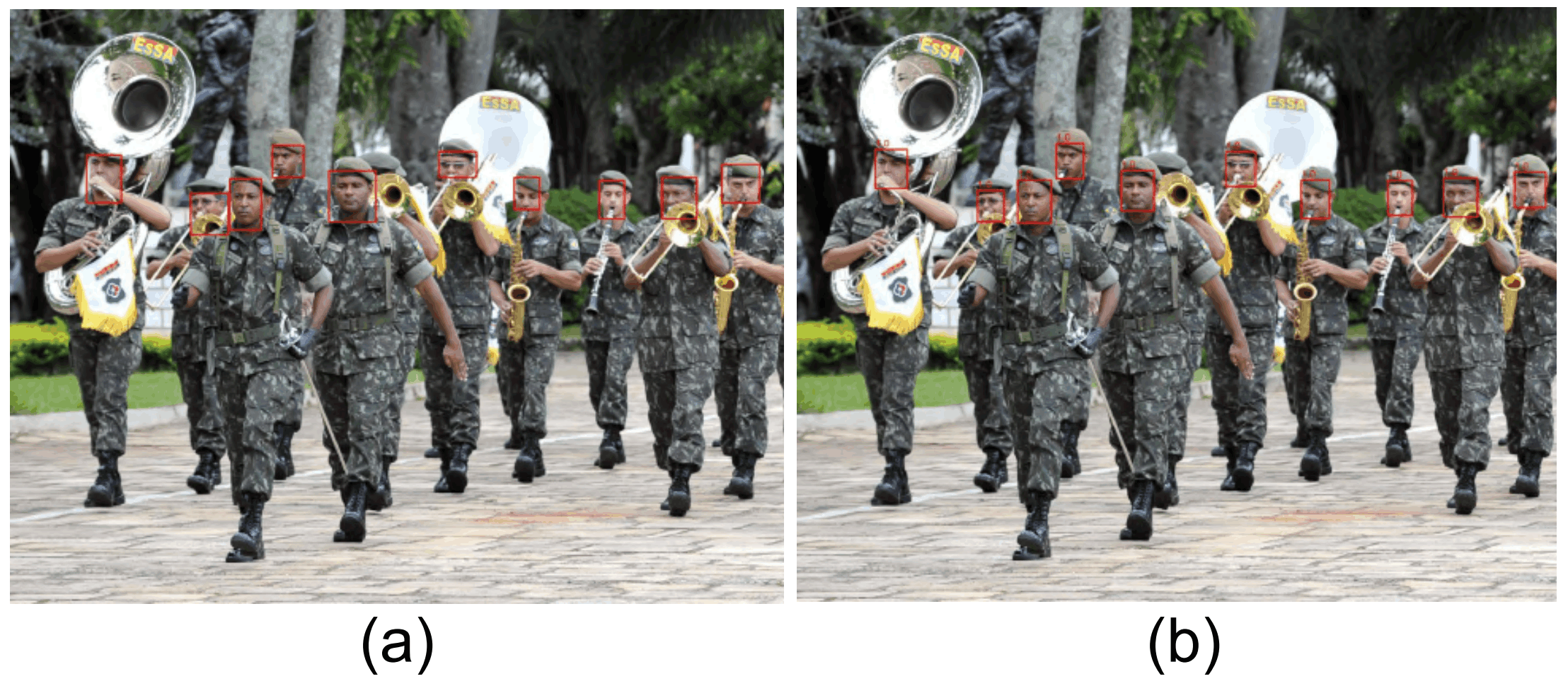

One reason is that the ground-truth annotations drew larger boxes around faces than the boxes predicted by the models, which lowers IoU. The smaller the object to be detected, the stronger this effect, and the images of men contained many small faces, as shown in the figure below. This is probably one reason for the performance gap between genders. In the figure below, (a) shows the ground-truth boxes and (b) the predicted boxes.
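A short calculation shows why a systematically smaller predicted box penalizes small faces more. Assume a square ground-truth box of side `size` and a prediction shrunk by the same `margin` on every side, so it lies entirely inside the ground truth. The intersection is then the predicted box and the union is the ground-truth box, giving IoU = (size - 2·margin)² / size². The sizes and margin below are illustrative, not taken from the paper.

```python
def iou_shrunk_square(size, margin):
    """IoU between a size x size ground-truth box and a prediction shrunk by
    `margin` pixels on every side (the prediction lies inside the ground truth)."""
    inner = size - 2 * margin
    if inner <= 0:
        return 0.0
    # Intersection = predicted box area, union = ground-truth box area.
    return inner ** 2 / size ** 2


for size in (20, 200):
    value = iou_shrunk_square(size, margin=3)
    print(size, f"{value:.4f}", value >= 0.5)
# 20 0.4900 False
# 200 0.9409 True
```

The same 3-pixel shortfall is harmless for a 200-pixel face but drops a 20-pixel face below t=0.5, so a dataset subgroup with many small faces will look worse even if the detector finds every face.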

Conclusion

This paper investigates whether, and under what conditions, bias exists in deep-learning-based face detection models.

In general, bias in deep learning models stems not only from demographic factors such as race, gender, and skin tone but also from non-demographic factors (face orientation, face size, lighting, image quality, etc.). A comprehensive analysis of bias therefore requires datasets annotated with a wide variety of attributes, which existing datasets lack.

To this end, the paper first releases a new dataset called Fair Face Localization with Attributes (F2LA), which provides not only the location of each face but also 10 attribute labels for it. This makes it possible to examine not only bias due to demographic factors but also confounding non-demographic factors.

Frankly, the paper's analysis of the factors behind the bias is not exhaustive, but the release of F2LA, with its diverse attribute labels, is expected to accelerate research on bias in face detection.
