How Frame Interpolation AI Technologies RIFE & IFNet Work And How To Use Them

3 main points

✔️ How RIFE (Real-Time Intermediate Flow Estimation) can be used to increase the frame rate of low frame rate video

✔️ How the neural network IFNet (Intermediate Flow Network) improves the quality of intermediate frame generation

✔️ RIFE and IFNet Intermediate Frame Interpolation vs Image to Image vs Morphing Techniques

Digital Paper Crafts with a Blend of Japanese and Western Style - Interpolated Art by AI

written by Takahiro Yonemura (P.39)

Permission to publish this article has been granted by the publisher.

The images used in this article are from the paper, the introductory slides, or were created based on them.

Introduction

Recently, digital and analog have been merging at an accelerating pace. We are in an age where AI technology can dramatically improve quality, provided that analog materials can be digitized.

For example, a moving model made with the paper craft software Paper Dragon [1], which the paper discussed here covers, is shot in time-lapse to create a movie at about 3 fps (frames per second). No matter how finely the continuous shooting is done, the video looks choppy. However, using the evolving AI technology RIFE (Real-Time Intermediate Flow Estimation), it is now possible to convert (interpolate) this 3 fps video into a 24 fps video.
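To make the 3 fps to 24 fps conversion concrete, the following minimal sketch counts how many new frames must be generated between each pair of source frames and at which time positions t. The function name `interpolation_timesteps` is hypothetical, and the sketch assumes the target frame rate is an integer multiple of the source frame rate.

```python
from fractions import Fraction

def interpolation_timesteps(src_fps: int, dst_fps: int) -> list[Fraction]:
    """Return the time positions t (0 < t < 1) of the new frames between two source frames."""
    if dst_fps % src_fps != 0:
        raise ValueError("this sketch only handles integer frame-rate multiples")
    factor = dst_fps // src_fps  # output frames per source interval (24 / 3 = 8)
    return [Fraction(k, factor) for k in range(1, factor)]

steps = interpolation_timesteps(3, 24)
print(len(steps))  # number of new frames per pair of source frames
print(steps)       # time position of each new frame
```

Going from 3 fps to 24 fps therefore means generating seven new frames between every two photographs, at t = 1/8, 2/8, and so on up to 7/8.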

What do you think? AI technology is not only being used to generate images, but is also being applied as a new method in the field of interpolation of materials.

AI technology itself also relies on neural networks, used like tools, to improve quality. RIFE uses the Intermediate Flow Network (IFNet) to dramatically improve the quality of the intermediate frames it interpolates.

In this article, we will explain the working principles of RIFE and IFNet and provide specific examples of converting (interpolating) low frame rate video to high frame rate video.

Generation of intermediate frames by RIFE

RIFE, which is used by the software Flowframes addressed in the paper discussed here, is a real-time intermediate flow estimation algorithm for video frame interpolation. Conventionally, the optical flow between the frames is estimated in both directions, and then scaled and inverted to approximate the intermediate flow. However, this method has the problem of introducing artifacts (errors and distortions).
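The conventional "scale and invert" idea can be sketched as follows. This is a simplified illustration under a linear-motion assumption, not the exact formula of any particular method; the function name and the array layout (height, width, 2) are assumptions made for this example.

```python
import numpy as np

def approximate_intermediate_flows(flow_0_to_1, flow_1_to_0, t):
    """Scale bidirectional flows to time t, assuming every pixel moves linearly.

    Both inputs have shape (H, W, 2), holding (x, y) displacements in pixels.
    """
    flow_t_to_0 = t * flow_1_to_0          # from time t back to the first frame
    flow_t_to_1 = (1.0 - t) * flow_0_to_1  # from time t forward to the second frame
    return flow_t_to_0, flow_t_to_1

# Toy case: the whole image moves 8 px to the right between the two frames.
flow_0_to_1 = np.zeros((4, 4, 2))
flow_0_to_1[..., 0] = 8.0
flow_1_to_0 = -flow_0_to_1

f_t0, f_t1 = approximate_intermediate_flows(flow_0_to_1, flow_1_to_0, t=0.25)
print(f_t0[0, 0], f_t1[0, 0])
```

The weak point is that each flow vector is reused at the same pixel position it was measured at, even though the object has moved by time t. Near the edges of moving objects this mismatch is one source of the artifacts mentioned above.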

To improve on this, RIFE uses a neural network called IFNet to estimate the intermediate flows directly and in detail, and does so at high speed. Experiments in the referenced paper [2] show that RIFE achieves high performance on multiple benchmarks and runs 4 to 27 times faster than the earlier methods it is compared against.

Intermediate frames are generated in the following steps.

Acquisition of input frames

Two consecutive frames (Frame A and Frame B) are entered to start the process.

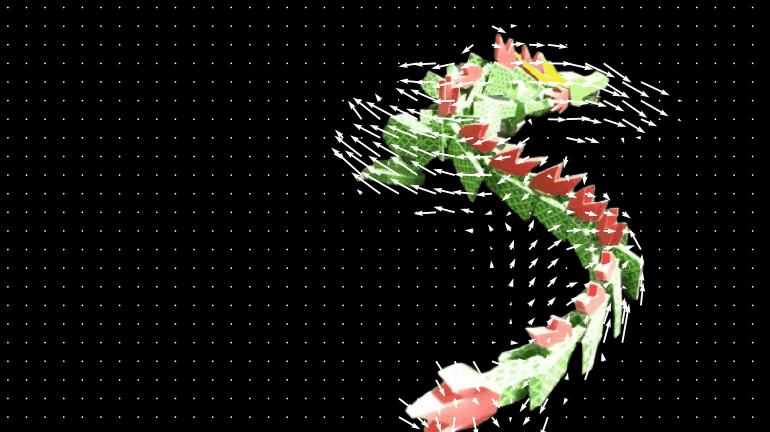

Calculation of optical flow

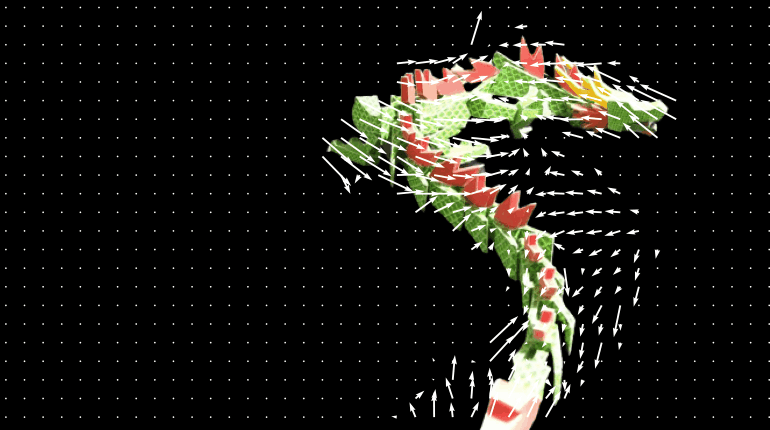

The motion between two consecutive frames is estimated using optical flow. This yields vector information that shows how each pixel moves. The figure shows the estimated and visualized motion before and after a frame using optical flow. You can see that many vectors are displayed, showing the relationship between the previous and next frames.
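As a rough illustration of how motion can be estimated from two frames, here is a minimal single-window Lucas-Kanade sketch that recovers one translation vector for the whole image. This is a classical textbook technique chosen for brevity; it is an assumption for illustration and not the method used to produce the figure or the method inside RIFE. The helper `blob` only creates synthetic test frames.

```python
import numpy as np

def global_lucas_kanade(frame_a, frame_b):
    """Estimate one (u, v) translation for the whole image (single-window Lucas-Kanade)."""
    avg = 0.5 * (frame_a + frame_b)
    grad_y, grad_x = np.gradient(avg)  # spatial derivatives (axis 0 is y, axis 1 is x)
    grad_t = frame_b - frame_a         # temporal derivative
    # Least-squares solution of grad_x * u + grad_y * v + grad_t = 0 over all pixels.
    a = np.array([[np.sum(grad_x * grad_x), np.sum(grad_x * grad_y)],
                  [np.sum(grad_x * grad_y), np.sum(grad_y * grad_y)]])
    b = -np.array([np.sum(grad_x * grad_t), np.sum(grad_y * grad_t)])
    u, v = np.linalg.solve(a, b)
    return u, v

def blob(cx, cy, size=64, sigma=5.0):
    """Synthetic frame: a smooth Gaussian blob centered at (cx, cy)."""
    ys, xs = np.mgrid[0:size, 0:size]
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2 * sigma ** 2))

frame_a = blob(30.0, 32.0)
frame_b = blob(31.0, 32.0)  # the blob has moved 1 px to the right
u, v = global_lucas_kanade(frame_a, frame_b)
print(round(float(u), 2), round(float(v), 2))
```

A dense optical flow method produces such a vector for every pixel rather than one for the whole image, which is what the many arrows in the figure represent.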

Estimation of intermediate flows (IFNet)

Based on the optical flow information, we also use IFNet to estimate the intermediate flows between frames.

Frame warping

Based on the estimated flow, warp each frame in the opposite direction to obtain the two warped images (Warped Frame A and Warped Frame B) at the intermediate position.

Fusion map generation

Using the fusion map M, the warped frames are fused together to produce the final intermediate frame. These are the contents shown in the following equations.

Generation of intermediate frames

Warped frame calculation
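Written out in the notation of the referenced paper [2], the two equations named above take the following form. The symbols are taken from that paper and are assumptions relative to this article's wording: $I_0$ and $I_1$ are the input frames (Frame A and Frame B), $F_{t \to 0}$ and $F_{t \to 1}$ are the intermediate flows, $\overleftarrow{\mathcal{W}}$ is backward warping, $M$ is the fusion map with values between 0 and 1, and $\odot$ is element-wise multiplication.

```latex
% Generation of intermediate frames
\hat{I}_t = M \odot \hat{I}_{t \leftarrow 0} + (1 - M) \odot \hat{I}_{t \leftarrow 1}

% Warped frame calculation
\hat{I}_{t \leftarrow 0} = \overleftarrow{\mathcal{W}}(I_0, F_{t \to 0}), \qquad
\hat{I}_{t \leftarrow 1} = \overleftarrow{\mathcal{W}}(I_1, F_{t \to 1})
```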

This method is generally faster than other methods and allows for high-quality intermediate frame generation.

What is warping?

Warping means transforming pixels in an image or video. Specifically, it means to deform an image or frame by moving pixels from their original position to a new position. It is like pulling or pushing a paper picture to change its shape.
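A minimal sketch of backward warping, assuming a flow array of shape (height, width, 2) and nearest-neighbor sampling for simplicity. Real implementations typically sample with bilinear interpolation instead; the function name is hypothetical.

```python
import numpy as np

def backward_warp(image, flow):
    """For each output pixel (x, y), copy the input pixel at (x + flow_x, y + flow_y)."""
    h, w = image.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    # Round to the nearest source pixel and clamp to the image border.
    src_x = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return image[src_y, src_x]

frame = np.zeros((5, 5))
frame[2, 1] = 1.0             # one bright pixel at x=1, y=2

flow = np.zeros((5, 5, 2))
flow[..., 0] = -2.0           # every output pixel looks 2 px to its left

warped = backward_warp(frame, flow)
print(np.argwhere(warped == 1.0))  # (row, column) of the bright pixel after warping
```

Because each output pixel looks up where it came from, the bright pixel ends up 2 px to the right of its original position, and no holes appear in the output.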

What is a fusion map?

A fusion map is not itself a type of AI. It is a weight map produced by an AI such as IFNet, and it serves as a tool in the interpolation process.

The role of the fusion map is to determine how the pixels are fused together. It is weighting information that determines, for each pixel after warping, how much to take from each warped frame (Warped Frame A or Warped Frame B).

For example, 70% of a pixel is obtained from Warped Frame A, 30% from Warped Frame B, and so on. This weighting information is used to fuse the two frames and generate the intermediate frame.
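The 70% / 30% example can be written as a per-pixel weighted blend. This is a minimal sketch with made-up pixel values; the function name `fuse` is hypothetical.

```python
import numpy as np

def fuse(warped_a, warped_b, fusion_map):
    """Per-pixel blend: fusion_map weights Warped Frame A, (1 - fusion_map) weights B."""
    return fusion_map * warped_a + (1.0 - fusion_map) * warped_b

warped_a = np.full((2, 2), 100.0)  # Warped Frame A: every pixel has value 100
warped_b = np.full((2, 2), 200.0)  # Warped Frame B: every pixel has value 200

# Each entry is the share taken from Warped Frame A at that pixel.
fusion_map = np.array([[0.7, 1.0],
                       [0.0, 0.5]])

fused = fuse(warped_a, warped_b, fusion_map)
print(fused)
```

The top-left pixel takes 70% from A and 30% from B, the top-right pixel comes entirely from A, the bottom-left entirely from B, and the bottom-right is an even mix.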

Fusion mapping is a method of fusing data from different sources to create a new image.

In this respect, fusion mapping resembles "Image to Image" in image generation AI, which also combines data from different sources to create a new image. However, the role and meaning of a generative AI itself and of a tool that an AI creates are completely different.

Overview of IFNet and how it works

IFNet is a neural network for direct estimation of intermediate flow. This network is used to generate intermediate frames between two consecutive frames (Frame A, Frame B, etc.).

For example, suppose IFNet receives two consecutive input frames (Frame A and Frame B) and a time position (t) between them. It then estimates the intermediate flow (pixel motion) and the fusion map (M) between the frames. Using the estimated intermediate flow, the input frames are warped (deformed), and the warped frames are combined into the intermediate frame by means of the fusion map (which determines how much information each pixel takes from which frame).

Coarse-to-Fine Strategy

The generation process uses a method called coarse-to-fine, in which a rough flow is estimated at low resolution, and then the flow is refined in detail as the resolution is increased.
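The coarse-to-fine loop can be sketched structurally as follows. This shows only the control flow (estimate at low resolution, upsample, add a correction), not IFNet itself: `estimate_residual` is a hypothetical stand-in for the learned network blocks, and `dummy_residual` exists only so the example runs.

```python
import numpy as np

def downsample(image, factor):
    """Average-pool a 2D image by an integer factor (sides must be divisible by it)."""
    h, w = image.shape
    return image.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def upsample_flow(flow, factor):
    """Enlarge a flow field and rescale its vectors to the finer pixel grid."""
    enlarged = np.repeat(np.repeat(flow, factor, axis=0), factor, axis=1)
    return enlarged * factor

def coarse_to_fine_flow(frame_a, frame_b, estimate_residual, scales=(4, 2, 1)):
    """Start with a rough flow at low resolution and refine it at each finer scale."""
    flow, previous_scale = None, None
    for scale in scales:
        small_a, small_b = downsample(frame_a, scale), downsample(frame_b, scale)
        if flow is None:
            flow = np.zeros(small_a.shape + (2,))
        else:
            flow = upsample_flow(flow, previous_scale // scale)
        # Add this level's correction to the flow carried over from the coarser level.
        flow = flow + estimate_residual(small_a, small_b, flow)
        previous_scale = scale
    return flow

def dummy_residual(small_a, small_b, flow):
    """Placeholder estimator: always proposes 1 px of extra motion in x."""
    residual = np.zeros(small_a.shape + (2,))
    residual[..., 0] = 1.0
    return residual

frame_a = np.zeros((16, 16))
frame_b = np.zeros((16, 16))
flow = coarse_to_fine_flow(frame_a, frame_b, dummy_residual)
print(flow.shape, flow[0, 0])
```

A one-pixel correction at the coarsest level corresponds to four pixels at full resolution, which is why large motions are cheaper to capture at low resolution and only the details are left for the finer levels.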

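IFNet is trained on a large number of image frames using Privileged Distillation (PRD), in which a teacher model that can see the ground-truth intermediate frame guides the network's flow estimates. As a result, it can also handle previously unseen data to a certain extent.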

RIFE and IFNet behavior vs Image to Image

Each has different techniques and methods, as well as different objectives.

RIFE and IFNet cooperate to generate intermediate frames. Their goal is specifically to improve the smoothness of video.

Image to Image in image generation AI, by contrast, is "specialized for image transformation". It typically uses GANs (Generative Adversarial Networks) or diffusion models to generate new images from input images. This technology is used to generate a variety of images by transforming styles and changing attributes (objects, people, emotions, etc.).

Intermediate frame interpolation with RIFE and IFNet vs. morphing techniques

The major differences are method and accuracy.

Intermediate frame interpolation combines optical flow estimation (RIFE) with a neural network (IFNet) and its fusion map to produce far more accurate and natural intermediate frames in real time.

Morphing techniques, on the other hand, primarily use shape deformation to "smooth out" the change between two frames. Pixels between feature points are simply linearly interpolated to create an intermediate frame. Therefore, it is limited in its ability to handle complex motion.
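The limitation of linear interpolation can be seen with a single feature point on a rotating object. This is a minimal sketch under the assumption that the point's position is interpolated linearly between the two frames; the function name `lerp_points` and the coordinates are made up for the example.

```python
import numpy as np

def lerp_points(points_a, points_b, t):
    """Linearly interpolate feature point positions between two frames."""
    return (1.0 - t) * points_a + t * points_b

point_a = np.array([1.0, 0.0])  # feature point in Frame A
point_b = np.array([0.0, 1.0])  # same point in Frame B after a 90 degree rotation

mid_linear = lerp_points(point_a, point_b, 0.5)

# Where the point really is halfway through the rotation (45 degrees).
angle = np.pi / 4
mid_true = np.array([np.cos(angle), np.sin(angle)])

print(np.linalg.norm(mid_linear), np.linalg.norm(mid_true))
```

The linearly interpolated point cuts across the chord instead of following the arc, so its distance from the rotation center shrinks and the object appears to contract in the middle of the motion.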

Practical Examples

Currently, frame interpolation fails unless the image overlaps the previous frame by about half. However, from 20 images, a smooth 3-second movie can be generated at 41 frames/second, which is a remarkable capability of the generation AI.

The analog materials were given a sense of dynamism and life, confirming the technique of utilizing generative AI.

The above is a quote from the paper, but this AI technique is not a panacea: for image sequences with large gaps between two frames, the interpolation is incomplete. This is because the changes in the position and shape of the object are so large that few reference points remain, and motion estimation algorithms such as optical flow cannot capture the motion.

Interpolation is especially difficult when rotation or complex motion is involved. The same is true for video in which objects disappear and appear between frames.

However, a frame interpolation technology called DAIN (Depth-Aware Video Frame Interpolation) that takes depth information into account is now available. We can expect further evolution in the future.

Summary

In this article, I have discussed the AI technologies that work behind the scenes to improve quality. I will conclude with a quote and a video of an actual example of the "idea of non-fluctuation" demonstrated in the paper discussed here.

The dynamic paper crafts were created using the original software "Paper Dragon," and after being crafted, assembled, and photographed, the frames were interpolated in a digital environment. The author is convinced that new expressive techniques will be created in the future through the collaboration of digital-analog-digital.

Digital Paper Crafts for "Japanese-Western Blend" - Interpolated Art by AI

Reference Explanatory Documents

[1] Takahiro Yonemura and Kohei Furukawa, Paper Craft Made with "Paper Dragon" Software, NICOGRAPH 2012, pp. 115-118, 2012.

[2] Zhewei Huang, Tianyuan Zhang, Wen Heng, Boxin Shi, and Shuchang Zhou, Real-Time Intermediate Flow Estimation for Video Frame Interpolation, Proceedings of the European Conference on Computer Vision (ECCV), 2022.
