What Does Your Conversation Partner See In You? New Technology For Gaze Estimation In Video Chat And In Person!

3 main points

✔️ Proposes the "ICE Framework" for estimating "interpersonal gaze" from recorded conversation video, without prior information about the positional relationship between the speakers and the camera

✔️ Works for both video chat and face-to-face conversations

✔️ Applies the ICE Framework to two studies, "The Relationship Between Deception and Gaze" and "The Relationship Between Communicative Ability and Eye Contact," to test its usefulness

Are you really looking at me? A Feature-Extraction Framework for Estimating Interpersonal Eye Gaze from Conventional Video

written by Minh Tran, Taylan Sen, Kurtis Haut, Mohammad Rafayet Ali, Mohammed Ehsan Hoque

(Submitted on 21 Jun 2019 (v1), last revised 17 Jan 2020 (this version, v2))

Comments: Accepted at IEEE Transactions on Affective Computing

Subjects: Computer Vision and Pattern Recognition (cs.CV); Human-Computer Interaction (cs.HC)

Introduction

There have been many studies on eye-gaze estimation. In this article, we introduce a paper that proposes a method for estimating "interpersonal gaze" during a conversation.

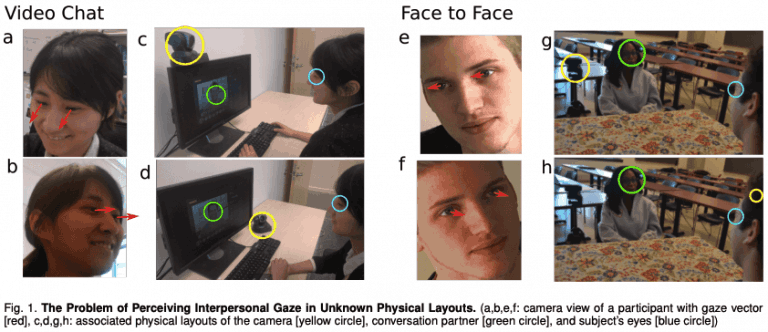

For example, in the figure with panels a to h, a to d show a video chat and e to h show a face-to-face conversation. In c, d, g, and h, the blue circles mark the person whose gaze is being estimated, the green circles mark the conversation partner, and the yellow circles mark the cameras. Panels a, b, e, and f show the face of the blue-circled person as captured by the yellow-circled camera.

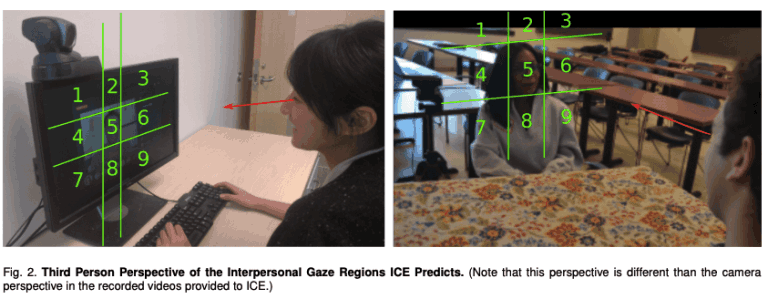

As shown in the figure, the method estimates where on the conversation partner the target person is looking at a given moment (in this study, one of the areas numbered 1 to 9).
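To make the idea of a numbered gaze area concrete, here is a minimal sketch of how a gaze point could be mapped to one of nine areas. This is not the ICE Framework itself: the 3x3 grid layout, the row-major numbering, the bounding box around the partner, and the function name `gaze_region` are all assumptions made for illustration, since this article does not specify how the areas are defined.

```python
def gaze_region(x, y, box, rows=3, cols=3):
    """Map a gaze point to a 1-based grid cell, or None if it falls outside.

    Assumption: `box` = (left, top, width, height) is a hypothetical rectangle
    around the conversation partner, split into a rows x cols grid that is
    numbered row by row from the top-left cell.
    """
    left, top, width, height = box
    inside = left <= x < left + width and top <= y < top + height
    if not inside:
        return None
    # Scale the offset into grid units; clamp to guard against float rounding.
    col = min(cols - 1, int((x - left) * cols / width))
    row = min(rows - 1, int((y - top) * rows / height))
    return row * cols + col + 1


if __name__ == "__main__":
    partner_box = (0, 0, 90, 90)  # hypothetical pixel coordinates
    print(gaze_region(50, 50, partner_box))
```

Under these assumptions, a gaze point in the middle of the box falls into the central cell, and a point outside the box yields `None`, meaning the person is not looking at the partner.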

Existing methods require prior information about the physical layout, such as the position of the person's eyes, the position of the camera, and the positional relationship between the person and the conversation partner, so they cannot obtain interpersonal gaze directly from video. Moreover, obtaining and calibrating this prior information every time an estimation is performed is very labor-intensive.

In addition, video chat is increasingly used alongside face-to-face conversation, but the performance of existing methods for estimating interpersonal gaze in these two settings is either inadequate or unknown, which makes them difficult to adapt to different situations.
