NAS Surpassing EfficientNet! A New Method To Progressively Narrow The Search Space Of Model Architecture: "Designing Network Design Spaces"

3 main points

✔️ A method called "Designing Network Design Spaces" was developed to improve on traditional NAS by gradually narrowing the search space of model architectures.

✔️ RegNet, the model family obtained with "Designing Network Design Spaces", performs as well as EfficientNet under a simple regularization scheme and has faster inference (up to 5 times faster on GPUs).

✔️ The authors found that depth saturates at about 60 layers and that the inverted bottleneck and depthwise convolution worsen performance.

Designing Network Design Spaces

written by Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, Piotr Dollár

(Submitted on 30 Mar 2020)

Comments: Accepted at CVPR 2020

Subjects: Computer Vision and Pattern Recognition(cs.CV); Machine Learning(cs.LG)

Code

Introduction

Improving the performance of image recognition

In recent years, image recognition has developed rapidly. Well-known leading models include:

- AlexNet (2012), which showed the effectiveness of convolutional layers combined with ReLU and Dropout

- VGG (2015), which used only 3x3 convolutional layers and improved performance by increasing the depth (16 to 19 layers)

- ResNet (2016), which mitigates the vanishing gradient problem through residual connections

These studies have led to significant improvements in accuracy. They also showed that factors such as convolutional layers, input image size, depth, residual connections, and attention mechanisms are effective in improving model performance.

However, this line of development has a major weakness: as the search space of model architectures grows more complex, it becomes increasingly difficult to optimize within it. In response, Neural Architecture Search (NAS) was proposed. NAS automates tuning by using reinforcement learning to find the single best model in a search space set by the developer. NAS has produced SpineNet, which proposed a task-independent meta-architecture (see "SpineNet, an AI-discovered backbone model with outstanding detection accuracy"), and EfficientNet, which became SOTA in image classification in 2019 by scaling the model in terms of depth, width, and resolution.

However, even though NAS is an excellent method, it has drawbacks. One is that NAS yields a single model, and that model depends on the hardware on which the training was done. Another is that the principles behind the resulting model architecture are difficult to understand.

Proposal methodology

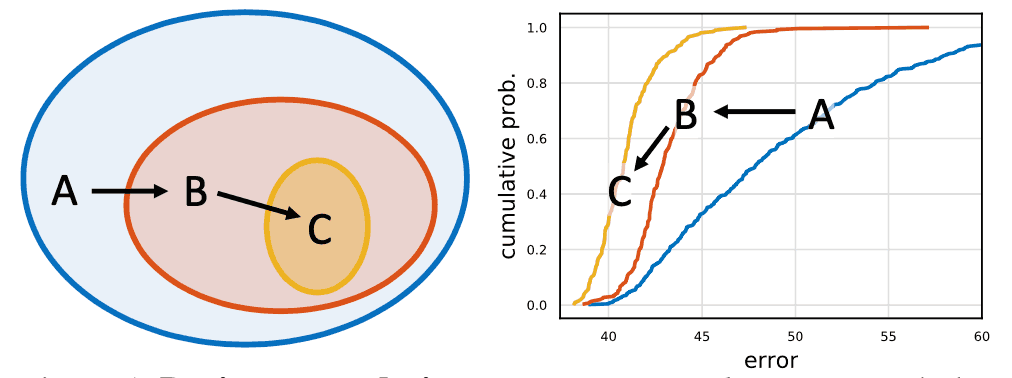

To solve these problems, the authors propose "Designing Network Design Spaces", which narrows down the candidate space of model architectures. The final result is not a single model but a set of models (a search space of model structures, called a network design space). This design space is narrowed gradually, with each narrowed version compared against the design space it was derived from.

To compare network design spaces, the authors use a method proposed in 2019 by Ilija Radosavovic, the first author of this paper. The traditional approach tunes each model, trains it, and compares the minimum errors (point estimate). The extended approach instead randomly samples models from each design space being compared and compares the distributions of their results (distribution estimate). Intuitively, a distribution over models is more robust and informative than a single model.
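The difference between a point estimate and a distribution estimate can be illustrated with a minimal Python sketch. It assumes that the distribution is summarized by an empirical distribution function of the error (the fraction of sampled models whose error falls below a threshold). The two "design spaces" here are hypothetical: their errors are synthetic Gaussian draws, not results from the paper, and the function names are chosen only for this illustration.

```python
import random


def error_edf(errors, threshold):
    """Fraction of sampled models whose error is below `threshold`."""
    return sum(e < threshold for e in errors) / len(errors)


def sample_errors(mean, spread, n, seed):
    """Stand-in for 'sample n models from a design space and train them'.

    Hypothetical: errors are synthetic Gaussian draws, not real results.
    """
    rng = random.Random(seed)
    return [rng.gauss(mean, spread) for _ in range(n)]


# Two hypothetical design spaces, A and B (B is better on average).
space_a = sample_errors(mean=45.0, spread=4.0, n=500, seed=0)
space_b = sample_errors(mean=42.0, spread=4.0, n=500, seed=1)

# Point estimate: compare only the single best model of each space.
point_a, point_b = min(space_a), min(space_b)

# Distribution estimate: compare the whole error distribution.
thresholds = [35, 40, 45, 50, 55]
edf_a = [error_edf(space_a, t) for t in thresholds]
edf_b = [error_edf(space_b, t) for t in thresholds]

print(f"best error  A={point_a:.1f}  B={point_b:.1f}")
for t, fa, fb in zip(thresholds, edf_a, edf_b):
    print(f"error < {t}: A={fa:.2f}  B={fb:.2f}")
```

A design space whose curve lies higher at each threshold contains a larger share of good models, which is information that the single best error cannot convey.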

This distribution-estimate comparison is not performed just once; it is repeated and averaged over multiple runs (empirical bootstrap) to make it more reliable.
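As a rough illustration of the empirical bootstrap, the following Python sketch resamples a pool of model errors with replacement, recomputes a statistic on each resample, and reports the average together with a 95% percentile interval. This is the generic bootstrap procedure, not necessarily the exact protocol used by the authors. The error pool is synthetic, and the choice of the median as the statistic, the number of rounds, and the function name are assumptions made for this example.

```python
import random
import statistics


def bootstrap(errors, statistic, n_rounds=1000, seed=0):
    """Generic empirical bootstrap of `statistic` over `errors`.

    Returns the mean of the resampled statistics and a 95% percentile
    interval. `n_rounds` and `seed` are illustrative defaults.
    """
    rng = random.Random(seed)
    n = len(errors)
    # Resample with replacement and recompute the statistic each round.
    estimates = sorted(
        statistic(rng.choices(errors, k=n)) for _ in range(n_rounds)
    )
    low = estimates[int(0.025 * n_rounds)]
    high = estimates[int(0.975 * n_rounds) - 1]
    return statistics.mean(estimates), (low, high)


# Hypothetical pool of errors from 200 sampled models (synthetic data).
pool_rng = random.Random(42)
pool = [pool_rng.gauss(45.0, 4.0) for _ in range(200)]

mean_est, (low, high) = bootstrap(pool, statistics.median)
print(f"median error ~ {mean_est:.2f} (95% interval {low:.2f} to {high:.2f})")
```

A narrow interval suggests that the comparison would not change much if a different set of models had been sampled, which is the sense in which repetition makes the estimate more reliable.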
