Do CNNs Really Like Textures?

3 main points

✔️ Findings on texture bias

✔️ Basically, CNNs have a texture bias.

✔️ It also turns out that CNNs do not lack shape information.

The Origins and Prevalence of Texture Bias in Convolutional Neural Networks

written by Katherine L. Hermann, Ting Chen, Simon Kornblith

(Submitted on 20 Nov 2019 (v1), last revised 29 Jun 2020 (this version, v2))

Comments: Published by arXiv

Subjects: Computer Vision and Pattern Recognition (cs.CV); Machine Learning (cs.LG); Neurons and Cognition (q-bio.NC)

Introduction

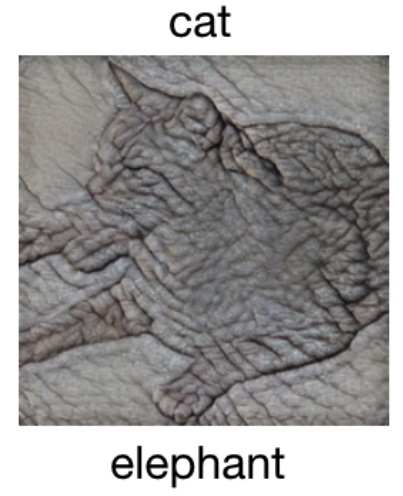

Convolutional Neural Networks (CNNs) have been at the forefront of performance in fields such as image classification and object detection. Their performance is so high that, in some research settings, they have even outperformed humans. Interestingly, even though CNNs were originally designed to mimic human visual processing, they differ from human vision in a number of ways. A typical example is that humans prefer shape information in classification problems, whereas CNNs prefer texture information. The image shows a cat's shape combined with an elephant's texture. Humans rely on the shape information and judge it to be a cat, while a CNN relies on the texture information and judges it to be an elephant.

In this article, the term texture bias refers to a preference for texture over shape, and shape bias refers to a preference for shape over texture.
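
To make these two terms concrete, here is a minimal sketch of how such a preference could be measured on cue-conflict images like the cat-shaped, elephant-textured example. It assumes the commonly used definition in which shape bias is the fraction of shape-consistent decisions among all decisions that match either the shape or the texture label. The function name `shape_bias` and the toy labels are hypothetical and are not taken from the article.

```python
def shape_bias(predictions, shape_labels, texture_labels):
    """Fraction of shape-consistent decisions among decisions that match
    either the shape label or the texture label of a cue-conflict image.

    Assumed definition for illustration; returns None when no prediction
    matches either cue.
    """
    shape_hits = 0
    texture_hits = 0
    for pred, shape, texture in zip(predictions, shape_labels, texture_labels):
        if shape == texture:
            continue  # not a cue-conflict image, so it says nothing about bias
        if pred == shape:
            shape_hits += 1
        elif pred == texture:
            texture_hits += 1
        # predictions matching neither cue are ignored
    total = shape_hits + texture_hits
    if total == 0:
        return None
    return shape_hits / total


# Toy example: four cat-shaped images rendered with elephant texture.
preds = ["elephant", "cat", "elephant", "dog"]
shapes = ["cat"] * 4
textures = ["elephant"] * 4

bias = shape_bias(preds, shapes, textures)
print("shape bias:", bias)
print("texture bias:", None if bias is None else 1 - bias)
```

In this toy example, one of the three predictions that match a cue follows the shape, so the model would be described as texture-biased. The prediction "dog" matches neither cue and is left out of the ratio.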

It is also said that texture bias contributes to the adversarial example problem. The argument is that CNNs are susceptible to small perturbations because they rely on texture information. The preference for texture can also be read as a sign that the inductive bias of CNNs (the assumptions a machine learning method uses in order to generalize) is out of sync with real-world conditions. This is not surprising, because CNNs prefer texture even on tasks where shape information is important.
