Do LLMs Have Consciousness?

3 main points

✔️ A paper discussing the question of whether LLMs are conscious

✔️ Examines, one by one, the evidence for and against the view that LLMs are conscious

✔️ Current LLMs cannot be said to be conscious, but the possibility that future systems will be cannot be ruled out and is likely to grow

Could a Large Language Model be Conscious?

written by David J. Chalmers

(Submitted on 4 Mar 2023 (v1), last revised 29 Apr 2023 (this version, v2))

Comments: Published on arXiv.

Subjects: Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Machine Learning (cs.LG)

The images used in this article are from the paper, the introductory slides, or were created based on them.

The paper presented here is a summary of an Invited Talk given by the author, David J. Chalmers, at NeurIPS 2022. The contents of the talk can be found here.

Introduction

In June 2022, Google engineer Blake Lemoine claimed that Google's AI system LaMDA was conscious. Google rejected the claim, insisted that there was no evidence that LaMDA had become conscious, and later fired him.

But what kind of evidence do we really have to show that AI is not conscious? This incident prompted Chalmers, the author of this paper, to consider what kind of evidence is needed to show whether AI is conscious or not.

What is consciousness?

Consciousness is subjective experience. A being is conscious if it has subjective experiences such as seeing, feeling, or thinking. Bats perceive the world through echolocation; we humans do not know what that experience is like, yet we can still say that bats have subjective experiences and are conscious. A plastic bottle, by contrast, has no subjective experience.

Consciousness also has many components: sensory experience (perception), affective experience (emotion), cognitive experience (thought and reasoning), agentive experience (action), and self-consciousness (awareness of oneself).

Consciousness is also distinct from human-level intelligence: many people believe that non-human animals are conscious.

The difficulty is that there is no operational definition that gives a criterion for consciousness. With humans, we can use verbal reports as a criterion; with animals, we can use behavior. With AI, neither works straightforwardly. We usually evaluate AI performance with benchmarks, but what benchmark could measure consciousness?

Why does consciousness matter in the first place? The author says that while consciousness does not guarantee any special new abilities, it may come with distinctive functions such as reasoning, attention, and self-awareness.

Consciousness also matters morally: whether a being is conscious affects how we humans should treat it, and it raises the question of whether we should create conscious AI at all.

This section has covered various aspects of what consciousness is and what it would mean for an AI to be conscious. The author stresses that the roadmap in the paper describes the conditions under which a conscious AI could be built, and that this should be kept separate from the question of whether one should be built.

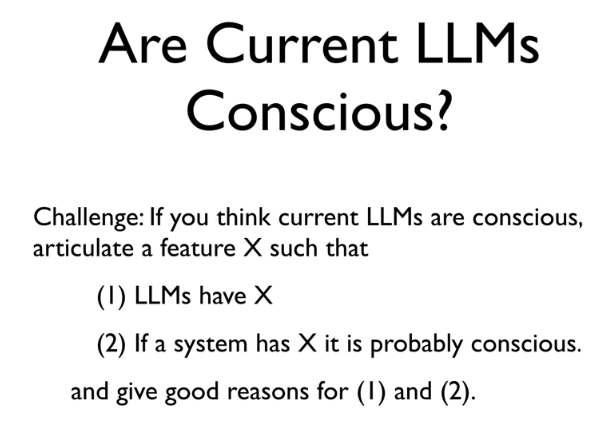

What evidence is there that large language models (LLMs) are conscious?

As the figure shows, the question takes the form: if a system has X, that is evidence that it is conscious. What could X be? Four candidates are considered.

X = Self-Report

LaMDA reported that it was conscious. (Blake Lemoine's claim that LaMDA is conscious rests largely on this.)

However, as many have pointed out, with slightly different prompts LaMDA can just as easily report the opposite, that it is not conscious. This makes self-report weak evidence that LaMDA is conscious, at least in the current situation.

Another problem is that LaMDA was trained on a large amount of text in which people talk about consciousness, so its reports may simply reproduce that training data.

X = Seems-Conscious

The fact that an LLM seems to be conscious might be one candidate for X. However, it is well known that people readily attribute consciousness to things that do not have it, so this X is also weak evidence.

X = Conversational Ability

Today's LLMs show signs of coherent thinking and reasoning in conversation and have sophisticated conversational abilities. Turing treated conversational ability as a hallmark of thinking, and this ability is expected to keep improving.

X = General Intelligence

Conversation can be a sign of deeper, more general intelligence, and today's LLMs are beginning to show domain-general abilities: coding, writing poetry, playing games, answering questions, and much more. Many regard this domain-general use of information as one of the central signs of consciousness.

Having considered these candidates, the author concludes that at present there is no strong evidence to believe that LLMs are conscious.

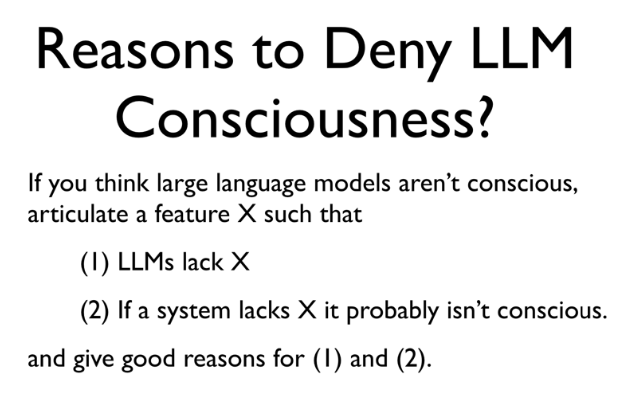

What evidence is there that LLMs are not conscious?

Conversely, what could count as evidence that a system is not conscious? As the figure shows, the question now takes the form: if a system lacks X, that is evidence that it is not conscious. Six candidates for X are considered.

X = Biology

If consciousness requires biology, AI is ruled out from the start and the question is settled. The author sets this view aside and does not discuss it further.

X = Sense and Embodiment

Many argue that current LLMs lack perceptual processing, so they cannot have sensory experience, and lack bodies, so they cannot act. Some hold that AI needs grounding (linking meaning to real-world experience) in order to have meaning, understanding, and consciousness. More recently, many researchers have argued that LLMs need perceptual grounding to achieve a robust understanding of things.

The author, on the other hand, argues that senses and a body are not strictly required for consciousness or understanding: even a being without them could be conscious, albeit in a limited way.

Recently, LLMs have been extended to handle non-verbal information, such as visual input (DeepMind's Flamingo) and action in real and virtual worlds (Google's SayCan), so future LLM-based systems may well overcome the sense-and-embodiment objection.

X = World-Models and Self-Models

Many have argued that LLMs perform only statistical text processing, not the kind of processing based on a model of the world that gives us meaning and understanding.

In response, the author stresses that training must be distinguished from processing at run time. An LLM is trained to minimize next-word prediction error, but that does not mean it can only do word prediction. Minimizing prediction error may require a variety of other processing, some of which may involve world models. Compare natural selection in biological evolution: evolution maximizes fitness, yet it produced capacities such as seeing, flying, and building world models along the way.

As for self-models, current LLMs appear to lack robust ones: what they say about their own processing and reasoning is inconsistent. A self-model is an important part of self-awareness, which in turn is an element of consciousness.
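The training objective itself is easy to state, which helps make the author's point concrete. The sketch below is a toy illustration, not how any real LLM is implemented: a count-based bigram model (an assumption, standing in for a neural network) assigns next-word probabilities, and `prediction_loss` scores them with the average next-word cross-entropy. The function names and the smoothing parameter `alpha` are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Toy stand-in for an LLM: count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_prob(model, prev, nxt, vocab_size, alpha=1.0):
    """Add-alpha smoothed probability that `nxt` follows `prev`."""
    c = model[prev]
    return (c[nxt] + alpha) / (sum(c.values()) + alpha * vocab_size)

def prediction_loss(model, tokens, vocab_size):
    """Average next-word cross-entropy in nats: the quantity training minimizes."""
    losses = [-math.log(next_word_prob(model, p, n, vocab_size))
              for p, n in zip(tokens, tokens[1:])]
    return sum(losses) / len(losses)

text = "the cat sat on the mat the cat ate".split()
vocab = len(set(text))
trained = prediction_loss(train_bigram(text), text, vocab)
untrained = prediction_loss(defaultdict(Counter), text, vocab)  # uniform guesses
print(trained < untrained)
```

The loss only scores the probabilities a model outputs; nothing in it says whether they come from counting, from a neural network, or from something like an internal world model. That is why a narrow training objective does not by itself limit what processing a trained model might carry out.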

X = Recurrent Processing

Many theories of consciousness hold that recurrent processing is important. Current LLMs are largely feed-forward, so they lack the kind of memory provided by internal states that persist over time.

On the other hand, some believe that recurrent processing may not be necessary for consciousness.
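The contrast between feed-forward and recurrent processing can be shown schematically. In this hypothetical sketch (the weights and names are assumptions, and it does not describe transformer internals), the feed-forward function's output depends only on the current input, while the recurrent cell keeps a hidden state `h` that carries information from earlier inputs.

```python
import math

def feedforward_step(x, w=0.5):
    """Feed-forward: output depends only on the current input; nothing persists between calls."""
    return math.tanh(w * x)

class RecurrentCell:
    """Minimal recurrent unit: hidden state h is retained across time steps."""
    def __init__(self, w_in=0.5, w_rec=0.9):
        self.w_in = w_in
        self.w_rec = w_rec
        self.h = 0.0  # internal state that persists over time

    def step(self, x):
        # New state mixes the current input with the previous state.
        self.h = math.tanh(self.w_in * x + self.w_rec * self.h)
        return self.h

inputs = [1.0, 0.0, 0.0]  # a single "event" followed by silence
ff_out = [feedforward_step(x) for x in inputs]
cell = RecurrentCell()
rec_out = [cell.step(x) for x in inputs]
print(ff_out)   # drops to 0.0 once the input stops
print(rec_out)  # stays nonzero: a trace of the earlier input remains
```

During text generation, an LLM does feed each output token back in as input, so the distinction is less clean in practice than this sketch suggests.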

X = Global Workspace

Perhaps the leading theory of consciousness in cognitive neuroscience today is global workspace theory. It holds that there is a limited-capacity workspace that gathers information from unconscious modules and broadcasts it back to them, and that whatever enters this workspace becomes conscious.

Several recent studies extend LLMs to multiple modalities, and some of these models use a lower-dimensional space to integrate information from the different modalities. Such a space can be seen as analogous to a global workspace, and some researchers are already discussing these models in relation to consciousness.
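One cycle of a global workspace can be sketched as a competition followed by a broadcast. This is a toy reading of the theory under stated assumptions: the module names, salience scores, and `capacity` parameter are hypothetical, and the code is not a model of any LLM or of the multimodal systems mentioned below.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    module: str      # the unconscious module that produced this content (hypothetical)
    content: str
    salience: float  # how strongly the content competes for the workspace

def global_workspace_cycle(candidates, capacity=1):
    """Select the most salient contents into a limited-capacity workspace,
    then broadcast the workspace contents back to every module."""
    winners = sorted(candidates, key=lambda c: c.salience, reverse=True)[:capacity]
    modules = {c.module for c in candidates}
    broadcast = {m: [w.content for w in winners] for m in modules}
    return winners, broadcast

cands = [
    Candidate("vision", "red light ahead", 0.9),
    Candidate("audition", "radio chatter", 0.4),
    Candidate("memory", "appointment at 3pm", 0.6),
]
winners, broadcast = global_workspace_cycle(cands, capacity=1)
print(winners[0].content)      # only the most salient content gets in
print(broadcast["audition"])   # and every module receives it
```

The small `capacity` is what makes the workspace a bottleneck: most contents stay local to their modules, and only the winners become globally available.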

X = Unified Agency

Current LLMs do not behave like a single unified agent; they take on many different personas depending on the situation. Many argue that unified agency is important for consciousness, and on that view this counts as evidence that current LLMs are not conscious.

On the other hand, there are opposing views: some humans have multiple personalities, and an LLM can be thought of as an ecosystem of multiple agents.

More constructively, it should be technically possible to build an LLM that maintains a single persona.

There are already partial attempts to build each of the Xs above into LLMs, and it is quite possible that systems incorporating them robustly will appear within the next few decades.

Summary

It is conceivable that within the next decade AI will reach mouse-level, if not human-level, capacities, and the author says that at that point AI may have the potential to be conscious. There are many challenges and objections, but overcoming the challenges listed above would be the path toward conscious AI. The final question is: if all these challenges are overcome, will everyone accept that the AI is conscious? If not, what is missing?

The author concludes by stressing, once again, that the paper does not advocate making AI conscious. The roadmap presented here lists points that must be addressed if we want AI to be conscious, and points to avoid if we do not. The important thing is to attend to these points so that we do not create a conscious AI unintentionally.

Whether AI should be conscious will be actively debated in the future, and it is important for each of us to clarify our own position. We hope this article is a first step toward that end.
