Detecting Skin Cancer From Mobile Devices! A Proposal For An Automated Skin Cancer Classification Model Using A Large Dataset And A CNN!

3 main points

✔️ Skin cancer has a significantly lower survival rate in the late stages than in the early stages, so there is a particular need for early detection that can be done by the patients themselves.

✔️ In this study, we propose a deep learning model for automatic skin cancer classification based on a large dataset and a CNN.

✔️ The reported results show that the proposed model achieves classification performance equal to or better than that of dermatologists.

Dermatologist-level classification of skin cancer with deep neural networks

written by Andre Esteva, Brett Kuprel, Roberto A. Novoa, Justin Ko, Susan M. Swetter, Helen M. Blau, Sebastian Thrun

(Submitted on 25 Jan 2017)

Comments: Nature

The images used in this article are from the paper, the introductory slides, or were created based on them.

background

Is it possible to develop a model to automatically identify skin cancers with diverse symptoms?

In this study, we aim to construct an image classification model for the automatic classification of skin cancer using a large amount of image data and a deep convolutional neural network (CNN).

Skin cancer is one of the most common malignancies. It is diagnosed primarily by visual inspection, beginning with a clinical examination that may be followed by dermoscopic analysis, biopsy, and histopathological examination. However, the appearance of skin lesions varies widely, which makes automated image-based classification difficult. Against this background, a CNN was trained by transfer learning on a dataset of 129,450 clinical images covering 2,032 different diseases, and its classification performance was tested against 21 certified dermatologists on biopsy-proven images in two use cases: keratinocyte carcinoma versus benign seborrheic keratosis, and malignant melanoma versus benign nevi. The first case represents the identification of common cancers, and the second the identification of the more serious skin cancer. The model achieved classification accuracy comparable to that of the human experts. If it is deployed on mobile devices, it is expected to contribute significantly to improving the prognosis of skin cancer in the future.

What is skin cancer?

First, an overview of skin cancer is given.

Skin cancer refers to all cancers of the skin and is characterized by a pathological progression that is slow at first but gradually accelerates. The disease is thought to be caused mainly by exposure to ultraviolet light, and genetic factors may also play a role. Melanoma, one type of skin cancer, accounts for 75% of skin cancer-related deaths in the United States. The estimated 5-year survival rate for melanoma (the probability of surviving five years after diagnosis) is as low as 14% when it is detected in the late stages, which makes early detection critical.

research purpose

In this study, we aim to construct a model, trained on a skin cancer dataset, that automatically classifies images of skin lesions.

Early detection of skin cancer is important because the survival rate is significantly lower when the disease is detected in the late stages than in the early stages. Tools are therefore needed that enable patients to check for skin cancer themselves, outside of a medical examination. In previous studies on this kind of image classification, however, variation in image conditions (zoom, angle, illumination, etc.) has been a challenge, making it difficult to perform the classification task with high accuracy. In this study, we aim to build a model for the early detection of cancer from skin images by combining a large dataset with a simple image classification model. Specifically, we construct a CNN-based image classification model for carcinoma classification, melanoma classification, and melanoma classification using dermoscopy (an inspection tool for observing skin conditions). The model is trained by transfer learning and draws on about 1.41 million pre-training and training images in total, which helps it cope with the variation between images. Based on this model, we develop a disease partitioning algorithm that maps each disease to a training class, with the aim of constructing a system that can automatically detect skin cancer.

technique

In this section, we describe the proposed method in this study.

The construction of an automated skin cancer classification model in this study is characterized by two features: training on a large dataset labeled by medical experts; and model construction by transfer learning.

data-set

In this section, we describe the dataset used by the proposed method.

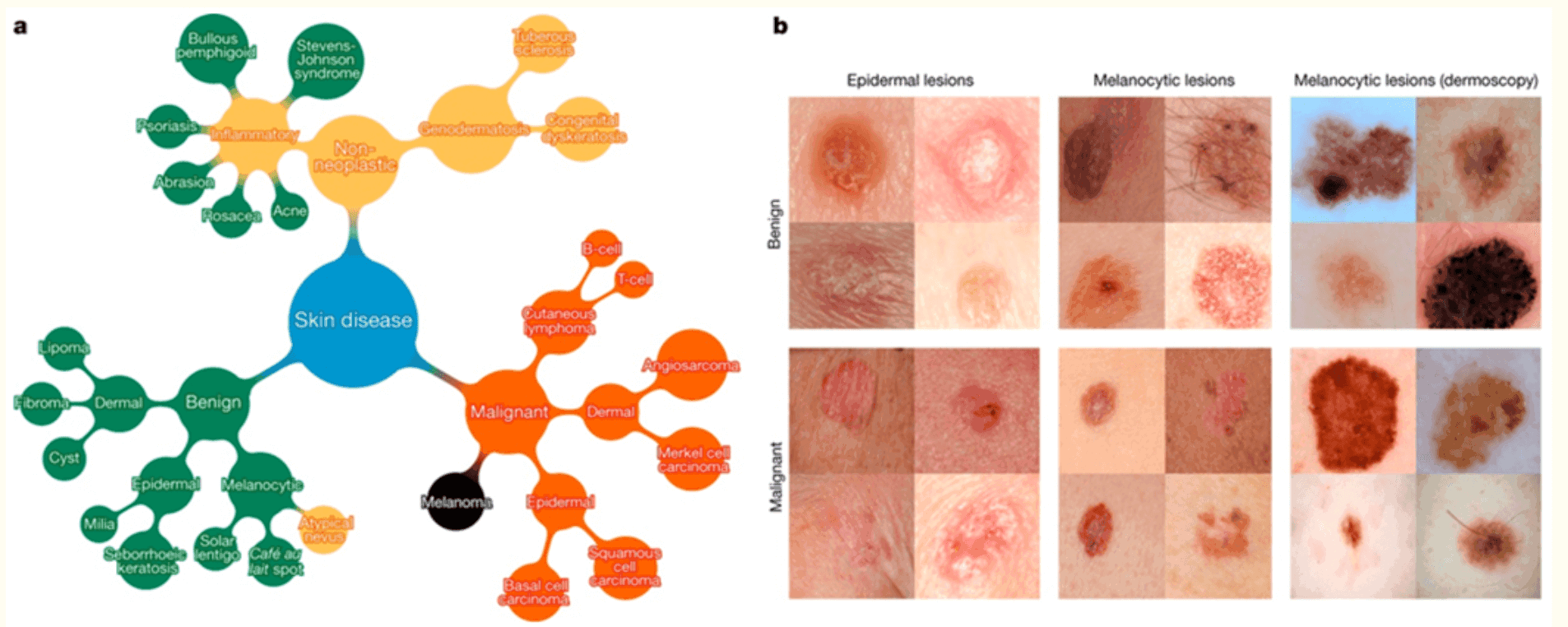

In this study, we use about 1.41 million pre-training and training images, which helps account for image variability (e.g., zoom and brightness). The skin lesion dataset consists of dermatologist-labeled images organized in a tree-structured taxonomy of 2,032 diseases, in which the individual diseases form the leaf nodes (see the figure above).

Among the target classes, melanocytic lesions include the most serious skin cancer, malignant melanoma, as well as benign nevi. Epidermal lesions include malignant basal cell carcinomas, squamous cell carcinomas, intraepithelial carcinomas, premalignant actinic keratoses, and benign seborrheic keratoses. These diseases form a subset of the taxonomy, which was organized clinically and visually by medical experts.
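To make the tree structure concrete, the following minimal sketch models a taxonomy whose leaves are individual diseases. The `Node` class, the intermediate group names, and the tiny example tree are illustrative assumptions, not the actual 2,032-disease taxonomy; only the three first-level groups and the disease names mentioned in this article are taken from the text.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One node of a disease taxonomy; nodes without children are diseases."""
    name: str
    children: list[Node] = field(default_factory=list)

    def leaves(self) -> list[str]:
        """Return the names of all individual diseases below this node."""
        if not self.children:
            return [self.name]
        found = []
        for child in self.children:
            found.extend(child.leaves())
        return found


# Toy tree (assumption): a handful of diseases arranged under the three
# first-level groups. The non-neoplastic leaf is a placeholder name.
taxonomy = Node("skin disease", [
    Node("benign", [
        Node("benign melanocytic", [Node("nevus")]),
        Node("benign epidermal", [Node("seborrheic keratosis")]),
    ]),
    Node("malignant", [
        Node("malignant melanocytic", [Node("melanoma")]),
        Node("malignant epidermal", [
            Node("basal cell carcinoma"),
            Node("squamous cell carcinoma"),
        ]),
    ]),
    Node("non-neoplastic", [Node("placeholder condition")]),
])

malignant_diseases = taxonomy.children[1].leaves()
```

Here `malignant_diseases` collects every leaf under the malignant branch, which is the kind of lookup needed when fine-grained diseases are grouped into coarser classes.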

proposed model

In this section, we describe the model for image classification.

The model in this study is built by transfer learning based on Inception v3. We use the GoogleNet Inception v3 CNN architecture, pre-trained on approximately 1.28 million images (1,000 object categories) from the 2014 ImageNet Large Scale Visual Recognition Challenge, and then train it on the skin lesion dataset (see figure).

The CNN was trained with 757 disease classes and the dataset was partitioned into 127,463 training and validation images and 1,942 biopsy-labeled test images.

result

In this section, we describe the evaluation. We use 9-fold cross-validation to verify the image classification accuracy and the effectiveness of the proposed model.
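As a rough illustration of how 9-fold cross-validation divides a dataset, the sketch below generates the index splits. The article does not say how the folds were drawn, so the random shuffle, the seed, and the function name `k_fold_indices` are assumptions made for this example.

```python
import random


def k_fold_indices(n_samples, k=9, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # A stride of k yields k folds whose sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, val_idx in enumerate(folds):
        # Every fold except the i-th one is used for training.
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx


splits = list(k_fold_indices(20, k=9))
```

Each sample lands in the validation set exactly once, so the nine validation scores together cover the whole dataset.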

Accuracy of skin cancer classification

Here we describe an evaluation of the accuracy of skin cancer classification.

First, we validated the model on a three-class disease partition made up of the first-level nodes of the taxonomy, that is, the nodes directly connected to the root of the tree diagram: benign lesions, malignant lesions, and non-neoplastic lesions. The proposed model achieved an overall accuracy of 72.1% (the average of the accuracies for the individual classes), whereas the two dermatologists used for comparison achieved 65.6% and 66.0%. We then validated the model on a nine-class disease partition made up of the second-level nodes, the disease groups connected to the first-level nodes. Here the proposed model achieved 55.4% accuracy, while the two dermatologists achieved 53.3% and 55.0%. These results also confirmed that a CNN trained on the finer disease classes achieved higher accuracy than a CNN trained directly on the 3 or 9 classes. The images in the validation set were labeled by dermatologists but not confirmed by biopsy; even so, the results suggest that the CNN learns relevant information about these diseases.
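The two ideas in this evaluation, scoring a coarse class with a model trained on finer classes and averaging per-class accuracies, can be sketched as follows. Summing the probabilities of the fine classes that belong to each coarse class is one common way to do this; whether it matches the authors' exact procedure is an assumption here, and all names, mappings, and numbers below are made up for illustration.

```python
from collections import defaultdict


def coarse_probabilities(fine_probs, fine_to_coarse):
    """Sum fine-class probabilities into their parent coarse classes."""
    coarse = defaultdict(float)
    for fine_class, p in fine_probs.items():
        coarse[fine_to_coarse[fine_class]] += p
    return dict(coarse)


def mean_per_class_accuracy(y_true, y_pred):
    """Average the accuracy computed separately for each true class."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        correct[truth] += int(truth == pred)
    return sum(correct[c] / total[c] for c in total) / len(total)


# Hypothetical model output over four fine classes for a single image.
fine_probs = {
    "melanoma": 0.5,
    "basal cell carcinoma": 0.25,
    "nevus": 0.125,
    "seborrheic keratosis": 0.125,
}
fine_to_coarse = {
    "melanoma": "malignant",
    "basal cell carcinoma": "malignant",
    "nevus": "benign",
    "seborrheic keratosis": "benign",
}
coarse = coarse_probabilities(fine_probs, fine_to_coarse)
prediction = max(coarse, key=coarse.get)

# Hypothetical labels: per-class averaging weights rare classes equally.
score = mean_per_class_accuracy(
    ["benign", "benign", "benign", "malignant"],
    ["benign", "benign", "malignant", "malignant"],
)
```

In the toy labels, plain accuracy would be 3 of 4, while the per-class average is the mean of 2/3 (benign) and 1 (malignant), which shows why the two metrics can differ when classes are imbalanced.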

Classification of epidermal and melanocytic lesions

Next, we discuss validation against the classification of epidermal and melanocytic lesions.

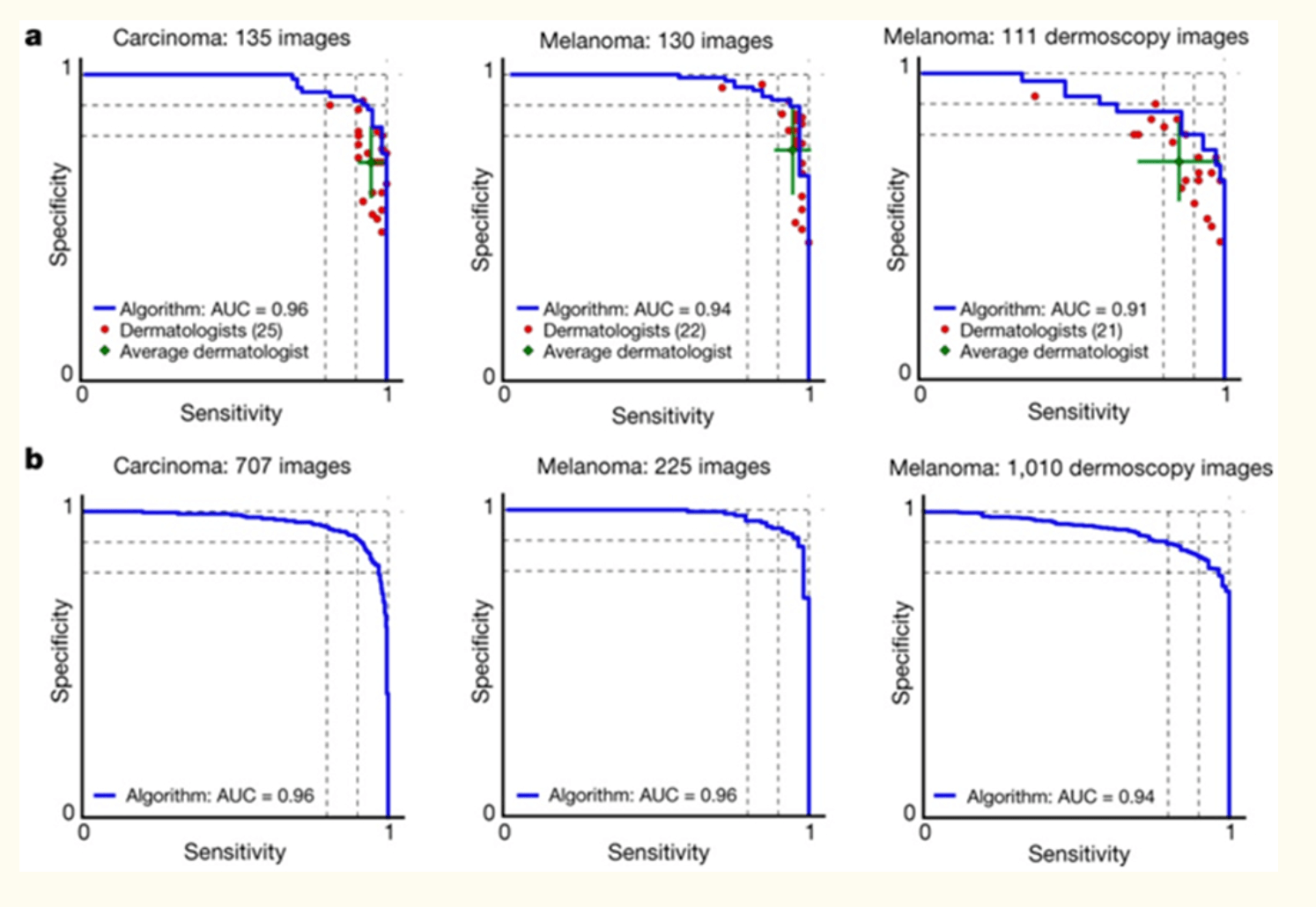

For the classification of epidermal and melanocytic lesions, the classification performance of the CNN was compared with that of 21 certified dermatologists (see the figure above). For each image, the dermatologists were asked the following question: would you biopsy/treat the lesion or reassure the patient? Each red dot indicates the sensitivity and specificity of a single dermatologist.

In this comparison, the CNN outperformed the dermatologists in sensitivity and specificity, and the area under the curve (AUC) was more than 91% in each case. In addition, when the sample dataset was compared with the full dataset, the AUC changed by less than 0.03, suggesting that the results remain reliable on the larger dataset.
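For readers unfamiliar with these metrics, the sketch below computes sensitivity, specificity, and AUC from binary labels and model scores. The labels and scores are invented for illustration and are not data from the paper; the code also assumes that both classes are present, otherwise a division by zero occurs.

```python
def sensitivity_specificity(labels, scores, threshold):
    """labels: 1 = malignant, 0 = benign; malignant if score >= threshold."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(labels, scores):
    """Area under sensitivity vs. (1 - specificity), by the trapezoidal rule."""
    # Sweep thresholds from strict to lenient so the curve runs left to right.
    thresholds = sorted(set(scores), reverse=True)
    points = [(0.0, 0.0)]  # (false positive rate, sensitivity)
    for t in thresholds:
        sens, spec = sensitivity_specificity(labels, scores, t)
        points.append((1.0 - spec, sens))
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Made-up example: three malignant and three benign lesions.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
sens, spec = sensitivity_specificity(labels, scores, threshold=0.5)
auc = roc_auc(labels, scores)
```

A single dermatologist corresponds to one (sensitivity, specificity) point, whereas the model traces a whole curve as the threshold varies, which is why the comparison asks whether the dermatologists' points fall below the model's curve.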

CNN features

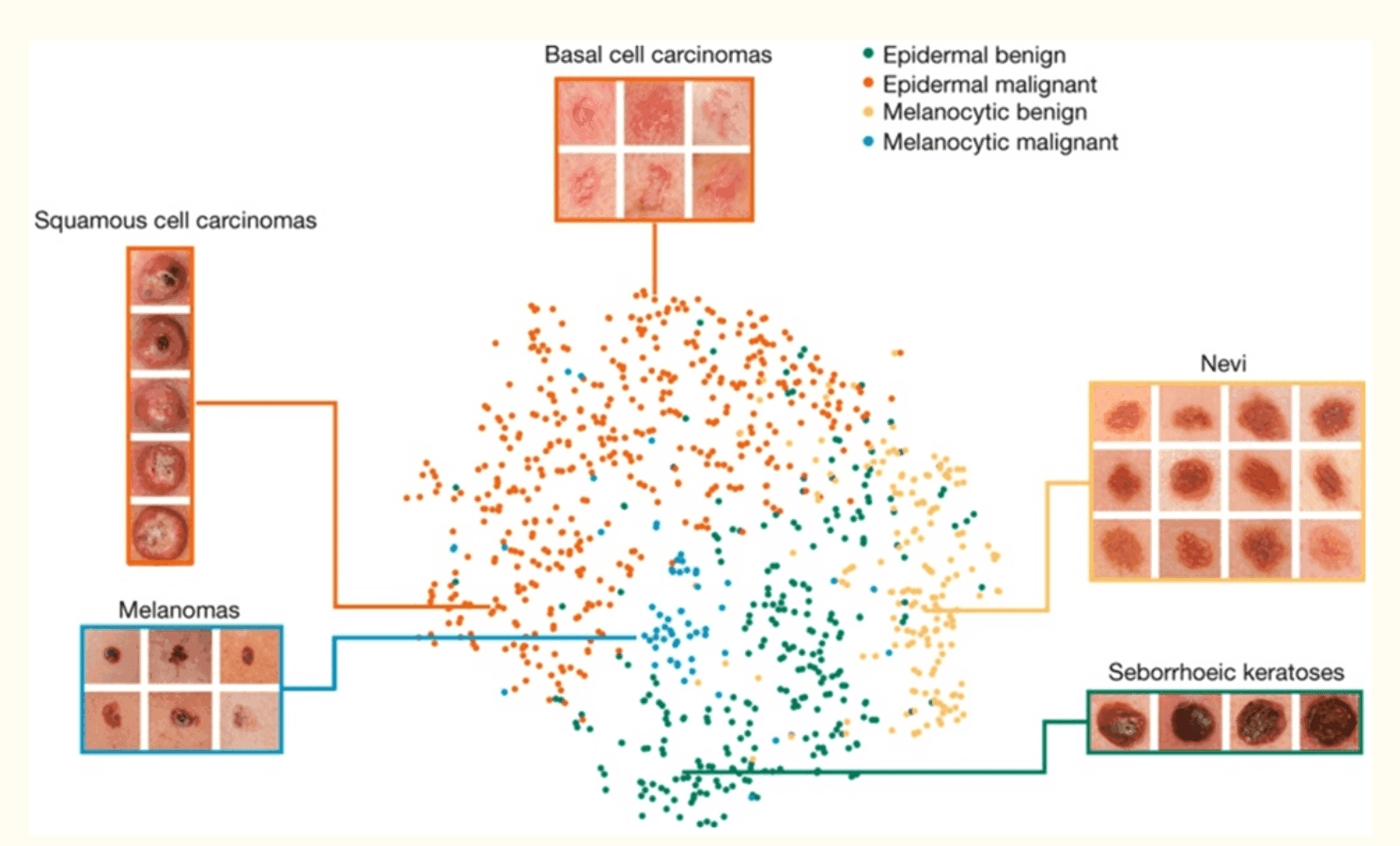

Here, we investigated the features learned by the CNN using t-SNE (t-distributed Stochastic Neighbor Embedding); see the figure above.

Each point represents a skin lesion image projected into two dimensions from the 2,048-dimensional output of the final hidden layer of the CNN. The results confirmed that points of the same clinical class form clusters. Melanomas were clustered at the center, in contrast to nevi, which were clustered on the left and right sides. Similarly, seborrheic keratoses formed clusters opposite their malignant counterparts.

discussion

In this study, we constructed an algorithm that automatically identifies skin cancer from skin images using an image classification model. Specifically, we used a CNN (Inception v3) trained for skin lesion classification to perform three diagnostic tasks: keratinocyte carcinoma classification, melanoma classification, and melanoma classification using dermoscopy. The validation results showed that the performance of the system was equal to or better than the classification accuracy of 21 dermatologists.

The features of our method are fast classification and high scalability. The model used in this study is a simple one consisting of a single CNN, and because it does not require complex processing, it is expected to be easy to introduce into clinical practice. In addition, since skin images are easily obtained with mobile cameras thanks to the widespread use of mobile devices, the present study is expected to have a significant clinical impact by expanding the scope of primary care practice and augmenting the clinical decision-making of dermatologists. The conventional medical examination is based mainly on visual or dermoscopic observation by a dermatologist and often depends on the experience of the specialist. Therefore, as the present model shows, even inexperienced physicians may be able to improve their diagnostic accuracy by using deep learning that classifies images of skin lesions as accurately as dermatologists, or more so. In addition, the ability of patients to identify skin cancer on their own will likely increase access to medical care at an early stage and significantly improve prognosis.

On the other hand, one remaining issue is implementation in the clinical field. Because this study aims to automatically detect skin cancer from skin images in a clinical setting, it is necessary to examine whether the method is actually useful there. In addition, although a simple model is used, processing speed may be a drawback given the current performance of mobile devices. Techniques used in edge computing could be introduced to address this problem.
