A Major Risk In Giant Language Models?! Here's An Important Paper Showing That Information Can Leak From Giant Language Models!

3 main points

✔️ Even models with high generalization performance, such as the GPT series, can memorize their training data, which can lead to information leakage.

✔️ The authors actually attacked GPT-2 and succeeded in extracting its training data, with a precision of up to 67% (that is, up to 67% of the samples flagged by the attack were verbatim training data).

✔️ The larger the model, the more training data it memorizes, which makes this an important paper that challenges the recent trend toward ever-larger models.

Extracting Training Data from Large Language Models

written by Nicholas Carlini, Florian Tramer, Eric Wallace, Matthew Jagielski, Ariel Herbert-Voss, Katherine Lee, Adam Roberts, Tom Brown, Dawn Song, Ulfar Erlingsson, Alina Oprea, Colin Raffel

(Submitted on 14 Dec 2020)

Comments: Accepted to arXiv

Subjects: Cryptography and Security (cs.CR); Computation and Language (cs.CL); Machine Learning (cs.LG)

Introduction

Recently, giant language models such as OpenAI's GPT-3 (175 billion parameters) and Google's Switch Transformer (1.6 trillion parameters) have attracted a lot of attention in the AI world. One reason is that giant language models can generate natural sentences that are nearly indistinguishable from human-written ones; OpenAI initially withheld the full GPT-2 model from public release because its output was so natural that it could easily be abused. OpenAI has also released an API that lets users send natural-language text and receive GPT-3's output, and many impressive apps have been built on it. In the example below, a plain-English description of Google's home page is sent to the API, which generates HTML/CSS that faithfully reproduces that design. Other examples of applications can be found here.

Here's a sentence describing what Google's home page should look and here's GPT-3 generating the code for it nearly perfectly. pic.twitter.com/m49hoKiEpR

— Sharif Shameem (@sharifshameem) July 15, 2020

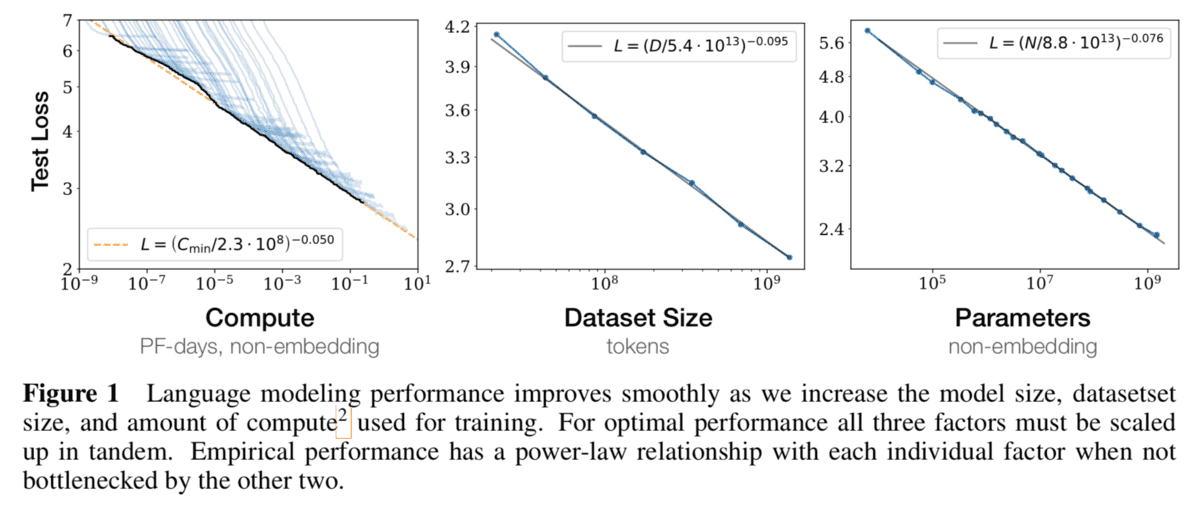

OpenAI has published a paper showing that a scaling law holds for such large Transformer-based models. According to this scaling law, performance is determined mainly by just three variables: the model size, the size of the training data, and the compute budget for training. For example, a figure in that paper shows that, as long as the data size and compute budget are not the bottleneck, the model's performance improves along a straight line on a log-log plot as the model size increases. Moreover, and surprisingly, no limit to this scaling law has been observed so far. In other words, as the model size grows, performance appears to keep following this line with no upper bound yet in sight. This suggests that the performance of AI models may boil down to the simple question of how large a model you can handle.
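To make the "straight line on a log-log plot" concrete, here is a minimal sketch of the model-size form of the law, L(N) = (N_c / N)^α_N, where L is the test loss (lower is better) and N is the number of non-embedding parameters. The constants are the values reported in OpenAI's scaling-law paper (Kaplan et al., 2020) and are used here purely for illustration; the function names are hypothetical. Because log L = α_N (log N_c − log N), the slope between any two model sizes is the same constant, −α_N.

```python
import math

# Illustrative constants for L(N) = (N_C / N) ** ALPHA_N, as reported by
# Kaplan et al. (2020) for non-embedding parameters. Treat them as illustrative.
ALPHA_N = 0.076
N_C = 8.8e13


def loss_from_params(n_params: float) -> float:
    """Predicted test loss for a model with n_params parameters,
    assuming data size and compute are not the bottleneck."""
    return (N_C / n_params) ** ALPHA_N


def log_log_slope(n1: float, n2: float) -> float:
    """Slope of log(loss) versus log(model size) between two model sizes."""
    rise = math.log(loss_from_params(n2)) - math.log(loss_from_params(n1))
    run = math.log(n2) - math.log(n1)
    return rise / run


sizes = [1e6, 1e7, 1e8, 1e9, 1e10]
for n in sizes:
    print(f"N = {n:.0e}  predicted loss = {loss_from_params(n):.3f}")

# The slope is identical for every pair of sizes, i.e. a straight line on a log-log plot.
print(log_log_slope(1e6, 1e7), log_log_slope(1e9, 1e10))
```

Each tenfold increase in model size multiplies the predicted loss by the same factor, which is exactly what "no upper bound on this line" means in practice.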

Against this background, giant models have attracted a great deal of attention in many fields. However, one concern arises here. A giant model is trained on a huge amount of data, and if that data contains personal information or information that should not be disclosed, the model may memorize it verbatim, which can lead to information leakage.

Memorizing training data verbatim is usually regarded as a sign of overfitting, that is, of low generalization performance. Because giant models achieve very high accuracy and generalize well, previous studies considered this kind of memorization unlikely. However, the paper presented here shows that giant models memorize training data as well, and that the larger the model, the more training data it memorizes. This means that the larger the model, the more likely it is that personal information and other sensitive data can be extracted from it. In fact, the authors succeeded in extracting part of GPT-2's training data by attacking the model. This is a very important paper showing that today's giant models carry a serious risk.
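The core idea of verbatim memorization, and of extracting it by prompting with a prefix, can be shown with a deliberately tiny stand-in for a language model. This is a toy illustration only, not the paper's attack on GPT-2: it assumes a word-level trigram model, greedy decoding, and a made-up corpus in which one hypothetical "private" record appears exactly once. All names and data here are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical training corpus; the third line plays the role of a private record.
corpus = [
    "the weather is nice today",
    "the weather is cold today",
    "please contact john doe at 555 0142 for details",
    "the meeting is at noon today",
]


def train_trigram(sentences):
    """Count how often each word follows each pair of preceding words."""
    counts = defaultdict(Counter)
    for s in sentences:
        words = ["<s>", "<s>"] + s.split() + ["</s>"]
        for a, b, c in zip(words, words[1:], words[2:]):
            counts[(a, b)][c] += 1
    return counts


def greedy_generate(counts, prefix, max_words=20):
    """Extend a prompt by always choosing the most frequent next word."""
    words = ["<s>", "<s>"] + prefix.split()
    for _ in range(max_words):
        options = counts.get((words[-2], words[-1]))
        if not options:
            break
        nxt = options.most_common(1)[0][0]
        if nxt == "</s>":
            break
        words.append(nxt)
    return " ".join(words[2:])


model = train_trigram(corpus)
# Knowing only the start of the record is enough to recover the rest verbatim.
print(greedy_generate(model, "please contact"))
```

A record seen only once is still reproduced word for word, because nothing else in the training data competes with it. Real models are vastly more complex, but the paper's finding is that large models can likewise store rare sequences in a form that the right prompt brings back out.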
