A Castle In The Sky! Automatically Replace The Sky In Videos Offline With High Accuracy!

3 main points

✔️ A new framework for compositing sky regions in real-time in the style of your choice

✔️ Compositing relies entirely on image information; no data from inertial sensors such as gyroscopes is used

✔️ Applicable to both online and offline applications

Castle in the Sky: Dynamic Sky Replacement and Harmonization in Videos

written by Zhengxia Zou

(Submitted on 22 Oct 2020)

Comments: Project website: this https URL

Subjects: Computer Vision and Pattern Recognition (cs.CV)

overview

The sky has a huge impact on images and videos.

For example, many people who travel to shoot a tropical ocean would find the result unappealing if the weather turned out overcast or rainy; for a tropical sea, clear, sunny skies are what most people find attractive. Conversely, on an ordinary sunny or cloudy day, you might want images and videos with the wild atmosphere of a storm.

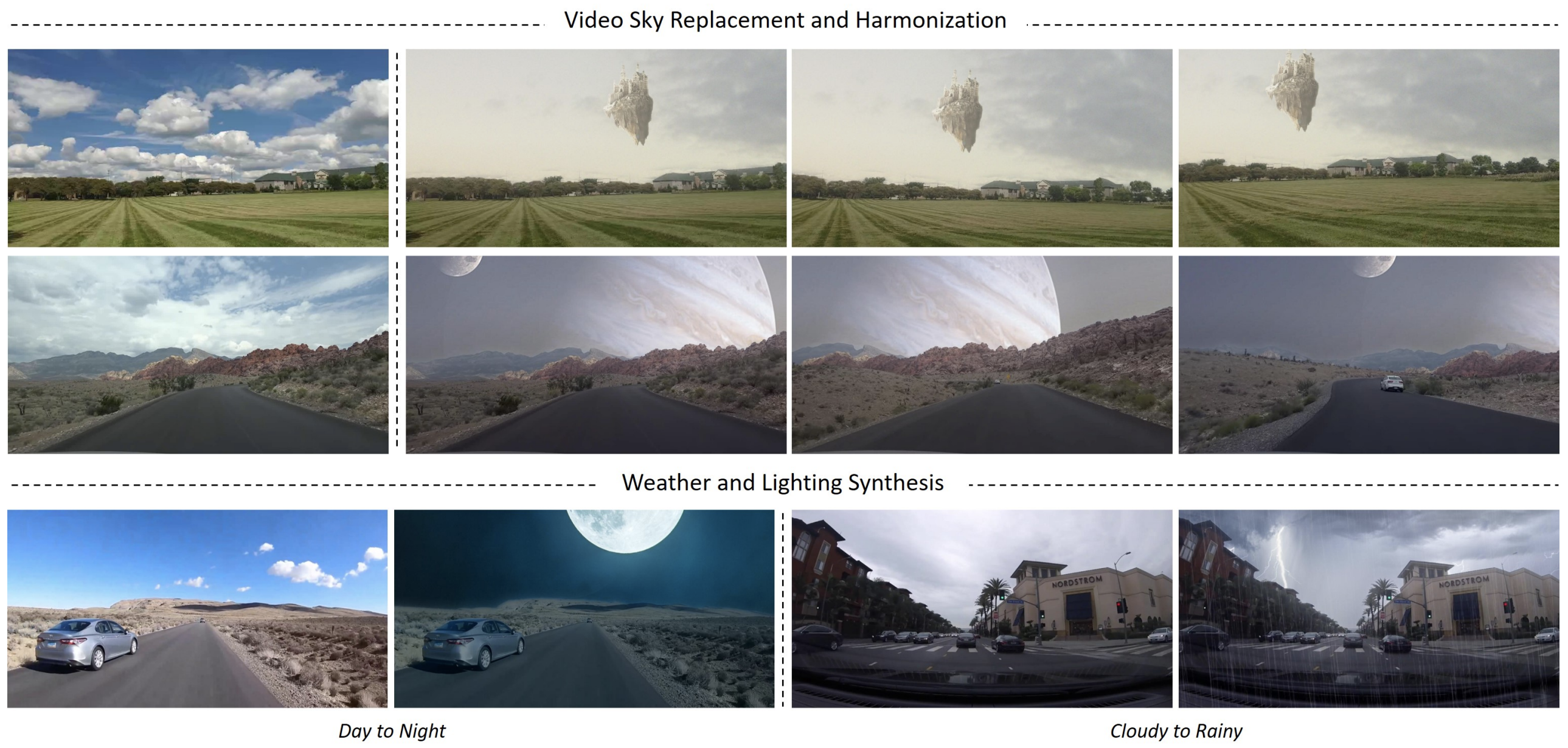

This paper proposes a new framework that replaces the sky region of a video with a sky of another style in real-time, as shown in the figure. It can also be applied to weather transformations, for example.

The sample video shows a castle appearing in a clear blue sky, and the sky being transformed into a beautiful night sky with a full moon or a stormy sky with lightning striking.

These techniques are often used in creative advertising and film production. The development of computer vision and augmented reality (AR) over the past few years has made it possible to edit the sky in popular editing software such as MeituPic and Photoshop.

However, these tools require a person to edit each frame and adjust the settings, which takes skill and time, so there is still room for improvement in terms of automation. Film production in particular involves a great deal of editing work, because many scenes need altered footage to create a distinctive setting, such as outer space.

On the other hand, some applications let you edit the sky interactively in real-time. For example, a smartphone app called StarWalk2 lets you hold your camera up to the sky and, in real-time, maps planets, constellations, and other celestial objects onto the direction the camera is pointing.

(Source: Google Play)

In addition, Fakeye, announced by the Vietnam National University in 2020, can synthesize AR objects in the sky in real-time.

(Source: Fakeye: Sky Augmentation with Real-time Sky Segmentation and Texture Blending)


Unlike the image editing software mentioned earlier, both of these applications let you edit the sky in real-time, without having to edit each frame.

However, these technologies require cameras with built-in inertial measurement sensors, such as gyroscopes, and are therefore limited by hardware: they cannot be used on devices without inertial measurement or in offline situations. This paper therefore proposes a more broadly useful framework that edits the sky in real-time using only the video itself, without any sensor information.
