A Multi-agent Framework Is Now Available For Any Task By Customizing The Workflow!

3 main points

✔️ Propose AutoGen, a multi-agent framework that allows customization of a series of workflows by combining humans, LLMs, and tools

✔️ Developers can freely extend the functionality of each backend to handle a variety of tasks

✔️ Experimental comparisons with existing models demonstrate the effectiveness of AutoGen

AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation Framework

written by Qingyun Wu, Gagan Bansal, Jieyu Zhang, Yiran Wu, Shaokun Zhang, Erkang Zhu, Beibin Li, Li Jiang, Xiaoyun Zhang, Chi Wang

(Submitted on 16 Aug 2023)

Comments: Published on arxiv.

Subjects: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

code:

The images used in this article are from the paper, the introductory slides, or were created based on them.

Introduction

The emergence of large language models (LLMs) such as GPT-4 has not only led to AI applications with outstanding performance in coding, question answering, and chatbots, but has also drawn attention to the task-solving capabilities of AI agents built on LLMs.

However, the single-agent setup used in many existing studies handles simple tasks efficiently but struggles with complex ones.

In recent years, multi-agent systems, in which agents perform tasks through natural language conversations with each other or with humans, have become a mainstream approach to this problem, but their complex workflows are difficult to design, implement, and optimize.

Against this background, this article describes a paper that proposes AutoGen, a multi-agent framework that allows developers to freely extend the functionality of each backend.

AutoGen Framework

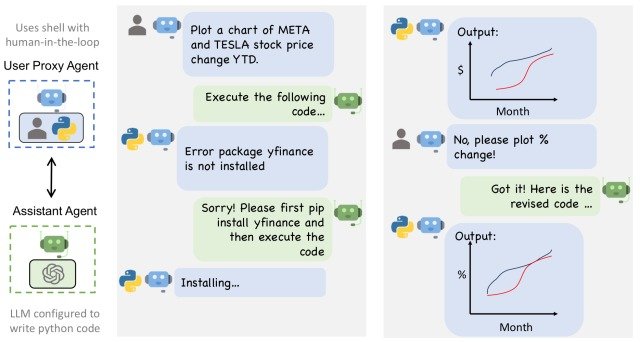

AutoGen is a framework for building multi-agent systems that combine humans, LLMs, and tools, such as the two-agent task-solving setup shown in the figure below.

To build a complex multi-agent system with AutoGen, two things need to be defined:

- A set of conversable agents with specific capabilities and roles

- The interaction behavior between agents, i.e., how an agent responds when it receives a message from another agent
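The two definitions above can be illustrated with a minimal sketch in plain Python. This is not AutoGen's API: `SimpleAgent`, `run_chat`, and the reply functions are hypothetical names introduced only for illustration, and a hard-coded function stands in for the LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical types for illustration only; this is not AutoGen's API.
# A reply function maps (sender name, message) to a reply, or None to stop.
ReplyFn = Callable[[str, str], Optional[str]]


@dataclass
class SimpleAgent:
    """A conversable agent: a name (role) plus a rule for answering messages."""
    name: str
    reply_fn: ReplyFn
    inbox: List[Tuple[str, str]] = field(default_factory=list)

    def receive(self, sender: str, message: str) -> Optional[str]:
        # Record the incoming message, then apply the interaction behavior.
        self.inbox.append((sender, message))
        return self.reply_fn(sender, message)


def run_chat(a: SimpleAgent, b: SimpleAgent, opening: str, max_turns: int = 6):
    """Agent `a` sends `opening` to `b`; the agents alternate until one returns None."""
    transcript = [(a.name, opening)]
    speaker, listener, message = a, b, opening
    for _ in range(max_turns):
        reply = listener.receive(speaker.name, message)
        if reply is None:
            break
        transcript.append((listener.name, reply))
        speaker, listener, message = listener, speaker, reply
    return transcript


def assistant_reply(sender: str, message: str) -> Optional[str]:
    # Stand-in for an LLM: only understands "add <int> <int> ...".
    parts = message.split()
    if len(parts) >= 2 and parts[0] == "add":
        return str(sum(int(p) for p in parts[1:]))
    return None


def user_reply(sender: str, message: str) -> Optional[str]:
    # The user accepts any answer and ends the conversation.
    return None


user = SimpleAgent("user", user_reply)
assistant = SimpleAgent("assistant", assistant_reply)
transcript = run_chat(user, assistant, "add 2 3")
print(transcript)
```

The agent set is the two `SimpleAgent` instances, and the interaction behavior is whatever each `reply_fn` does when a message arrives; swapping a reply function changes the conversation without touching the loop.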

In addition, when building a multi-agent system, you can either use AutoGen's built-in agents directly or develop customized agents based on them.

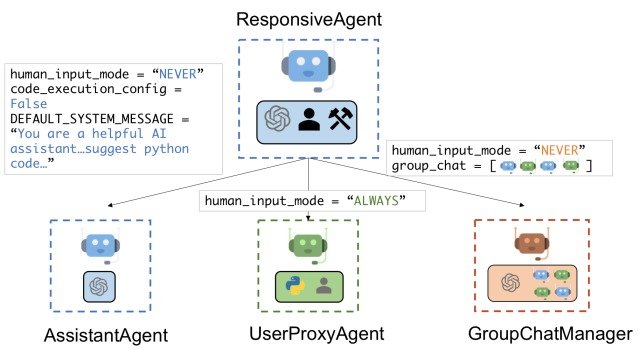

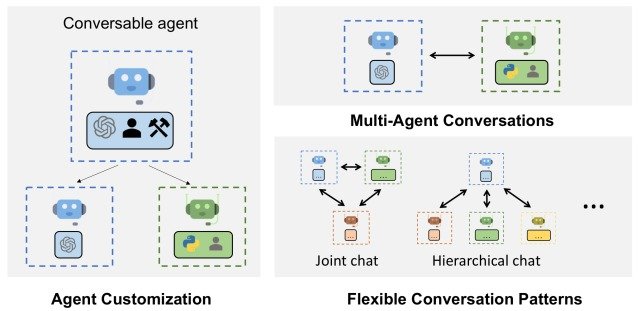

The figure below provides an overview of AutoGen's built-in agents.

As the LLM, human, and tool symbols under each agent indicate, ResponsiveAgent is the default agent and can freely combine LLMs, humans, and tools.

AssistantAgent is designed to act as an AI assistant; it responds to messages using an LLM and does not require human input or code execution.

UserProxyAgent is designed to act as a proxy for a human user; by default it requests human input at each turn of the interaction, and it responds to messages via a human or a tool.

GroupChatManager uses an LLM to manage a group of conversable agents and to respond to messages in group chats.

This built-in agent design allows AutoGen to be customized with any combination of LLMs, humans, and tools as shown in the figure below (Agent Customization), to enable conversations between agents to solve tasks (Multi-Agent Conversations), and to support many complex conversation patterns (Flexible Conversation Patterns).
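As a rough illustration of how one agent type can combine human, tool, and LLM backends, here is a small sketch in plain Python. It does not use AutoGen; `BackendAgent`, the backend functions, and the fixed human, tool, LLM order are all assumptions made for this example.

```python
from typing import Callable, Optional

# Hypothetical sketch, not AutoGen's API: a backend takes a message and
# returns a reply, or None if it has nothing to say.
Backend = Callable[[str], Optional[str]]


class BackendAgent:
    """Tries its configured backends in a fixed order: human, tool, LLM."""

    def __init__(self, name: str,
                 human: Optional[Backend] = None,
                 tool: Optional[Backend] = None,
                 llm: Optional[Backend] = None):
        self.name = name
        self.backends = [b for b in (human, tool, llm) if b is not None]

    def reply(self, message: str) -> Optional[str]:
        for backend in self.backends:
            answer = backend(message)
            if answer is not None:
                return answer
        return None


def fake_llm(message: str) -> Optional[str]:
    # Stand-in for a real LLM call.
    return f"LLM draft for: {message}"


def silent_human(message: str) -> Optional[str]:
    # Simulates a human who skips their turn.
    return None


def sum_tool(message: str) -> Optional[str]:
    # Toy tool: handles messages of the form "sum: 1 2 3".
    if message.startswith("sum:"):
        return str(sum(int(x) for x in message[4:].split()))
    return None


# An assistant-like agent backed only by an LLM.
assistant = BackendAgent("assistant", llm=fake_llm)
# A user-proxy-like agent: human first, then a tool.
user_proxy = BackendAgent("user_proxy", human=silent_human, tool=sum_tool)

print(assistant.reply("hello"))
print(user_proxy.reply("sum: 1 2 3"))
```

Passing a different combination of backends to the same class mirrors the idea that the assistant-style and user-proxy-style agents are configurations of one general agent rather than unrelated types.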

Applications of AutoGen

To demonstrate the effectiveness of AutoGen, the paper validates it on a variety of tasks.

Math Problem Solving

Mathematics is a fundamental subject in academia, and LLMs can be used for a variety of applications in this area, including instruction by personalized agents and research support.

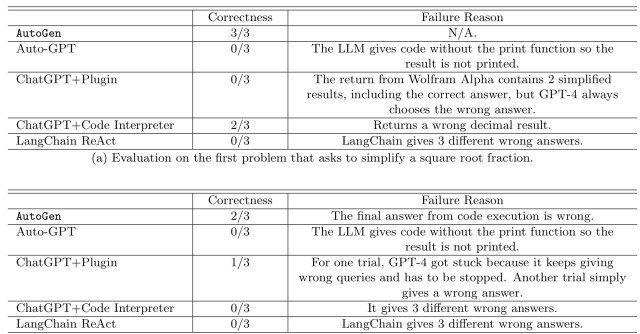

Therefore, this experiment compared five systems (AutoGen, Auto-GPT, ChatGPT+Plugin, ChatGPT+Code Interpreter, and LangChain ReAct) on the MATH dataset, a dataset of mathematical problems.

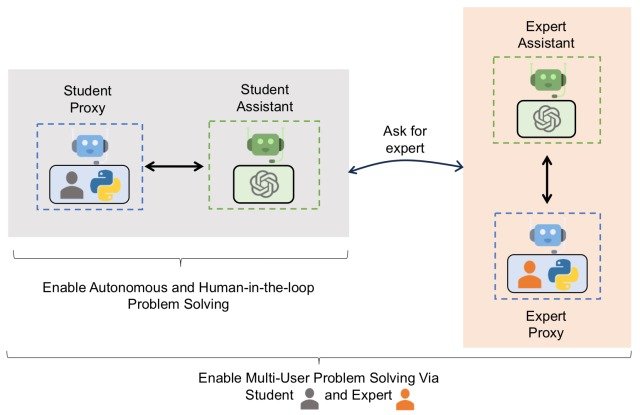

The AutoGen setup used to solve the math problem is shown in the figure below.

In this workflow, the Student and the Student Assistant work together to solve the problem, and only when the Student Assistant is not satisfied with its answer can it ask for help from another agent assigned as the Expert Assistant.
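The escalation step in this workflow can be sketched as follows. This is a simplified illustration, not the paper's implementation: the numeric confidence score used to model "not satisfied", the `threshold` parameter, and the toy solver functions are all assumptions.

```python
from typing import Callable, Tuple


def solve_with_escalation(
    problem: str,
    assistant_solve: Callable[[str], Tuple[str, float]],
    expert_solve: Callable[[str], str],
    threshold: float = 0.8,
) -> Tuple[str, str]:
    """Return (answer, who_answered).

    The assistant answers first; the expert is consulted only when the
    assistant's self-reported confidence falls below `threshold`.
    """
    answer, confidence = assistant_solve(problem)
    if confidence >= threshold:
        return answer, "student_assistant"
    return expert_solve(problem), "expert_assistant"


def toy_assistant(problem: str) -> Tuple[str, float]:
    # Stand-in for an LLM-backed assistant with a tiny lookup table.
    known = {"2+2": "4"}
    if problem in known:
        return known[problem], 1.0
    return "unsure", 0.0


def toy_expert(problem: str) -> str:
    # Stand-in for the expert agent.
    return {"integral of x": "x^2/2 + C"}.get(problem, "unknown")


easy = solve_with_escalation("2+2", toy_assistant, toy_expert)
hard = solve_with_escalation("integral of x", toy_assistant, toy_expert)
print(easy, hard)
```

The point of the design is that the expert is only invoked on demand, so easy problems never leave the Student and Student Assistant conversation.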

The accuracy on the two settings of math problems from the MATH dataset is shown in the figure below. (Each model was tested three times on each question.)

As can be seen from the figure, AutoGen had the highest percentage of correct answers among all models, demonstrating its effectiveness for math problems.

Multi-agent Coding

Next, we conducted a multi-agent coding test focused on OptiGuide, a framework that excels at writing appropriate code for user questions.

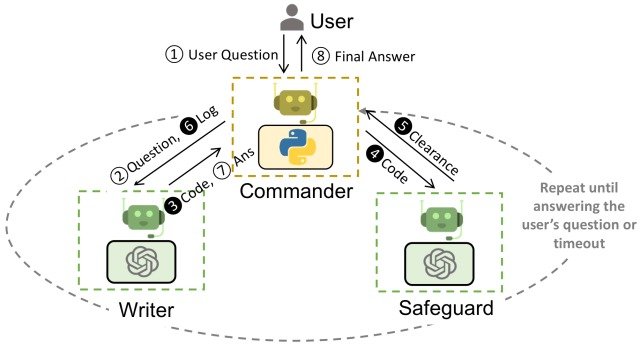

OptiGuide is a framework that uses LLMs and external tools to effectively address user questions related to an application, and in this experiment we designed the workflow shown in the figure below.

In this workflow, the Commander first receives the user's question and then converses with the Writer and the Safeguard.

The Writer creates the answer and code, the Safeguard checks that the code is safe (i.e., it does not leak information, does not contain malicious code, etc.), and the Commander executes the code.

If there is a problem with code execution, etc., the process in the shaded area of the diagram is repeated until the problem is resolved.
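The write, check, execute, and retry cycle described above can be sketched in plain Python. This is a toy under stated assumptions, not OptiGuide or AutoGen code: `commander`, the three stand-in functions, the keyword-based safety check, and the `eval`-based executor are hypothetical, and `eval` with empty builtins is used only for brevity and is not a real sandbox.

```python
from typing import Callable, Optional, Tuple


def commander(
    question: str,
    writer: Callable[[str, Optional[str]], str],
    safeguard: Callable[[str], bool],
    execute: Callable[[str], Tuple[bool, object]],
    max_rounds: int = 3,
) -> dict:
    """Repeat write -> safety check -> execute until the code runs or rounds run out."""
    feedback = None
    for round_no in range(1, max_rounds + 1):
        code = writer(question, feedback)
        if not safeguard(code):
            feedback = "rejected by safeguard"
            continue
        ok, result = execute(code)
        if ok:
            return {"rounds": round_no, "result": result}
        feedback = f"execution failed: {result}"
    return {"rounds": max_rounds, "result": None}


def toy_writer(question: str, feedback: Optional[str]) -> str:
    # Stand-in for an LLM: improves its code based on the feedback it receives.
    if feedback is None:
        return "import os"      # unsafe first draft
    if feedback.startswith("rejected"):
        return "cost / 0"       # safe but buggy second draft
    return "cost * 1.05"        # working third draft


def toy_safeguard(code: str) -> bool:
    # Toy keyword check; a real safeguard would be far more thorough.
    return "import os" not in code and "open(" not in code


def toy_execute(code: str) -> Tuple[bool, object]:
    # Toy executor: evaluates one expression with a single known variable.
    try:
        return True, eval(code, {"__builtins__": {}}, {"cost": 100.0})
    except Exception as exc:
        return False, str(exc)


outcome = commander("What if the cost rises by 5%?", toy_writer, toy_safeguard, toy_execute)
print(outcome)
```

In this run the first draft is blocked by the safeguard, the second fails at execution, and the third succeeds, which mirrors the repeated section of the workflow.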

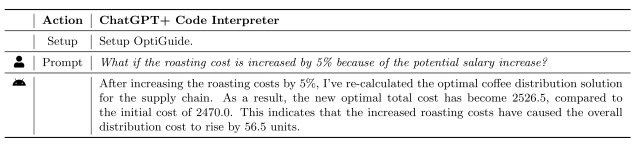

This experiment compared AutoGen and ChatGPT+Code Interpreter by asking each system, "What if the roasting cost is increased by 5% because of the potential salary increase?"

The results of ChatGPT+Code Interpreter are shown in the figure below.

ChatGPT+Code Interpreter is unable to execute code with private packages or customized dependencies (such as Gurobi), which interrupts the workflow.

The AutoGen results, on the other hand, are shown in the figure below.

In contrast to ChatGPT+Code Interpreter, the response by AutoGen is more streamlined and autonomous, integrating multiple agents to address issues, resulting in a more stable workflow.

This stable workflow allows for reuse in other applications and the composition of larger applications, and this experiment demonstrates AutoGen's superior coding performance.

Conversational Chess

Chess is arguably the most popular board game in the world, and to demonstrate the effectiveness of AutoGen in this experiment, we designed Conversational Chess, a new chess game that supports natural language interfaces.

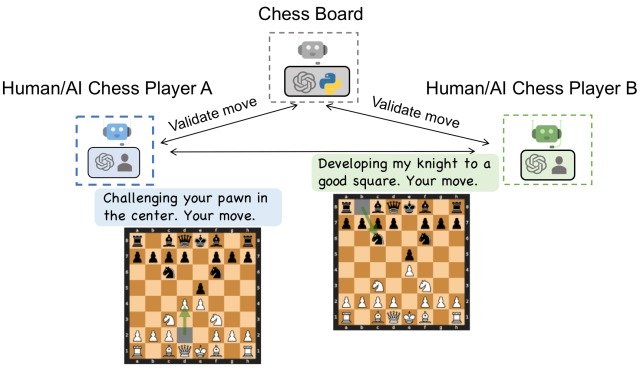

The workflow of AutoGen in Conversational Chess is shown in the figure below.

Each player is either a human or an agent generated by AutoGen (Human/Chess Player), and the Chess Board agent manages the rules of the game and supports the players by providing information about the board.
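The role of the board agent can be illustrated with a small sketch. It is not the paper's implementation: `BoardAgent` and `toy_legal_moves` are hypothetical, and the "rules" here are a two-move lookup table rather than real chess; an actual setup would presumably delegate legality checks to a chess engine or library.

```python
from typing import Callable, List, Set


class BoardAgent:
    """Validates each proposed move and keeps the shared game history."""

    def __init__(self, legal_moves_fn: Callable[[List[str]], Set[str]]):
        self.legal_moves_fn = legal_moves_fn
        self.history: List[str] = []

    def submit(self, player: str, move: str) -> str:
        legal = self.legal_moves_fn(self.history)
        if move not in legal:
            # Reject the move and tell the player what is allowed.
            return f"{player}: '{move}' is illegal, legal moves are {sorted(legal)}"
        self.history.append(move)
        return f"{player} played {move}"


def toy_legal_moves(history: List[str]) -> Set[str]:
    # Toy rule table, not real chess: only the first two plies are modeled.
    if len(history) == 0:
        return {"e4", "d4"}
    if len(history) == 1:
        return {"e5", "c5"}
    return set()


board = BoardAgent(toy_legal_moves)
rejected = board.submit("white", "e5")
first = board.submit("white", "e4")
second = board.submit("black", "c5")
print(rejected, first, second, sep="\n")
```

Keeping validation in a separate agent means the players can converse freely in natural language while only legal moves reach the shared game state.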

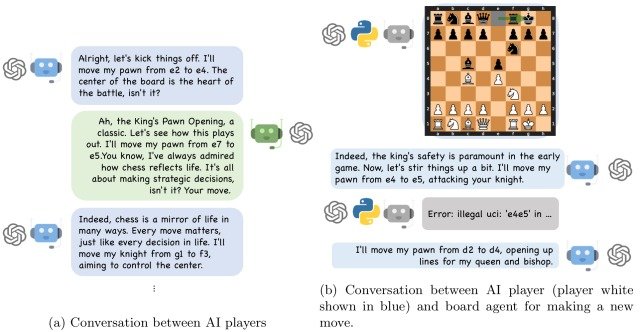

In this experiment, a match was played between two agents and the scene shown below was observed.

As with many of the aforementioned agent systems, this workflow allowed each player to share information and knowledge through conversation, complementing each other's abilities and allowing them to choose better moves.

Summary

In this article, we explained the paper that proposed AutoGen, a multi-agent framework that enables the creation of agents with various roles by customizing workflows that combine humans, LLMs, and tools, and that allows developers to freely extend the functionality of each backend.

While AutoGen can be customized for different tasks, as in the experiments conducted in this paper, issues remain around application-dependent configuration, such as the following:

- How many agents to include

- How to assign agent roles and capabilities

- Whether to automate certain parts of the workflow

Future progress on these points will be closely watched.

Those interested can find the details of the AutoGen architecture and the experimental results presented here in the paper.
