Machine Learning Without Centralized Data Management? Coalition Learning To Predict Oxygen Dosage For COVID-19 Infected Patients!

3 main points

✔️ Federated Learning (FL) is a method for machine learning while keeping data confidential

✔️ In this paper, FL is used to estimate the oxygen requirements of COVID-19 patients without sharing data across multiple medical institutions

✔️ Achieved an AUC of 0.92, improving the average AUC by 16% over learning in a single medical institution

Federated learning for predicting clinical outcomes in patients with COVID-19

written by Ittai Dayan, Holger R. Roth, Aoxiao Zhong, Ahmed Harouni, Amilcare Gentili, Anas Z. Abidin, Andrew Liu, Anthony Beardsworth Costa, Bradford J. Wood, Chien-Sung Tsai, Chih-Hung Wang, Chun-Nan Hsu, C. K. Lee, Peiying Ruan, Daguang Xu, Dufan Wu, Eddie Huang, Felipe Campos Kitamura, Griffin Lacey, Gustavo César de Antônio Corradi, Gustavo Nino, Hao-Hsin Shin, Hirofumi Obinata, Hui Ren, Jason C. Crane, Jesse Tetreault, Jiahui Guan, John W. Garrett, Joshua D. Kaggie, Jung Gil Park, Keith Dreyer, Krishna Juluru, Kristopher Kersten, Marcio Aloisio Bezerra Cavalcanti Rockenbach, Marius George Linguraru, Masoom A. Haider, Meena AbdelMaseeh, Nicola Rieke, Pablo F. Damasceno, Pedro Mario Cruz e Silva, Pochuan Wang, Sheng Xu, Shuichi Kawano, Sira Sriswasdi, Soo Young Park, Thomas M. Grist, Varun Buch, Watsamon Jantarabenjakul, Weichung Wang, Won Young Tak, Xiang Li, Xihong Lin, Young Joon Kwon, Abood Quraini, Andrew Feng, Andrew N. Priest, Baris Turkbey, Benjamin Glicksberg, Bernardo Bizzo, Byung Seok Kim, Carlos Tor-Díez, Chia-Cheng Lee, Chia-Jung Hsu, Chin Lin, Chiu-Ling Lai, Christopher P. Hess, Colin Compas, Deepeksha Bhatia, Eric K. Oermann, Evan Leibovitz, Hisashi Sasaki, Hitoshi Mori, Isaac Yang, Jae Ho Sohn, Krishna Nand Keshava Murthy, Li-Chen Fu, Matheus Ribeiro Furtado de Mendonça, Mike Fralick, Min Kyu Kang, Mohammad Adil, Natalie Gangai, Peerapon Vateekul, Pierre Elnajjar, Sarah Hickman, Sharmila Majumdar, Shelley L. McLeod, Sheridan Reed, Stefan Gräf, Stephanie Harmon, Tatsuya Kodama, Thanyawee Puthanakit, Tony Mazzulli, Vitor Lima de Lavor, Yothin Rakvongthai, Yu Rim Lee, Yuhong Wen, Fiona J. Gilbert, Mona G. Flores & Quanzheng Li

(Submitted on 15 Sep 2021)

Comments: Nature Medicine

code:

The images used in this article are from the paper, the introductory slides, or were created based on them.

Abstract

Federated Learning (FL) is a technique used to train artificial intelligence models using data from multiple sources while maintaining the anonymity of the data. This removes many of the barriers to data sharing. For example, it is not easy to share medical data between hospitals as in this paper. With federated learning, we can train machine learning models without collecting patient data in a single lab (i.e., without sending the information outside the hospital).

In this paper, we use coalition learning to estimate the future oxygen dosage requirements of COVID-19 patients. As a result, we achieve an AUC of 0.92, which is a 16% improvement in AUC over models trained by a single institution. This study shows that federated learning can enable rapid data science collaboration without data sharing.

main

During the COVID-19 pandemic, the scientific, university, medical, and data science communities faced data ownership and privacy issues that are common in collaborative research. However, researchers have come together in the face of the international crisis and have rapidly promoted open and collaborative approaches, including open-source software, data repositories, and the publication of anonymized datasets.

The authors had previously developed a clinical decision support (CDS) model for SARS-COV-2. It has been described in other papers that this CDS model can be used to predict the outcome of COVID-19 patients. The inputs of the CDS model are chest x-ray images, vital signs, anthropometric data, and clinical laboratory values, and the output is a score correlated with oxygen administration called CORISK.

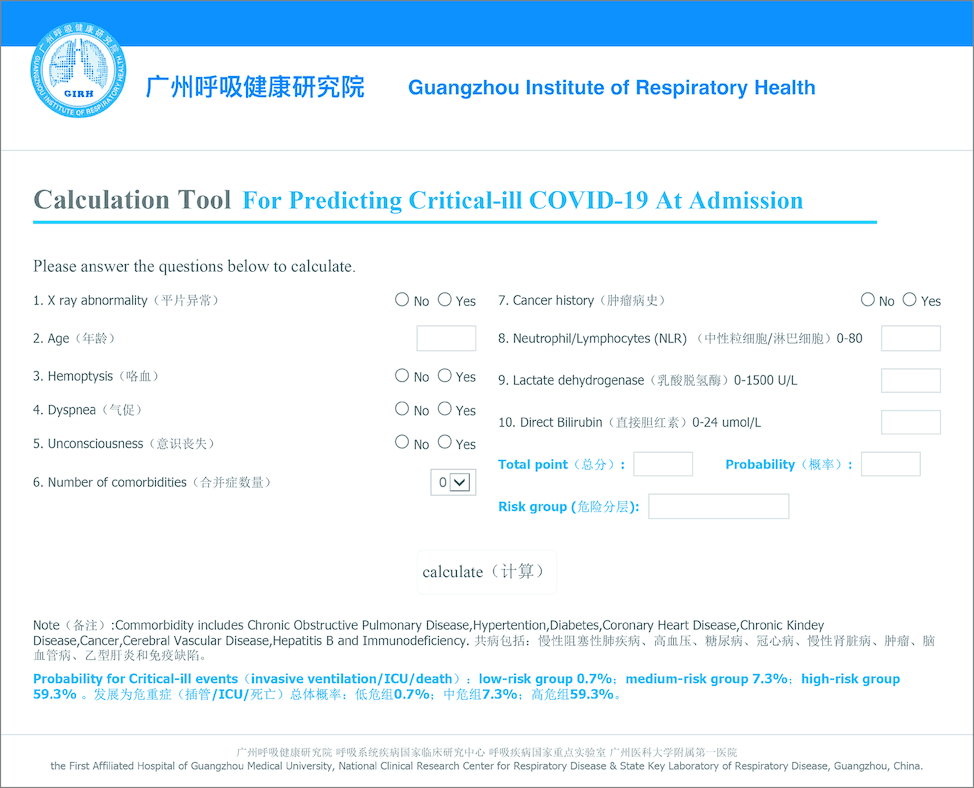

The above is from a reference article ( Development and Validation of a Clinical Risk Score to Predict the Occurrence of Critical Illness in Hospitalized Patients With COVID-19 ) Citation. An input form is shown.

Since healthcare professionals prefer models validated with their data, many AI models, including the CDS model, have been criticized for overtraining and poor generalizability. Therefore, to solve this problem while considering personal information, it is necessary to train using various data collected from multiple medical institutions without centralizing the data.

Coalitional learning improves data tracking and allows for rapid, centrally coordinated experimentation while assessing changes in algorithms and their impact. In a client-server approach, which is one form of coalitional learning, untrained models are sent to other servers (nodes), where training takes place. The results are then integrated (federated) on a central server and iterated until the training is completed. Only the weights and gradients of the model are communicated between the client and the server.

In this study, the CSD model was trained using a coalitional learning approach, and an FL model named EXAM was developed.

Results

EXAM is based on the CDS model described above. The input is a set of 20 features and the output is the patient's oxygen dose 24 and 72 hours after admission to the emergency department.

The table above details the input and output data. Chest x-ray images, blood test results, and oxygen saturation are listed.

The output, Outcome, is the amount of oxygen administered after 24 or 72 hours. In practice, the numbers are assigned to the most intense treatment the patient received. Oxygen therapy is divided in order of weakness into room air (RA), low-flow oxygen (LFO), high-flow oxygen (HFO)/noninvasive ventilation (NIV), and mechanical ventilation (MV). The values are 0, 0.25, 0.50, and 0.75, respectively. If the patient dies within 72 hours, a value of 1 is assigned. This results in five values for Ground Truth (the model itself performs regression rather than classification, so the output values are 0~1).

EXAM uses a neural network (ResNet34) consisting of 34 layers to extract features from chest x-ray images and integrates other inputs with Deep & Cross network. The output is a risk score, called EXAM score, which is a continuous ground of 0~1.

Federating the model

EXAM is the first federated learning model for COVID-19 trained on 16,148 datasets. It is also a very large and multinational project in clinical AI.

Above are the countries that participated in this study.

The figure above compares the locally trained model with the global federated learning model using test data from each client. In all examples, the federated learning model has a higher AUC, with an average improvement of 16%.

In addition, as shown in the figure below, the coalition learning model showed a 38% improvement in generalizability.

The figure above illustrates the generalizability of a coalition learning model. For example, a model trained on 1,000 cases from a single hospital has a lower average AUC (for the rest of the data not used for training) than a coalition learning model trained on 1,000 cases from multiple institutions. In particular, the local model trained on only mild cases benefited significantly from the coalitional learning. This local model was more accurate in its predictions for severe cases.

The ROC plot above is an example of the comparison between the local model and coalition learning. In this plot for moderate to severe cases with t≥0.5, we can see that the true positive rate is greatly improved in the coalitional learning model. Other examples are shown below.

In any case, the coalition learning model shows a significant improvement over the local model in generalizability.

Validation at independent sites

In this study, we only validated the model at three institutions. Among them, the validation results at Cooley Dickinson Hospital (CDH) in Massachusetts, USA, which has the largest data set, are shown below.

The figure above shows the results for the predicted EXAM score after 24 hours.

The figure above shows the results for the EXAM score after 72 hours.

For example, EXAM achieved a sensitivity of 0.950 and a specificity of 0.882 in predicting MV treatment (or death) at 24 hours in that hospital.

Use of differential privacy

The primary motivation for healthcare organizations to use federated learning is to protect the security and privacy of data and to ensure compliance. However, even in a federated learning model, there are potential risks. In this study, we decided to reduce the percentage of weight sharing as a risk mitigation measure in case the communication between the server and the client is intercepted. As a result, we found that the coalitional learning model can perform equally well even if only 25% of the weight updates are shared.

discussion

In this research, we have succeeded in rapidly and collaboratively developing the AI models needed in the medical field. The result is a model that is more robust and accurate than training on local data from each hospital. In particular, clients with only relatively small datasets have benefited greatly from coalitional learning. This means that the benefits of participating in a collaborative study of federated learning are significant.

This study also predicts oxygen delivery (risk), a feature that differentiates it from the more than 200 papers published to date that predict diagnosis and mortality; it does not require PCR data to be fed into the model as input, making it a useful model for real-world clinical practice.

However, because the data is not centralized, access to the data is limited. As a result, the analysis of the model output results is limited.

Also, in the early stages of a pandemic, many patients receive prophylactic high-flow oxygen, which may skew EXAM predictions.

method

Ethics approval

All procedures are by the Declaration of Helsinki and Good Clinical Practice guidelines. They have also been approved by the ethics committees within each institution.

Study setting

In this study, we used data from 20 institutions. The model is available at NVIDIA NGC (downloadable).

Data collection

A total of 16,148 data were prepared from 20 institutions from December 2019 to September 2020.

Patient inclusion criteria

Patient participation criteria were that (1) the patient presented to the emergency department (or equivalent), (2) the patient had PCR testing before discharge, (3) the patient had chest radiography, and (4) the patient's data contained at least 5 of the previously listed features (all obtained in the emergency department) These are.

Model input

Twenty-one features were used as inputs to the model. Outputs are oxygen doses 24 and 72 hours after arrival in the emergency room.

EXAM model development

This model is not approved by any regulatory body at this time and should be used for research purposes only.

FL details

The most common form of federated learning is the Federated Averaging algorithm proposed by McMahan et al. This algorithm can be implemented in a client-server fashion, with each hospital acting as an academician. Federated learning can be thought of as a method that aims to minimize global losses by reducing the losses of each client.

can be thought of as a method that aims to minimize global losses by reducing the losses of each client.

Each client learns locally and shares updates to the model weights with a central server that aggregates the contributions using secure socket layer encryption and communication protocols. The server sends the updated weights to the writing clients after aggregation, and each client resumes learning locally. This is repeated many times until the model converges.

Locally, the number of epochs is 200, and the optimization function is Adam (Adam is also used on the central server). The initial learning rate is 5 x 10-5, which is halved every 40 epochs. During training, we also applied data enhancements such as rotation, translation, shear, scaling, and noise.

Data availability

The datasets of the 20 institutions that participated in this study are under the control of their respective institutions. It is not shared with other participating institutions or collaborating servers and is private.

Code availability

All of the code and software used in this study are available at NGC. Trained models, data preparation guidelines, training code, model validation code, installation guides, etc. are also available at NGC.

Categories related to this article