![[Infinite Nature] Automatic Generation Of Image Video From A Single Image! What Is Google's Outrageous Research?](https://aisholar.s3.ap-northeast-1.amazonaws.com/media/February2021/ezgif.com-gif-maker_4_.gif)

[Infinite Nature] Automatic Generation Of Image Video From A Single Image! What Is Google's Outrageous Research?

3 main points

✔️ Google Research publishes research on generating image-video-like videos from a single image

✔️ Achieved by combining knowledge from the two domains of video synthesis and image synthesis

✔️ Pioneered a new genre of "perpetual view generation" that continuously generates new views

Infinite Nature: Perpetual View Generation of Natural Scenes from a Single Image

written by Andrew Liu, Richard Tucker, Varun Jampani, Ameesh Makadia, Noah Snavely, Angjoo Kanazawa

(Submitted on 17 Dec 2020 (v1), last revised 18 Dec 2020 (this version, v2))

Comments: Accepted to arXiv

Subjects: Computer Vision and Pattern Recognition (cs.CV); Graphics (cs.GR)

Outline

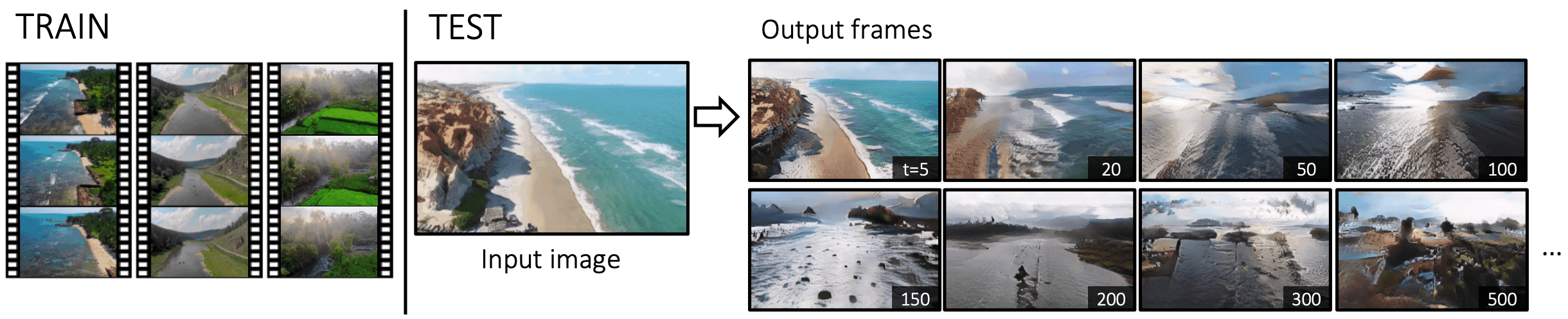

Google Research has published a study on permanent view generation. The idea is to automatically generate an image video from a single image, as shown in the figure below.

Achieving this kind of generation requires extrapolating new content for unseen regions and synthesizing new details for existing regions as the camera approaches. Building infinitely generative scene models have potential applications in content creation, novel photo interactions, and methods that use learned models such as model-based reinforcement learning.

However, generating a long video from a still image is considered to be very difficult in two ways: video synthesis and image synthesis.

Modern video synthesis methods have a limited number of new frames, even when trained with large computational resources. These methods apply to the time domain or rely on recurrent models. However, these methods are insufficient as they often ignore important elements of the video structure. In reality, video is a function of both the underlying scene and the camera geometry. Proper geometry is critical to synthesizing a video camera sequence.

In addition, many view synthesis methods use geometry to synthesize high-quality views. However, these methods only work within a limited range of camera motion, and if the camera is too far away, the view will collapse. Successfully generating a distant view requires painting of hidden areas, extrapolation of unseen areas beyond the boundaries of the previous frame (outpainting), and adding detail in areas that have moved closer to the camera over time (super-resolution).

To address these challenges, we propose a hybrid framework that leverages both geometry and image composition techniques. Specifically, we encode a geometric scene using a disparity map and decompose the permanent view generation task into a render-refine-and-repeat framework. First, we render the current frame from a new viewpoint, using the parallax to ensure that the scene content moves in a geometrically correct manner. Next, we refine the recalculated image and geometry. This step involves inpainting, outpainting, adding details in areas that require super-resolution, and compositing the new content. Because we refine both the image and the parallax, the entire process can be repeated in an auto-regressive fashion, allowing for the persistent generation of new views.

To read more,

Please register with AI-SCHOLAR.

ORCategories related to this article