A Big Part Of The Latest AI Technology! Japan's Technological Strength

There is no doubt that 2020 was the year of AI, with many AI technologies being announced, implemented in society, and developed for implementation. The latest AI papers don't stop at being papers, and companies all over the world are looking for possibilities of social return and business opportunities.

This is where the "edge" comes into play in social implementation. I think many people have heard the term "edge AI" more often since 2020. If we can get all sorts of benefits from using edge AI type appliances, our lives will be richer!

There is a huge challenge here! In the cloud, it is possible to run large models. However, when processing at the edge, there is a limit to the size of the model that can be implemented, and if the cost of implementation is also high, social implementation is expected to be difficult.

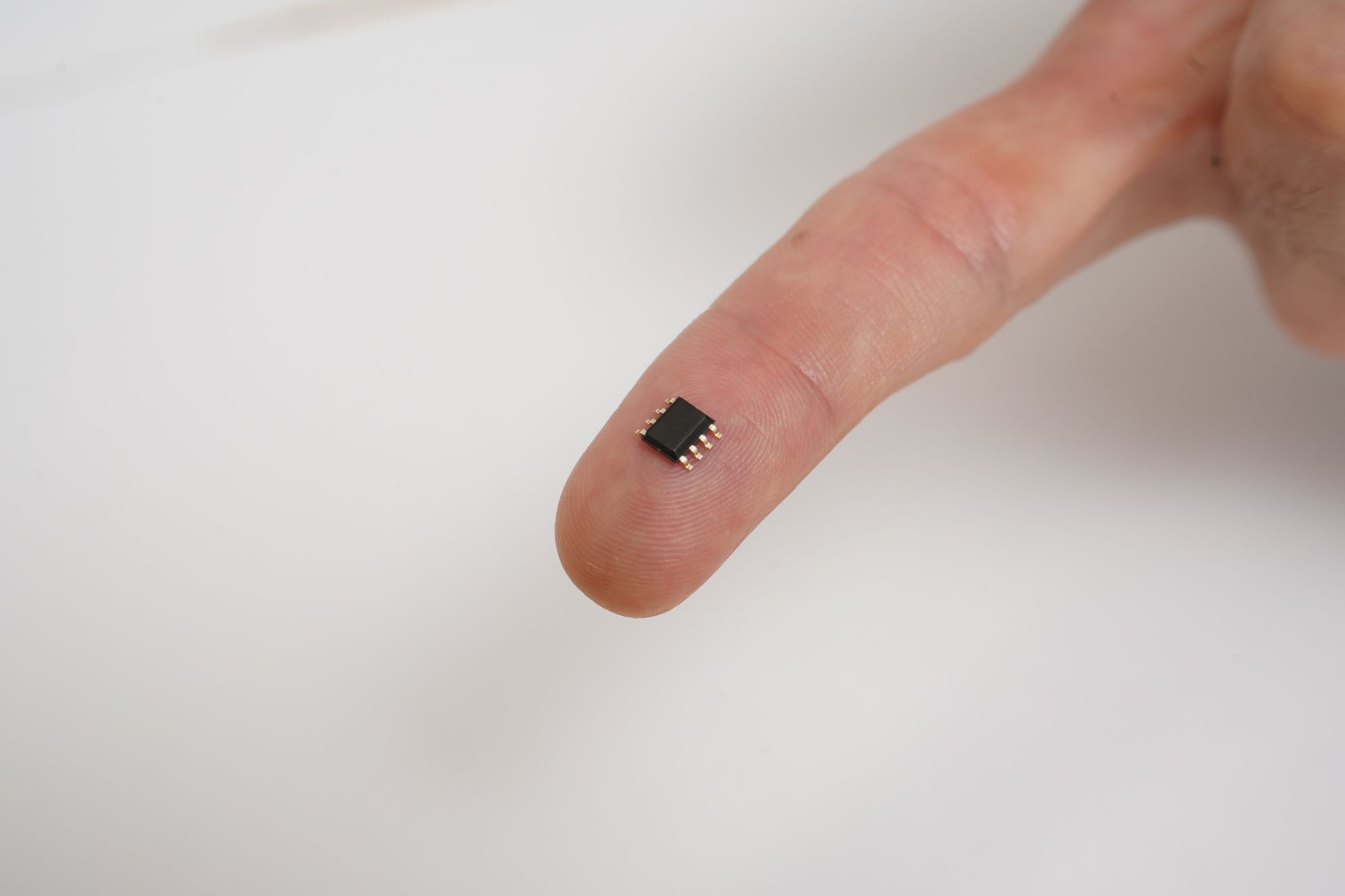

AISing Ltd. is an edge AI startup that has developed "Memory Saving Tree (MST)", an edge AI algorithm small enough to fit on a fingertip and an AI technology that even GAFA has not yet developed.

If you're not familiar with the technical part, you can read it here for a better understanding.

About Us

AISing is an AI venture founded in 2016.

AISing's proprietary AI algorithm, DBT (Deep Binary Tree), has won numerous startup awards by enabling sequential learning without the need for edge learning or tuning, which was impossible with existing AI algorithms such as deep learning.

We were selected as one of 92 candidates for Japanese unicorn companies (unlisted companies with a market capitalization of 100 billion yen or more) in J-Startup, a development program of the Ministry of Economy, Trade, and Industry.

On the business side, we have raised several hundred million yen in funding so far, we are forming alliances with major companies in Japan and abroad, and we are advancing our business toward a public listing.

As a specialist in edge AI, AISing is revolutionizing the industry.

What is a Memory Saving Tree (MST)?

It is a novel machine learning algorithm that is so ultra-memory-saving that it can be placed on your fingertips.

Image of the microcontroller with MST

This technology also inherits the characteristics of our flagship Deep Binary Tree (DBT) technology, the AI in Real-time (AiiR) series that we have been developing for a long time.

What are the same characteristics?

The same characteristics are what we call online machine learning, which allows for so-called additional learning.

It's not that no one in the world is working on online learning, but offline machine learning ends up being more accurate. Even if a model can be grown online, its baseline accuracy has not been very good, so this field of machine learning has not been very active.

So you're saying people didn't pay attention to it because offline learning is accurate enough that there was no need to bother learning online.

On the contrary, the reason our DBT is so highly regarded in this area is that we were able to bring the accuracy of online learning to nearly the same level as offline learning, or even better. That is the technology that triggered the establishment of our company.
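To make the idea of online (additional) learning concrete, here is a minimal sketch. It is not DBT or MST, whose internals are not described in this article. It assumes a hypothetical `OnlineBinRegressor` that splits an input range into a fixed binary partition and keeps a running mean in each leaf, so every new sample updates the model without storing past data.

```python
class OnlineBinRegressor:
    """Illustrative only: fixed-depth binary partition of [lo, hi).

    Each leaf keeps a running mean of the targets it has seen.
    This is an assumed toy model, not AISing's DBT or MST.
    """

    def __init__(self, lo: float, hi: float, depth: int) -> None:
        self.lo, self.hi = lo, hi
        self.n_leaves = 2 ** depth
        self.counts = [0] * self.n_leaves
        self.means = [0.0] * self.n_leaves

    def _leaf(self, x: float) -> int:
        # Map x to a leaf index, clamping inputs outside [lo, hi).
        frac = (x - self.lo) / (self.hi - self.lo)
        return min(self.n_leaves - 1, max(0, int(frac * self.n_leaves)))

    def update(self, x: float, y: float) -> None:
        # Incremental mean: one sample at a time, no stored history.
        i = self._leaf(x)
        self.counts[i] += 1
        self.means[i] += (y - self.means[i]) / self.counts[i]

    def predict(self, x: float) -> float:
        return self.means[self._leaf(x)]


if __name__ == "__main__":
    model = OnlineBinRegressor(lo=0.0, hi=1.0, depth=2)
    for x, y in [(0.1, 1.0), (0.2, 3.0), (0.9, 5.0)]:
        model.update(x, y)  # learning continues while the device runs
    print(model.predict(0.15), model.predict(0.95))
```

Memory use is fixed by `depth` and does not grow with the number of samples, which is the property that makes this style of learning attractive on small devices.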

DBT is also a lightweight implementation, but how lightweight depends on the standard you set. For example, on the Raspberry Pi*1 we achieved a lightweight implementation that used several tens of MB of memory.

*1 A single-board computer developed for educational purposes by the Raspberry Pi Foundation in the UK in 2012. With a low price of about $30, useful libraries, and many examples shared on the Internet by people around the world, it has become one of the leading platforms of the IoT era.

Implementation is difficult because of CPU and memory constraints, and I think it remains difficult today.

This new technology has almost the same accuracy as that achieved by DBT and our online machine learning, but with a memory footprint on the order of kilobytes, a few hundredths to a few thousandths of the previous size! As the name suggests, this is the key selling point of the technology.
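A rough calculation shows the scale involved. The sketch below assumes a baseline of 30 MB as a stand-in for the "several tens of MB" mentioned for DBT, and assumes reduction factors of 1/300 and 1/3000. Both figures are illustrative, not measured values from AISing.

```python
def reduced_footprint_kb(baseline_mb: float, divisor: float) -> float:
    """Footprint in KB after shrinking a baseline given in MB by `divisor`."""
    return baseline_mb * 1024 / divisor


if __name__ == "__main__":
    baseline_mb = 30  # assumed stand-in for "several tens of MB"
    for divisor in (300, 3000):  # assumed reduction factors
        kb = reduced_footprint_kb(baseline_mb, divisor)
        print(f"1/{divisor} of {baseline_mb} MB = {kb:.1f} KB")
```

Under these assumptions the result lands between roughly ten and a hundred kilobytes, which is the range where small microcontrollers operate.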

A kilo? Kilo...

What will change with this technology, and what is the scope of application?

Our existing DBT technology, like other technologies such as random forests and deep learning, requires a certain amount of computational power and consumes a certain amount of memory. So on something like the Raspberry Pi Zero, implementation becomes quite difficult.

For example, if you want to run deep learning on such a device, even once you've cleared the memory requirements you still need FPGAs or GPUs, and all the calculations have to finish within a few milliseconds. The hardware specifications end up being expensive, so you have to make an AI chip. At that point it becomes a technology strategy on the level of GAFA or Huawei, which would be difficult to pursue independently.

I think random forests can be computed in parallel, even on modest hardware.

It's true that random forests can be computed in parallel, but even if the CPU can cover the computational cost, the memory will still be tens to hundreds of MB. In the end they can't be used on low-end sensor devices that are even smaller than smartphones.
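A back-of-the-envelope estimate shows how a random forest reaches tens to hundreds of MB. The sketch below assumes full binary trees and a hypothetical 16-byte node layout (feature index, threshold, and two child indices at 4 bytes each). Real libraries use different layouts, so treat the numbers as an order-of-magnitude guide only.

```python
def forest_bytes(n_trees: int, depth: int, bytes_per_node: int = 16) -> int:
    """Upper-bound memory of a forest of full binary trees of the given depth.

    bytes_per_node=16 is an assumed layout, not taken from any library.
    """
    nodes_per_tree = 2 ** (depth + 1) - 1  # internal nodes plus leaves
    return n_trees * nodes_per_tree * bytes_per_node


if __name__ == "__main__":
    for depth in (12, 15):
        size_mib = forest_bytes(n_trees=100, depth=depth) / (1024 * 1024)
        print(f"100 trees, depth {depth}: {size_mib:.1f} MiB")
```

With 100 trees, depths of 12 and 15 come to roughly 12.5 MiB and 100 MiB, far beyond the few hundred KB available on a typical low-end microcontroller.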

On the other hand, you want to make low-end sensors more intelligent, don't you?

I'm sure there's a need for intelligence in these areas as well, but we've made it possible to put it on a wide range of devices where it wasn't possible before due to hardware limitations, and that's the biggest business point of this project.

So the scope is more low-end, and the point is that the range of application has expanded to areas where using AI was previously out of the question!

That's right. The point is that you can use existing hardware.

For example, the microcontroller already used in a customer's air conditioner or humidifier is sufficient in terms of performance.

Some people may misunderstand this, but it does not mean that the technology can only handle small devices. Rather, it means that it can handle even small devices. We have also confirmed that it can be implemented on Arm's smallest series, the Cortex-M0, so it can run on roughly 80 to 90% of the world's products.

For example, on a device with a high-spec CPU, it can take advantage of the extra computing power, so processing that takes milliseconds on a low-spec device can be done in microseconds.

So what was already high-performance has the potential to perform even better, right? Yes!

What strengths did you have in developing this technology?

The strength of our company is that we have researchers in deep learning, such as CNNs, RNNs, and other new algorithms, as well as professionals in various fields such as mathematics, statistics, and embedded implementation. In January 2020, we established the Algorithm Development Group (ADG). Now, because of the coronavirus pandemic, we meet online every other week to discuss all sorts of topics. And the driving force behind all this is an overwhelming aversion to losing!

How did you come to develop this technology?

I know it sounds funny, but it's true.

Three years ago, the term "edge AI" did not exist, so we marketed DBT as "embedded AI". At the time, deep learning was all the rage, and people were wondering what our technology was. One company called me and said, "Since it's embedded, it will fit in 256, right?" So I said, "Yes, we can do it." At that time, I thought they meant MB. I said, "256 MB is plenty!" They said, "No, no, KB." I thought, "That's 1/1000! No, that's impossible."

Embedded implementation technology is also one of our strengths, so we were a little frustrated. We decided to solve this problem, and after about a year and a half to two years of research and development, we succeeded. It took about a year to a year and a half just to reach the point where we could create a new algorithm.

Are there any problems with the online model?

It's great that performance can be improved by learning online. But on the other hand, can it also have a negative impact on performance?

And that's where our company excels!

We can build all kinds of algorithms, from CNNs to RNNs, from scratch in C with memory management and embedded systems in mind. However, that alone is not good enough. If you simply replace conventional control with a learned model, you will run into problems like the one in your question. I myself am originally a professional in machine control. Our company specializes in the combined domain of embedded systems, AI, and control, so we actually have a solution to the problem you are asking about.

In fact, I think that's the reason why OMRON and other companies have actually implemented this system and issued joint press releases. With our know-how, it's actually possible to implement conventional quality assurance or conventional control safety measures.

To put it simply, we have the know-how to add AI on top of the existing control, so we can make sure it gets no worse! It can get better, but not worse. To tell you the truth, the combined area of AI and machine control is quite a gap, and no one else in the world is doing it. Because we have been focusing on this area, we have been able to build intellectual property and technological superiority.

Some people know AI but not control, and others know control but not AI. Implementing control across that divide causes conflicts. Projects fail when they try to hand conventional control over to the AI. We are able to do this because we understand the pain points that control engineers have.
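The article does not disclose how AISing achieves this. One common general pattern for adding a learned component to an existing controller is to keep the conventional controller's output as the base and allow only a bounded correction on top of it. The sketch below assumes hypothetical names (`combined_command`, `max_correction`, `ai_enabled`) and is only an illustration of that pattern, not AISing's method.

```python
def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def combined_command(
    baseline_cmd: float,
    learned_correction: float,
    max_correction: float,
    cmd_min: float,
    cmd_max: float,
    ai_enabled: bool = True,
) -> float:
    """Conventional control output plus a bounded learned correction.

    All names and limits here are assumptions for illustration.
    """
    if not ai_enabled:
        # Fallback: behave exactly like the existing controller.
        return clamp(baseline_cmd, cmd_min, cmd_max)
    # The learned part may only nudge the command within a fixed band.
    correction = clamp(learned_correction, -max_correction, max_correction)
    # The existing actuator limits still apply to the final command.
    return clamp(baseline_cmd + correction, cmd_min, cmd_max)


if __name__ == "__main__":
    print(combined_command(10.0, 5.0, max_correction=1.0, cmd_min=0.0, cmd_max=100.0))
    print(combined_command(10.0, 5.0, 1.0, 0.0, 100.0, ai_enabled=False))
```

Bounding the correction does not by itself prove that performance never degrades, but it keeps the conventional safety limits in force and caps how far the learned part can move the command away from the existing control.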

That's the advantage of being able to cover the combined domains of control and AI, isn't it?

In reality, it's not such a big deal. It's just a difference between our literacy and the world's literacy.

MST Explanatory Video
