![[Materials Informatics] Successfully Exploiting The Potential Of Deep Learning Through Individual Residual Learning!](https://aisholar.s3.ap-northeast-1.amazonaws.com/media/June2021/individual_residual_learning-min.png)

[Materials Informatics] Successfully Exploiting The Potential Of Deep Learning Through Individual Residual Learning!

3 main points

✔️ Mitigate the vanishing/exploding gradient problem in deep learning with Individual Residual Learning (IRNet)

✔️ Apply IRNet to deep learning models that predict material properties from inputs such as chemical composition and crystal structure

✔️ Once vanishing/exploding gradients are avoided, simply making the network deeper improves prediction performance, provided the dataset is sufficiently large.

Enabling deeper learning on big data for materials informatics applications

Written by Dipendra Jha, Vishu Gupta, Logan Ward, Zijiang Yang, Christopher Wolverton, Ian Foster, Wei-keng Liao, Alok Choudhary & Ankit Agrawal

(Submitted on 19 February 2021)

Comments: Accepted by Scientific Reports (2021) 11:4244

Subjects: Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

Towards the application of deep learning to materials informatics...

In recent years, materials informatics (MI), which uses machine learning models to search for unknown materials with desired properties, has attracted much attention among materials researchers. MI draws its datasets from actual laboratory experiments and from the huge volume of results produced by DFT calculations.

(DFT here refers to first-principles calculations based on density functional theory, which are widely used in solid-state materials science. These calculations are grounded in quantum mechanics and quantum chemistry and provide theoretical insight into the states and properties of materials. Readers outside materials science may not be familiar with DFT, but it comes up often in MI, so it is worth knowing the basics.)

Machine learning can, for example, efficiently uncover correlations between a material's chemical composition and structure (input) and its properties (output). This tells us which composition and structure to aim for when we want a material with a particular property. MI commonly relies on conventional machine learning models such as random forests, support vector machines, and kernel regression, while the use of deep learning is still limited.

Deeper neural networks (NNs) can, in principle, achieve better prediction performance, but performance degrades when gradients vanish or explode during training. Changes to the activation function and to weight initialization have alleviated this problem, but it remains an issue for complex NNs with many layers.
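Why does depth make this worse? During backpropagation, the gradient reaching an early layer is a product of one local derivative per layer, so any systematic factor below or above 1 is compounded by the depth. The toy sketch below assumes a scalar chain in which every layer scales the gradient by the same factor; real networks multiply Jacobian matrices, so this only illustrates the trend.

```python
def gradient_through_chain(per_layer_factor: float, depth: int) -> float:
    """Gradient magnitude after backpropagating through `depth` layers in a toy
    scalar chain where every layer multiplies the gradient by `per_layer_factor`."""
    grad = 1.0
    for _ in range(depth):
        grad *= per_layer_factor
    return grad


# Depths matching the networks discussed in this article.
for depth in (10, 17, 48):
    vanishing = gradient_through_chain(0.5, depth)  # factor < 1: gradient shrinks
    exploding = gradient_through_chain(1.5, depth)  # factor > 1: gradient blows up
    print(f"depth={depth:2d}  factor 0.5 -> {vanishing:.2e}  factor 1.5 -> {exploding:.2e}")
```

At 48 layers, a per-layer factor of 0.5 leaves a gradient of roughly 10^-15, while a factor of 1.5 amplifies it by more than 10^8.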

Therefore, in this paper, we focus on improving the deep learning model itself to obtain better accuracy and robustness when training on big materials data. We construct deep NNs (DNNs) with 10 to 48 layers that take vector-based material features as input and predict material properties. The networks are built with individual residual learning (IRNet).

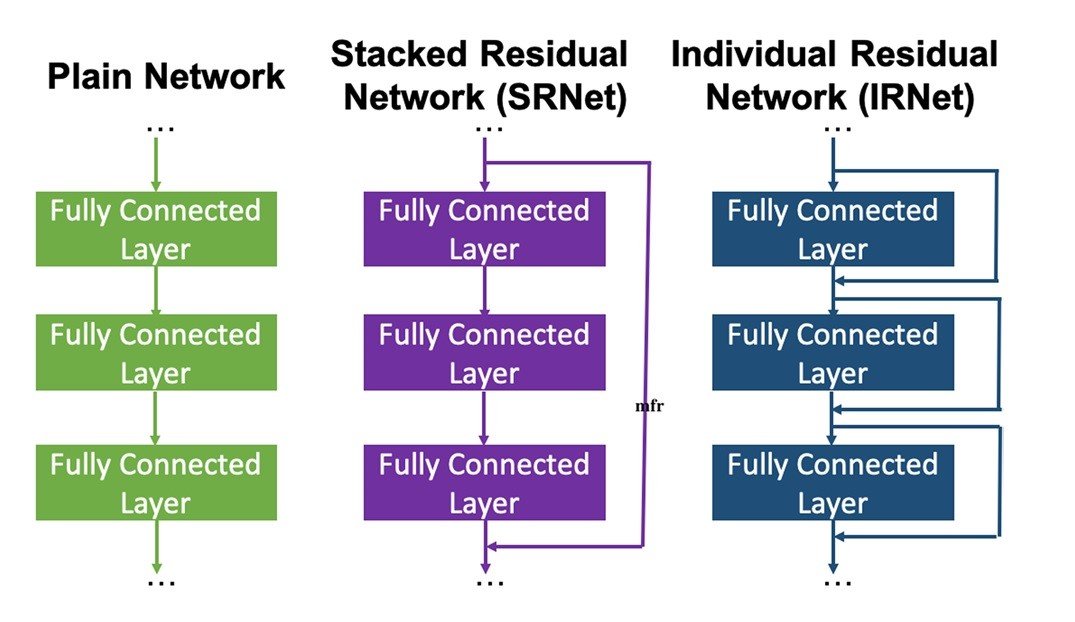

In residual learning, skip connections bypass groups of layers in the DNN, which mitigates vanishing and exploding gradients and makes training and convergence easier. This technique has traditionally been used for text and image classification; in this work, it is applied to a vector-based regression problem for predicting material properties. Moreover, whereas conventional residual learning places a skip connection every few layers, this work places one around every individual layer to further improve gradient flow and prediction performance.
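The three designs compared in this article differ only in where the shortcuts go. The sketch below lists which layers each skip connection spans; the block size used for the stacked variant is a hypothetical parameter, not a value taken from the paper. For an identity shortcut, a layer computes y = x + F(x), so its Jacobian is I + ∂F/∂x: the identity term gives the gradient a path back that does not pass through F.

```python
from typing import List, Tuple


def shortcut_spans(num_layers: int, layers_per_block: int) -> List[Tuple[int, int]]:
    """Return (first_layer, last_layer) indices covered by each skip connection.

    layers_per_block == 0 -> plain network: no shortcuts at all
    layers_per_block == 1 -> individual residual learning (IRNet-style): one per layer
    layers_per_block  > 1 -> stacked residual learning (SRNet-style): one per block
    """
    if layers_per_block < 0:
        raise ValueError("layers_per_block must be non-negative")
    if layers_per_block == 0:
        return []
    spans = []
    for start in range(0, num_layers, layers_per_block):
        end = min(start + layers_per_block, num_layers) - 1  # last block may be shorter
        spans.append((start, end))
    return spans


print("plain :", shortcut_spans(6, 0))
print("IRNet :", shortcut_spans(6, 1))
print("SRNet :", shortcut_spans(6, 3))  # hypothetical block size of 3
```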

Dataset

As inputs, we used computed materials data from the following databases.

- OQMD (Open Quantum Materials Database) → Uses 341,443 chemical compositions and their DFT-computed properties (enthalpy of formation, band gap, energy per atom, energy per unit volume, etc.). In addition, uses the compositions and crystal structures of 435,582 compounds from OQMD-SC.

- AFLOWLIB (Automatic Flow of Materials Discovery Library) → Uses the composition, formation energy, volume, density, energy per atom, and band gap of 234,299 compounds.

- MP (Materials Project) → Uses the composition, band gap, density, volume, energy per atom, energy above the convex hull, and magnetization of 83,989 inorganic compounds.

- JARVIS (Joint Automated Repository for Various Integrated Simulations) → Uses the composition, formation energy, band gap, volume, and shear modulus of 19,994 compounds (downloaded via Matminer).

- Matminer (an open-source materials data mining toolkit) → Uses the band gaps of 10,956 semiconductor compounds.

Model building

In this work, we use a fully connected NN with material descriptors as inputs. Each fully connected layer is followed by batch normalization and a ReLU activation function.

Because a fully connected NN contains a huge number of parameters, we added a skip connection around each individual layer (individual residual learning) to improve gradient flow and to make training and convergence feasible within GPU memory. With this IRNet, we predicted the enthalpy of formation (the enthalpy change when a compound forms from its constituent elements, which indicates how readily the compound forms under given conditions) from the features of each material. The input features consist of 145 attributes derived from the chemical composition and 126 attributes derived from the structure, such as the crystal structure.
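A minimal pure-Python sketch of one IRNet-style unit follows: a fully connected layer, batch normalization, and ReLU, wrapped in its own skip connection. Several details are assumptions rather than facts from the article: the shortcut is added after the ReLU, a bias-free linear projection (`proj`, a hypothetical parameter) handles width changes, and batch normalization uses mini-batch statistics without learned scale and shift. A real model would be written in a deep learning framework.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]  # a mini-batch: one row per sample, one column per feature


def linear(batch: Matrix, weights: Matrix, bias: List[float]) -> Matrix:
    """Fully connected layer: out[i][j] = sum_k batch[i][k] * weights[k][j] + bias[j]."""
    return [
        [sum(row[k] * weights[k][j] for k in range(len(row))) + bias[j]
         for j in range(len(bias))]
        for row in batch
    ]


def batch_norm(batch: Matrix, eps: float = 1e-5) -> Matrix:
    """Training-mode batch normalization: standardize each feature over the mini-batch.

    Learned scale/shift parameters are omitted to keep the sketch short.
    """
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        mean = sum(row[j] for row in batch) / n
        var = sum((row[j] - mean) ** 2 for row in batch) / n
        for i in range(n):
            out[i][j] = (batch[i][j] - mean) / math.sqrt(var + eps)
    return out


def relu(batch: Matrix) -> Matrix:
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in batch]


def irnet_unit(batch: Matrix, weights: Matrix, bias: List[float],
               proj: Optional[Matrix] = None) -> Matrix:
    """One FC -> BN -> ReLU layer wrapped in its own skip connection.

    Assumed (not stated in the article): the shortcut is added after ReLU, and a
    bias-free linear projection `proj` is used when the layer changes the width.
    """
    out = relu(batch_norm(linear(batch, weights, bias)))
    if proj is None:
        if len(out[0]) != len(batch[0]):
            raise ValueError("a width-changing layer needs a projection shortcut")
        shortcut = batch  # identity shortcut
    else:
        shortcut = linear(batch, proj, [0.0] * len(proj[0]))
    return [[o + s for o, s in zip(out_row, sc_row)]
            for out_row, sc_row in zip(out, shortcut)]


x = [[1.0, 2.0], [3.0, 4.0]]  # mini-batch of 2 samples with 2 features
y = irnet_unit(x, weights=[[1.0, 0.0], [0.0, 1.0]], bias=[0.0, 0.0])
print(y)
```

Stacking such units, one per layer, gives the individual residual layout; a plain network is the same stack with the final addition removed.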

The dataset was split 9:1 into a training set and a validation set.
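The article states only the 9:1 ratio. The sketch below assumes a random shuffle with a fixed seed for reproducibility; the paper's actual splitting procedure may differ.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_train_val(samples: Sequence[T], train_fraction: float = 0.9,
                    seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle sample indices and split them into training and validation sets (9:1 by default)."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)  # seeded so the split is reproducible
    cut = round(train_fraction * len(samples))
    train = [samples[i] for i in indices[:cut]]
    val = [samples[i] for i in indices[cut:]]
    return train, val


train, val = split_train_val(list(range(100)))
print(len(train), len(val))  # 90 10
```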

For comparison, we also trained a "plain network", which contains no shortcuts, and an "SRNet" (stacked residual network), which places a skip connection around each stack of several layers, as in conventional residual learning.

For the hyperparameters, we chose the Adam optimizer, a mini-batch size of 32, a learning rate of 0.0001, and the mean absolute error (MAE) as the loss function. No activation function was applied to the final regression layer.
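The training configuration can be sketched in plain Python as follows. The batch size, learning rate, MAE loss, and activation-free output layer come from the article; the Adam betas and epsilon are the common defaults and are assumed here, as are the helper names. Leaving the output layer linear matters for this task because formation enthalpies are often negative, and a final ReLU would clip every negative prediction to zero.

```python
import math
from typing import List

BATCH_SIZE = 32        # mini-batch size reported in the article
LEARNING_RATE = 1e-4   # learning rate reported in the article


def mae(predictions: List[float], targets: List[float]) -> float:
    """Mean absolute error, used as the training loss."""
    return sum(abs(p - t) for p, t in zip(predictions, targets)) / len(targets)


def mae_grad(predictions: List[float], targets: List[float]) -> List[float]:
    """Subgradient of the MAE with respect to each prediction: sign(p - t) / n."""
    n = len(targets)
    return [((p > t) - (p < t)) / n for p, t in zip(predictions, targets)]


def regression_output(hidden: List[float], weights: List[float], bias: float) -> float:
    """Final regression layer: a linear combination with no activation function."""
    return sum(h * w for h, w in zip(hidden, weights)) + bias


class Adam:
    """Textbook Adam update; beta1, beta2 and eps are assumed defaults."""

    def __init__(self, n_params: int, lr: float = LEARNING_RATE,
                 beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # first-moment (mean) estimates
        self.v = [0.0] * n_params  # second-moment (uncentered variance) estimates
        self.t = 0                 # step counter for bias correction

    def step(self, params: List[float], grads: List[float]) -> None:
        """Update `params` in place from one mini-batch of gradients."""
        self.t += 1
        for i, g in enumerate(grads):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            params[i] -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


print(mae([1.0, 2.0, 3.0], [1.0, 1.0, 1.0]))            # 1.0
print(regression_output([1.0, -2.0], [0.5, 0.5], 0.0))  # -0.5: negative outputs are allowed
```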

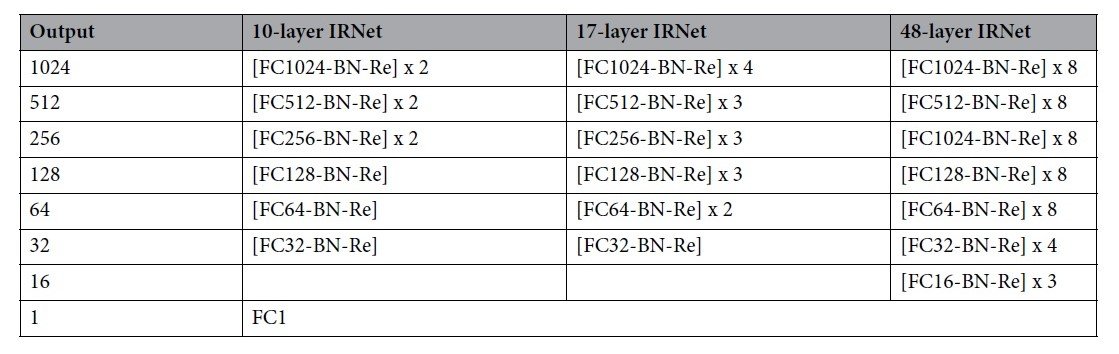

IRNet was built with 10, 17, and 48 layers; the configurations are shown in the figure.

(FC: Fully connected layer, BN: Batch normalization, Re: ReLU activation function)

Results and Discussion

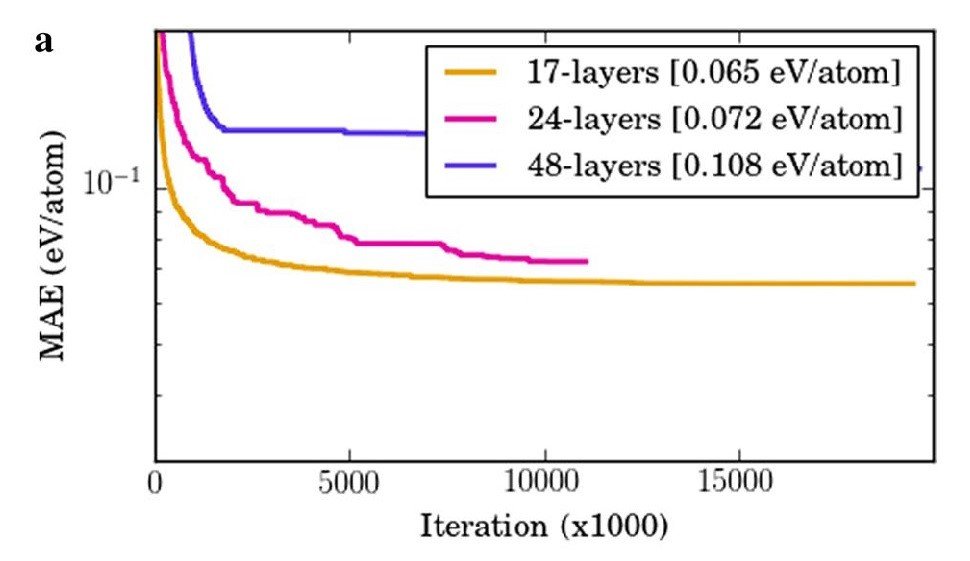

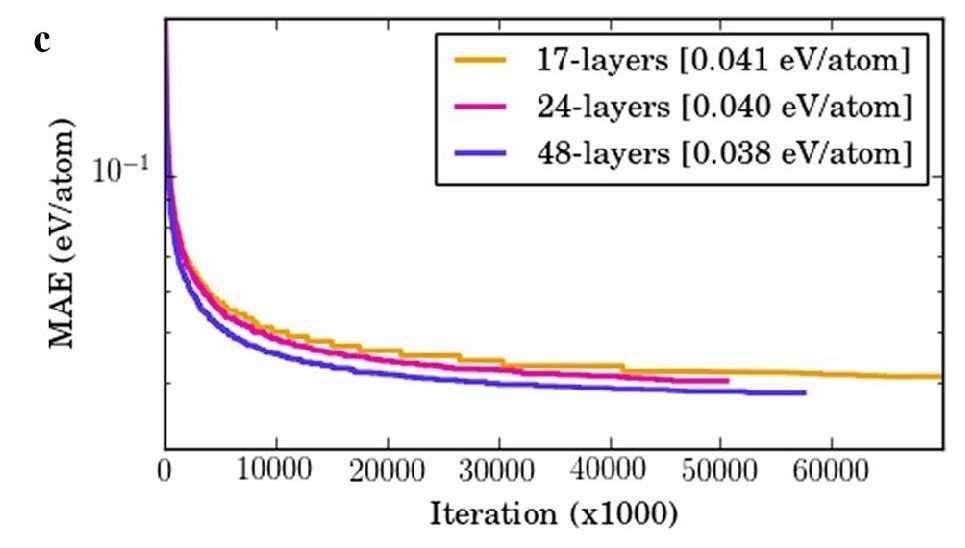

The results of training each network (Plain, SRNet, and IRNet) to predict the enthalpy of formation are as follows.

a) With the plain network, the mean absolute error (MAE) grew as the network got deeper, despite batch normalization and ReLU.

This is attributed to vanishing and exploding gradients.

b) In SRNet, by contrast, the MAE decreased as the network got deeper.

c) IRNet performed better still than SRNet.

These results suggest that residual learning with finely placed skip connections (one around every layer) effectively avoids vanishing and exploding gradients.

We also compared the prediction performance with traditional machine learning algorithms (linear regression, SGD regression, ElasticNet, AdaBoost, ridge regression, RBF-kernel SVM, decision tree, random forest, etc.). The best of these, random forest, had an MAE of 0.072 eV/atom, whereas the 48-layer IRNet had an MAE of 0.0382 eV/atom, so IRNet reduced the prediction error by about 47% relative to the traditional models.
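The percentage follows directly from the two MAE values reported in this comparison; a quick check in Python:

```python
rf_mae = 0.072        # best traditional model (random forest), eV/atom
irnet48_mae = 0.0382  # 48-layer IRNet, eV/atom

ratio = irnet48_mae / rf_mae        # share of the baseline error that remains
reduction_pct = (1 - ratio) * 100   # relative error reduction, in percent
print(f"remaining error: {ratio:.1%}, reduction: {reduction_pct:.1f}%")
# remaining error: 53.1%, reduction: 46.9%
```

In other words, IRNet's error is about 53% of the random forest's, a reduction of roughly 47%.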

Chemical composition and chemical structure (crystal structure, etc.) are both important features for predicting material properties. We therefore investigated, across the various databases, how prediction performance for different properties changes depending on whether composition-derived features, structure-derived features, or both are given as input.
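To make "features derived from chemical composition" concrete, the sketch below turns a simple formula into a fixed-length vector of atomic fractions, the most basic kind of vector input a fully connected network can consume. This is an illustration only: the article does not list the 145 composition-derived features the paper uses, and the element list here is a hypothetical, truncated one. Structure-derived features would be computed separately and concatenated to such a vector for the combined setting.

```python
import re
from typing import Dict, List

# An element symbol followed by an optional (possibly decimal) count, e.g. "Fe2", "O", "Li0.5".
TOKEN = re.compile(r"([A-Z][a-z]?)(\d+(?:\.\d+)?)?")


def element_fractions(formula: str) -> Dict[str, float]:
    """Atomic fraction of each element in a simple formula such as 'Fe2O3'.

    Parentheses, hydrates and charges are not handled in this sketch.
    """
    counts: Dict[str, float] = {}
    for symbol, amount in TOKEN.findall(formula):
        counts[symbol] = counts.get(symbol, 0.0) + (float(amount) if amount else 1.0)
    total = sum(counts.values())
    return {symbol: n / total for symbol, n in counts.items()}


def fraction_vector(formula: str, elements: List[str]) -> List[float]:
    """Fixed-length feature vector: one atomic-fraction slot per element in `elements`."""
    fractions = element_fractions(formula)
    unknown = set(fractions) - set(elements)
    if unknown:
        raise ValueError(f"elements not in vocabulary: {sorted(unknown)}")
    return [fractions.get(el, 0.0) for el in elements]


ELEMENTS = ["H", "Li", "O", "Na", "Cl", "Fe"]  # hypothetical, truncated vocabulary
print(fraction_vector("Fe2O3", ELEMENTS))  # [0.0, 0.0, 0.6, 0.0, 0.0, 0.4]
```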

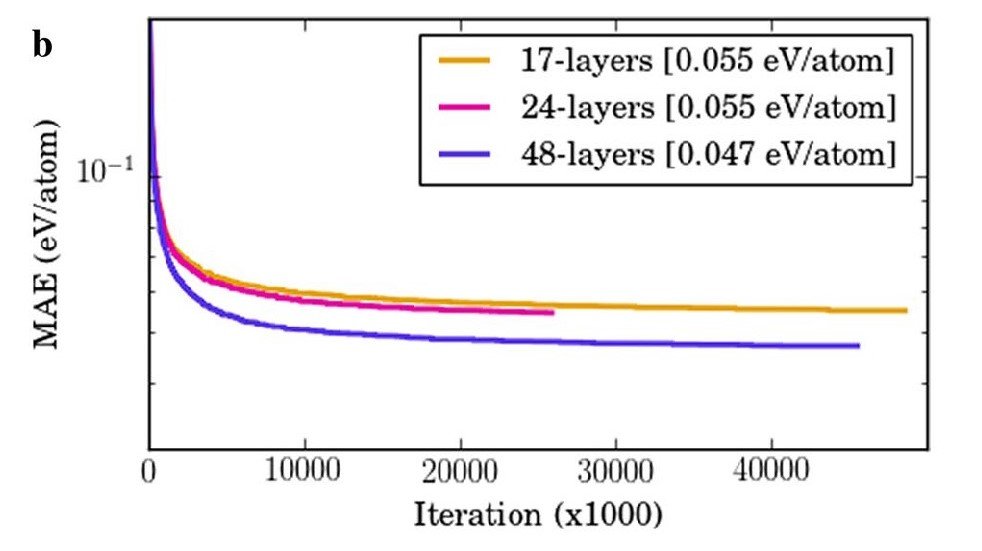

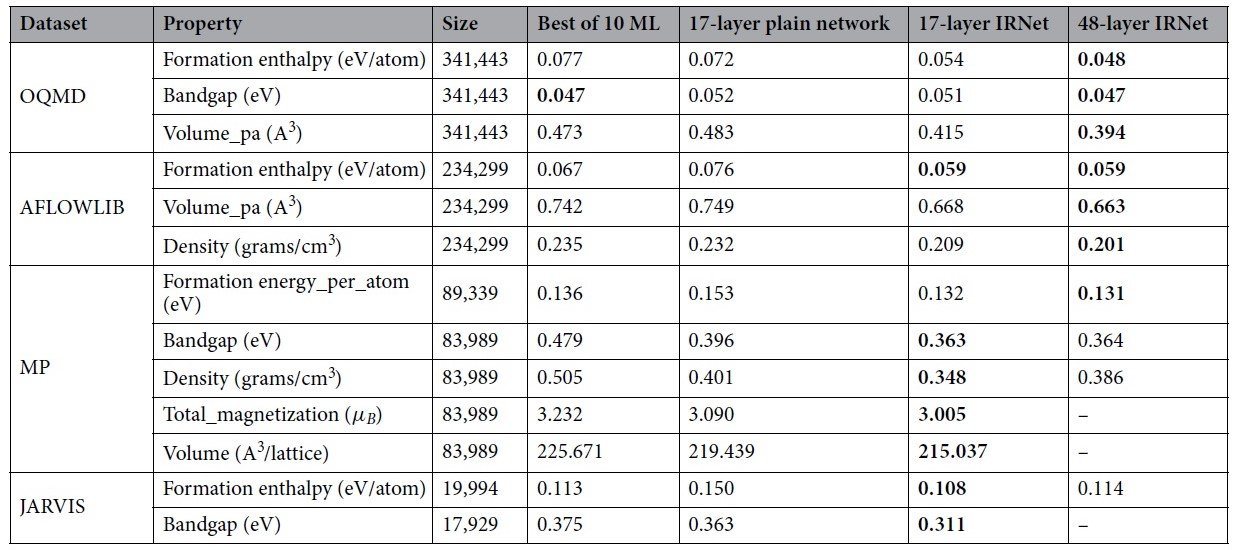

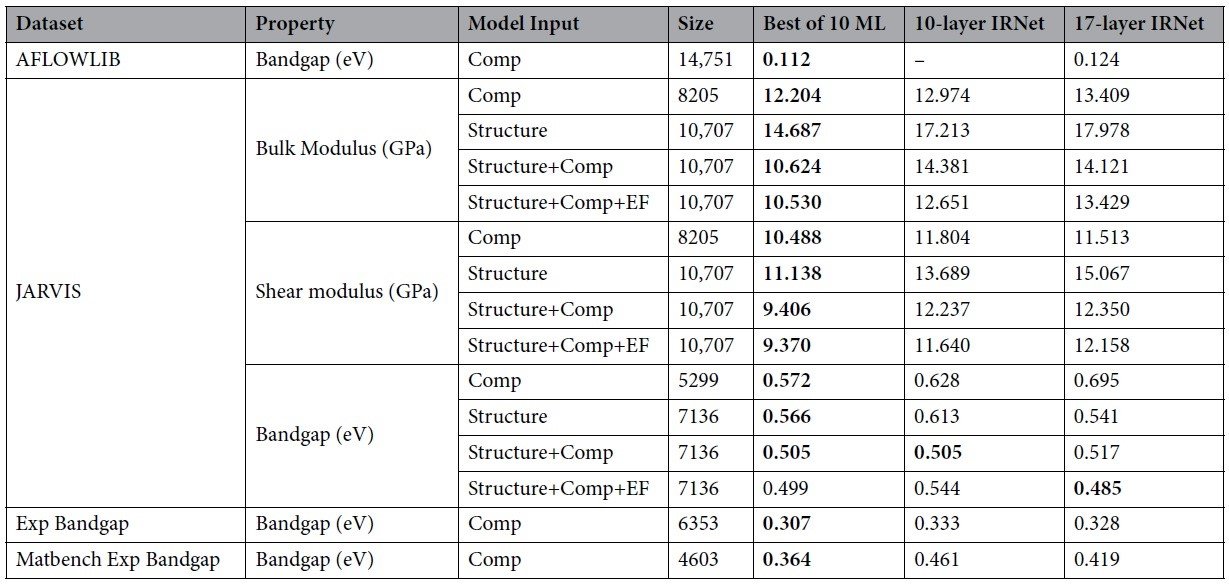

First, we look at the case where the input features are derived from chemical composition.

We compare the MAE of PlainNet, IRNet, and the best-performing traditional machine learning method. Bold text indicates the best performance.

For every property and dataset, the 17-layer IRNet outperforms both PlainNet and the conventional methods, which demonstrates the power of deep learning and the importance of skip connections. The deeper 48-layer IRNet is generally better than the 17-layer one, but on the smaller MP and JARVIS datasets the 17-layer network performs better. A likely reason is that the 48-layer network has too many parameters relative to the number of training samples and overfits.

The dataset size should therefore be taken into account when choosing the network depth. Larger datasets, in turn, are what allow the full potential of deep learning to be exploited.
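One way to make the overfitting argument concrete is to compare the number of trainable weights with the number of training samples. The sketch counts parameters for a stack of FC + BN layers with a linear output layer. The layer widths in the usage example are hypothetical placeholders, not the paper's configurations, identity shortcuts are assumed to add no parameters, and the dataset sizes are the totals listed in the dataset section (per-property counts may be smaller).

```python
from typing import List


def trainable_params(input_dim: int, widths: List[int], output_dim: int = 1) -> int:
    """Trainable parameters of stacked FC + BN layers followed by a linear output layer.

    FC layer: in * out weights + out biases. BN layer: out scales + out shifts
    (running mean/variance are not trainable and are not counted).
    Identity skip connections add no parameters.
    """
    total, prev = 0, input_dim
    for width in widths:
        total += prev * width + width  # fully connected weights + biases
        total += 2 * width             # batch-norm gamma + beta
        prev = width
    return total + prev * output_dim + output_dim  # regression layer


# Hypothetical widths: 16 or 47 hidden layers of width 256, plus the output layer.
shallow = [256] * 16
deep = [256] * 47
dataset_sizes = {"JARVIS": 19994, "MP": 83989, "OQMD": 341443}

for name, widths in (("shallow", shallow), ("deep", deep)):
    n_params = trainable_params(145, widths)  # 145 composition-derived input features
    for dataset, n_samples in dataset_sizes.items():
        n_train = int(0.9 * n_samples)        # 9:1 split
        print(f"{name:7s} {dataset:6s} params/sample = {n_params / n_train:.1f}")
```

The ratio of parameters to training samples is largest for the smallest dataset, which is consistent with the deeper network overfitting there first.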

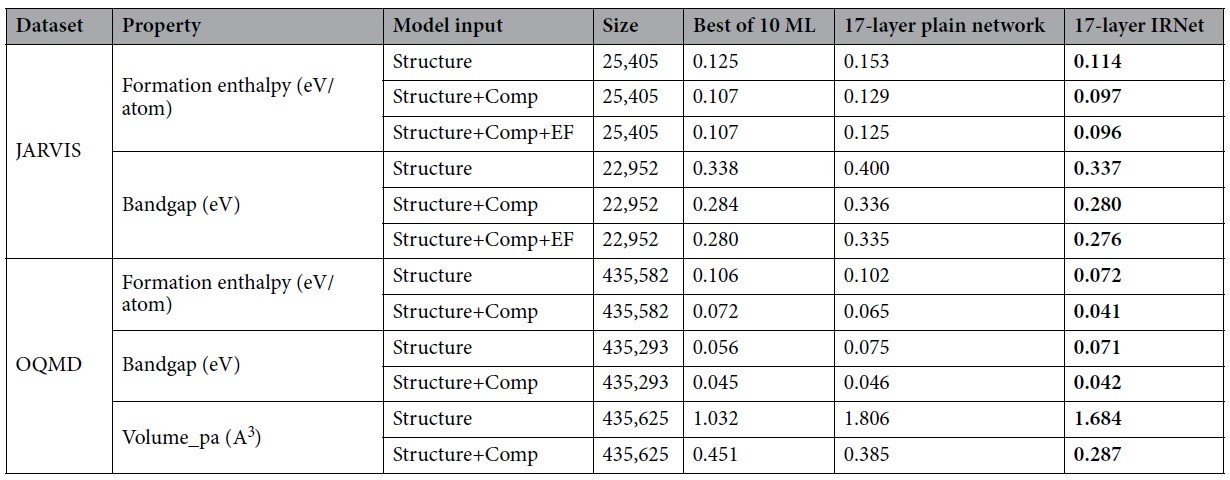

Next, we look at the cases where the input features are derived from chemical structure alone, and from chemical composition plus chemical structure.

Again, we compare the MAE of PlainNet, IRNet, and the best-performing conventional machine learning method; bold indicates the best performance. EF appears to denote features derived from the elements that make up the material.

Structure-derived features alone (Structure) do not perform well, but adding composition-derived features (Comp) to them improves performance dramatically.

We also find that PlainNet is inferior to the conventional method on JARVIS, which is a smaller dataset. IRNet, however, still outperforms the conventional method, suggesting that IRNet is better at capturing material properties from the input features.

We also examined datasets even smaller than those used in the experiments above.

When the dataset is small, IRNet performs worse than the traditional methods.

In short, predicting material properties with deep learning models such as IRNet requires a sufficiently large dataset.

Summary

In this paper, we proposed IRNet, which effectively avoids the vanishing and exploding gradient problem in deep NNs by placing a skip connection around every individual layer. We applied IRNet to the prediction of material properties in materials informatics and showed that deep learning can be used for this task. We also found that the larger the dataset and the deeper the network, the smaller the prediction error.

In the future, deep learning models such as IRNet are expected to make it possible to screen vast numbers of candidate materials far more quickly.
