"UI-Diffuser": UI Prototyping Using Generative AI

3 main points

✔️ Generates UI images using generative AI to streamline UI design

✔️ Automatically generates UI from UI components and text

✔️ At present, most useful as a tool for giving UI designers ideas

Boosting GUI Prototyping with Diffusion Models

written by Jialiang Wei, Anne-Lise Courbis, Thomas Lambolais, Binbin Xu, Pierre Louis Bernard, Gérard Dray

(Submitted on 9 Jun 2023)

Subjects: Software Engineering (cs.SE); Artificial Intelligence (cs.AI); Computer Vision and Pattern Recognition (cs.CV)

Comments: Accepted for The 31st IEEE International Requirements Engineering Conference 2023, RE@Next! track

code:

The images used in this article are from the paper, the introductory slides, or were created based on them.

Summary

This paper proposes a mobile UI generation model.

The mobile UI is an important factor in user engagement, shaping how familiar and easy to use an app feels. Given two similar apps, you would probably choose the one with a modern, attractive design over one that looks dated. However, working out from scratch which UI works best takes a great deal of time and effort when developing an app.

To support UI design, this paper therefore proposes "UI-Diffuser," which automatically generates UI prototypes using generative AI (Stable Diffusion), a technology that has advanced remarkably in recent years.

UI-Diffuser generates a UI design from nothing more than a short text prompt and a set of UI components. Anyone who has used ChatGPT, the generative AI that has been in the news since 2022, will know that having a generative AI produce several candidates and then refining them is far easier than coming up with ideas from scratch.

This article describes how this "UI-Diffuser" works and its usefulness.

What is "UI-Diffuser"?

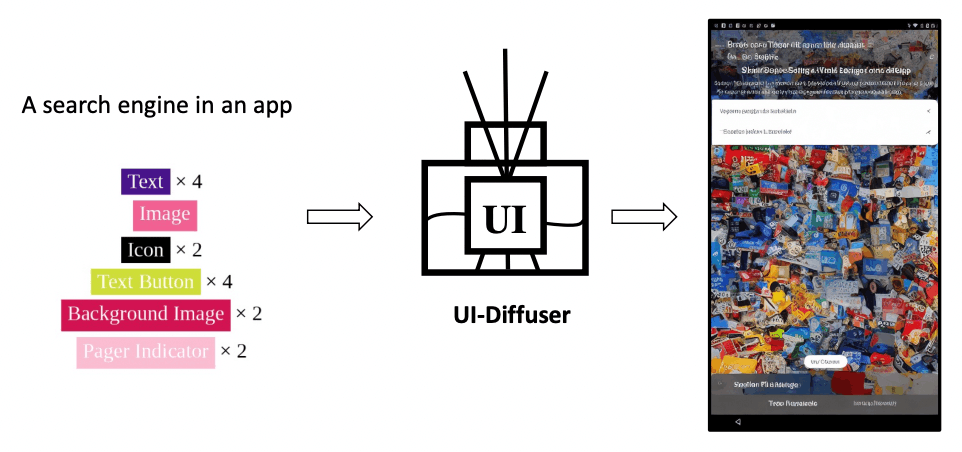

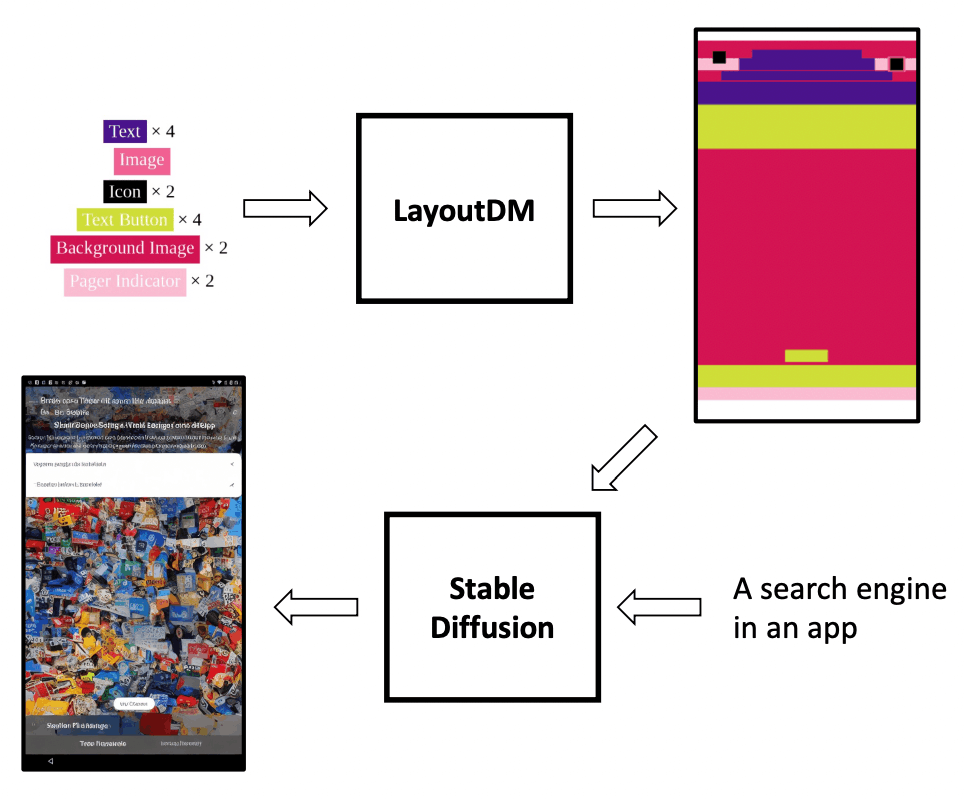

An overview of "UI-Diffuser" is shown in the figure below. It consists of two steps. In the first step, you input UI components (text, icon, button, image), and a diffusion model generates a plausible layout from them. In the second step, Stable Diffusion generates the UI design by combining the layout from the first step with a text prompt (e.g., "A search engine in an app").
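The two-step structure can be sketched as a composition of two stages. This is a minimal Python sketch under stated assumptions. `Element`, `generate_ui`, `stacked_layout`, and `describe_image` are hypothetical names. The two placeholder stages stand in for the layout model and the Stable Diffusion/ControlNet model, so only the data flow is shown.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Element:
    category: str  # e.g. "text", "icon", "button", "image"
    x: float       # left edge, as a fraction of screen width
    y: float       # top edge, as a fraction of screen height
    w: float       # width, as a fraction of screen width
    h: float       # height, as a fraction of screen height


# Hypothetical stage signatures: a layout model maps component categories to
# positioned elements; an image model maps a layout plus a prompt to a UI image.
LayoutModel = Callable[[List[str]], List[Element]]
ImageModel = Callable[[List[Element], str], object]


def generate_ui(components: List[str], prompt: str,
                layout_model: LayoutModel, image_model: ImageModel):
    """Two-step generation: components -> layout, then (layout, prompt) -> UI image."""
    layout = layout_model(components)   # step 1: layout generation
    return image_model(layout, prompt)  # step 2: layout- and text-conditioned generation


def stacked_layout(components: List[str]) -> List[Element]:
    """Placeholder layout model that simply stacks components vertically."""
    n = len(components)
    return [Element(c, 0.05, i / n, 0.9, 1 / n) for i, c in enumerate(components)]


def describe_image(layout: List[Element], prompt: str) -> str:
    """Placeholder image model that returns a text description instead of pixels."""
    return f"{prompt}: " + ", ".join(e.category for e in layout)


print(generate_ui(["text", "button", "image"], "A search engine in an app",
                  stacked_layout, describe_image))
# prints: A search engine in an app: text, button, image
```

Swapping in real models only changes the two callables. `generate_ui` itself stays the same.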

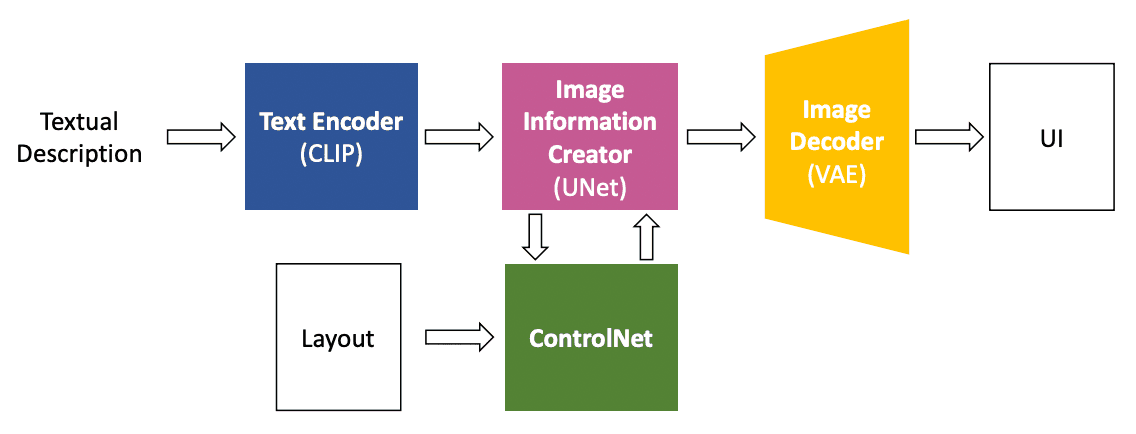

The first step, layout generation, uses a technique called "LayoutDM," presented by CyberAgent in 2023 (arXiv, blog). Given the specified components, it generates a structured UI layout that takes into account factors such as the category, size, and position of each component and the relationships between components. The second step, UI design generation, uses an architecture consisting of a Text Encoder (CLIP), an Image Information Creator (UNet), an Image Decoder (VAE), and ControlNet.
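LayoutDM treats layout generation as a discrete diffusion problem over tokens. The sketch below assumes a representation along those lines: each element becomes five tokens (category, x, y, w, h), with the geometry quantized into bins. The 32-bin resolution and all function names are illustrative assumptions, and the denoising model itself is omitted. The sketch shows how fixing the category tokens and masking the geometry tokens turns "generate a layout for these components" into a fill-in-the-blanks task.

```python
from typing import List, Optional, Sequence, Tuple

CATEGORIES = ["text", "icon", "button", "image"]  # component types named in the article
NUM_BINS = 32                                     # quantization resolution (arbitrary choice)
MASK: Optional[int] = None                        # marks a token the model must predict

Element = Tuple[str, float, float, float, float]  # (category, x, y, w, h), coordinates in [0, 1]


def quantize(v: float, bins: int = NUM_BINS) -> int:
    """Map a coordinate in [0, 1] to one of `bins` discrete bins."""
    return min(int(v * bins), bins - 1)


def dequantize(b: int, bins: int = NUM_BINS) -> float:
    """Map a bin index back to the center of that bin."""
    return (b + 0.5) / bins


def encode(elements: Sequence[Element]) -> List[int]:
    """Flatten a layout into five tokens per element: category, x, y, w, h."""
    tokens: List[int] = []
    for cat, x, y, w, h in elements:
        tokens.append(CATEGORIES.index(cat))
        tokens.extend(quantize(v) for v in (x, y, w, h))
    return tokens


def category_conditioned_input(categories: Sequence[str]) -> List[Optional[int]]:
    """Keep the requested categories fixed and mask every geometry token."""
    tokens: List[Optional[int]] = []
    for cat in categories:
        tokens.append(CATEGORIES.index(cat))
        tokens.extend([MASK] * 4)
    return tokens


print(encode([("button", 0.0, 0.5, 0.25, 0.1)]))  # [2, 0, 16, 8, 3]
print(category_conditioned_input(["text", "image"]))
```

A trained model would then predict the masked positions, and `dequantize` would map the predicted bins back to coordinates.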

Given the layout generated by LayoutDM and a text prompt, this architecture generates an image of the UI design. First, the Text Encoder (CLIP) converts the text prompt into token embeddings. Next, the Image Information Creator (UNet) generates an image embedding conditioned on those token embeddings. Finally, the Image Decoder (VAE) decodes it into the UI design image. To support additional input conditions such as the layout, ControlNet is attached to the UNet, and the layout generated by LayoutDM is fed into this ControlNet.
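ControlNet takes its extra condition as an image, so the layout has to be rasterized first. This is a minimal sketch, assuming each component is drawn as a filled box whose color encodes its category. The color mapping, the fractional (x, y, w, h) coordinates, and `render_layout` are hypothetical choices, not UI-Diffuser's actual encoding.

```python
from typing import Dict, List, Sequence, Tuple

RGB = Tuple[int, int, int]

# Hypothetical category-to-color mapping; the encoding used by UI-Diffuser may differ.
CATEGORY_COLORS: Dict[str, RGB] = {
    "text": (255, 0, 0),
    "icon": (0, 255, 0),
    "button": (0, 0, 255),
    "image": (255, 255, 0),
}
BACKGROUND: RGB = (0, 0, 0)


def render_layout(elements: Sequence[Tuple[str, float, float, float, float]],
                  width: int, height: int) -> List[List[RGB]]:
    """Rasterize (category, x, y, w, h) boxes, given as screen fractions, into a pixel grid.

    Later elements are painted over earlier ones, so overlaps show the top element.
    """
    img = [[BACKGROUND] * width for _ in range(height)]
    for cat, x, y, w, h in elements:
        color = CATEGORY_COLORS[cat]
        x0, y0 = int(x * width), int(y * height)
        x1 = min(int((x + w) * width), width)
        y1 = min(int((y + h) * height), height)
        for row in range(y0, y1):
            for col in range(x0, x1):
                img[row][col] = color
    return img
```

In a real pipeline, this grid would be converted to an image (for example, with Pillow). It would then be passed as the ControlNet conditioning input alongside the CLIP-encoded prompt.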

How useful is the UI-Diffuser?

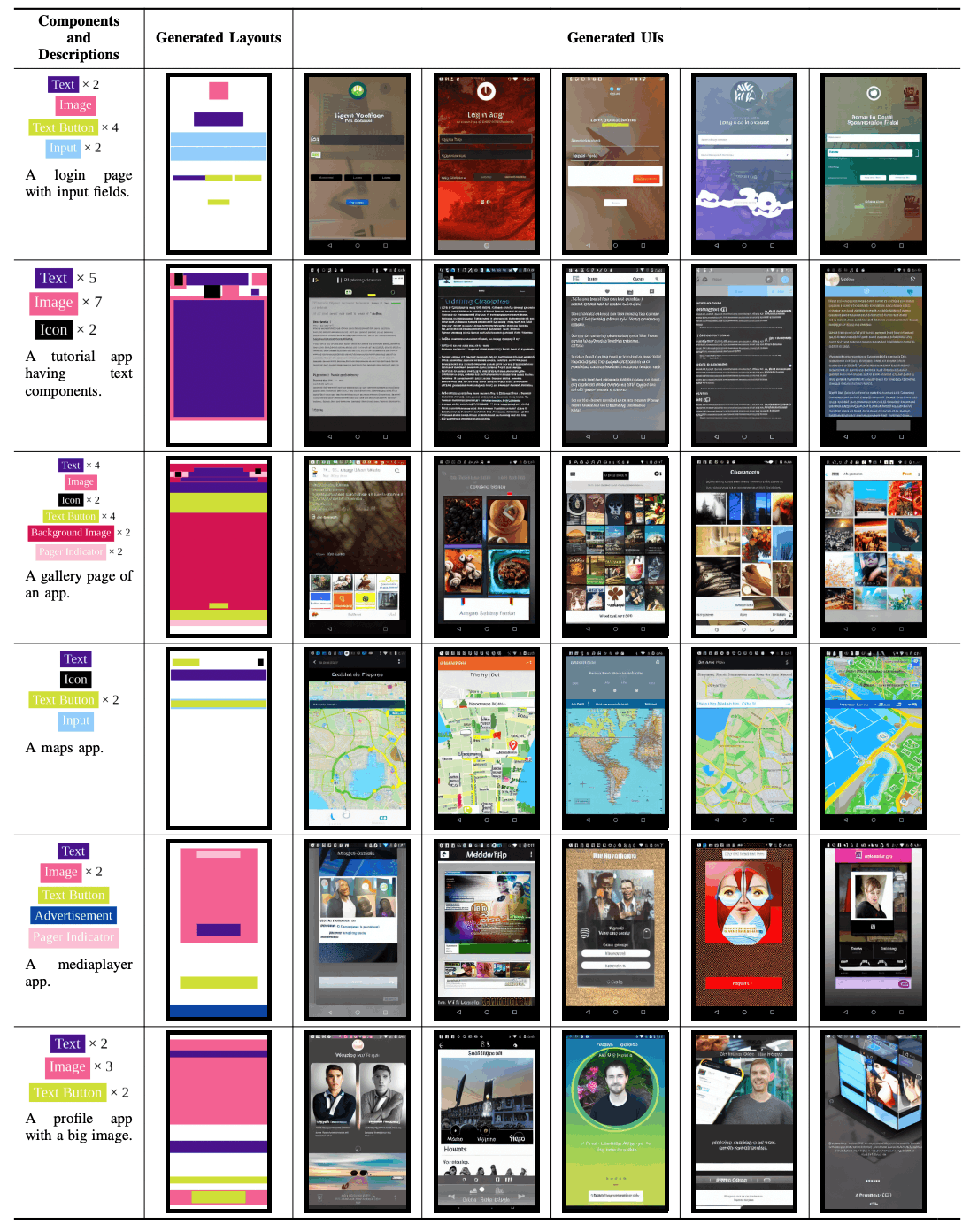

The table above shows sample UI designs generated by "UI-Diffuser." The first column, "Components and Descriptions," shows the UI components and text prompts given to UI-Diffuser. The second column shows the layouts generated in the first step. The third column, "Generated UIs," shows samples of the final UI designs.

At first glance, the UI designs generated by "UI-Diffuser" appear to be of high quality, but a closer look reveals that some UI components are missing and others are poorly designed. For example, the "Advertisement" component is ignored in the "Generated UIs" in row 5 of the table above. These samples suggest that UI-Diffuser is currently better suited as a tool that provides ideas and inspiration to UI designers than as a fully functional UI prototyping tool.

Summary

This paper proposes UI-Diffuser, which automatically generates images of UI designs from UI components and simple text prompts. The demonstration showed its usefulness. While the tool cannot yet fully replace UI design work, it shows potential as a prototyping tool that can provide ideas and inspiration to UI designers. However, it also has problems, such as input UI components missing from the generated images and poor design quality in places, so further improvement is needed.

The research team intends to evaluate UI-Diffuser comprehensively. They plan to first build a benchmark and then investigate three key aspects: the designability of the generated UIs, their consistency with the input UI components, and their consistency with the text descriptions.
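One way to measure how well a generated UI matches its input UI components is to count how many of the requested components actually appear in it. Such a check would flag cases like the missing "Advertisement" component. This is a hypothetical metric, not one defined in the paper. It assumes the `detected` categories come from some separate UI element detector.

```python
from collections import Counter
from typing import Iterable


def component_coverage(requested: Iterable[str], detected: Iterable[str]) -> float:
    """Fraction of requested components that appear in the generated UI.

    Categories are matched as multisets, so two requested buttons need two detected buttons.
    """
    req = Counter(requested)
    det = Counter(detected)
    total = sum(req.values())
    if total == 0:
        return 1.0  # nothing was requested, so nothing can be missing
    matched = sum(min(n, det[cat]) for cat, n in req.items())
    return matched / total
```

For example, requesting text, a button, and an advertisement and detecting only text and a button gives a coverage of 2/3.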

In addition, to enhance UI-Diffuser, the team plans to work on three areas: "developing datasets that include high-quality screenshot descriptions," "clipping components from generated UI images," and "generating code from generated UIs." The motivation for clipping components is that generated UI images are useful as ideas for UI design but usually cannot be edited or reused directly.

To overcome this limitation, the team is considering a method that crops each component out of the generated UI image based on its absolute position in the generated layout image. Because UI components may overlap, cropping the upper component can leave blank areas in the lower component. In such cases, they are considering filling the blank areas with the lower component's color.
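The cropping idea can be sketched on a small pixel grid. This is a minimal sketch under two assumptions. First, boxes are given in pixel coordinates in drawing order, with later boxes lying on top. Second, "the color of the lower component" is approximated by the most common color among its visible pixels. `crop_component` is a hypothetical helper, not the team's implementation.

```python
from collections import Counter
from typing import Hashable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h) in pixels


def crop_component(image: List[List[Hashable]], boxes: Sequence[Box],
                   index: int) -> List[List[Hashable]]:
    """Crop boxes[index] out of `image`, filling pixels hidden by elements drawn above it."""
    x, y, w, h = boxes[index]
    above = boxes[index + 1:]  # elements drawn on top of the target

    def covered(px: int, py: int) -> bool:
        # True if some higher element overlaps this pixel.
        return any(bx <= px < bx + bw and by <= py < by + bh for bx, by, bw, bh in above)

    visible = [image[py][px] for py in range(y, y + h) for px in range(x, x + w)
               if not covered(px, py)]
    if not visible:
        raise ValueError("component is fully hidden; no color to fill with")
    fill = Counter(visible).most_common(1)[0][0]  # dominant visible color
    return [[fill if covered(px, py) else image[py][px] for px in range(x, x + w)]
            for py in range(y, y + h)]
```

Using the dominant color works for flat backgrounds such as cards and toolbars. Components with gradients or photos would need an inpainting step instead.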

As for "generating code from generated UIs," the team is also considering generating code that corresponds to a generated UI design. Because the layout image generated by UI-Diffuser contains the category, size, and position of each component, the corresponding code can be derived from it.
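Since the layout carries each component's category, size, and position, a naive code generator can map it straight to markup. This is a minimal sketch, assuming HTML output with absolute positioning and a hypothetical category-to-tag table. Real code generation would also need to infer grouping, responsive layout, and the actual content.

```python
from typing import Sequence, Tuple

Element = Tuple[str, float, float, float, float]  # (category, x, y, w, h) as screen fractions

# Hypothetical category-to-HTML mapping chosen for illustration.
TEMPLATES = {
    "text": '<p style="{style}">Text</p>',
    "button": '<button style="{style}">Button</button>',
    "image": '<img style="{style}" alt="image">',
    "icon": '<img style="{style}" alt="icon">',
}


def layout_to_html(elements: Sequence[Element]) -> str:
    """Emit one absolutely positioned HTML element per layout element."""
    lines = ['<div style="position:relative;width:100%;height:100%">']
    for cat, x, y, w, h in elements:
        # The "%" format type multiplies by 100 and appends a percent sign.
        style = (f"position:absolute;left:{x:.0%};top:{y:.0%};"
                 f"width:{w:.0%};height:{h:.0%}")
        lines.append("  " + TEMPLATES[cat].format(style=style))
    lines.append("</div>")
    return "\n".join(lines)
```

Absolute positioning reproduces the layout exactly but is brittle. A more practical generator would likely convert the boxes into nested rows and columns.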

Existing design tools such as Figma can search for designs and inspect the code of created designs, but doing so is surprisingly time-consuming and difficult. Automating this with generative AI would make it possible to generate a variety of designs more flexibly, using intuitive natural-language input. In the future, anyone may be able to create UI design prototypes easily, making application development even more efficient.
