Introduction to Reinforcement Learning Environments!

3 main points

✔️ Introduction to the environments used to train various reinforcement learning agents

✔️ Different environments have different evaluation objectives, including those for complex and long-horizon tasks

✔️ It is important to choose the right environment according to what you want to evaluate

RL environment list

Contributors: Andrew Szot, Joseph Lim, Youngwoon Lee, Dweep Trivedi, Edward Hu, Nitish Gupta

Introduction

With recent advances in reinforcement learning research, many environments for training reinforcement learning agents have been proposed. So, which environment should we choose for the agent we want to train? In this article, we introduce some of the most frequently used ones to help you choose the right environment for your future reinforcement learning experiments.

Robotics

In this chapter, we introduce environments for applying reinforcement learning to robotics.

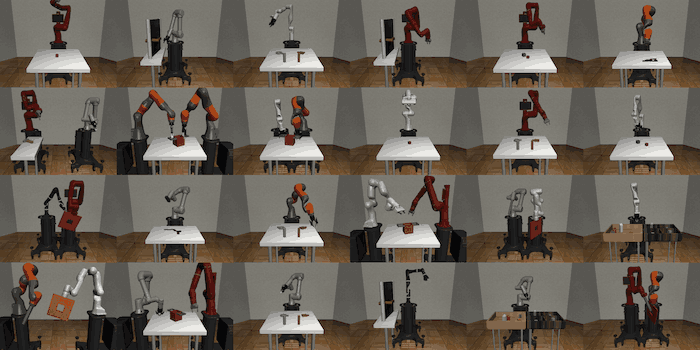

Robosuite

Robosuite is an environment that provides basic manipulation tasks (Lift, Assembly, etc.). It can also be used to evaluate a variety of controllers and robots, and currently provides models of the Panda, Sawyer, IIWA (KUKA), Jaco, Kinova3, UR5e, and Baxter robots. In addition, as shown in the figure, it provides not only tasks solved by a single robot but also tasks solved by multiple robots. A major advantage of this environment is that it is relatively easy to create new environments yourself, which makes it a very useful benchmark.

robosuite: A Modular Simulation Framework and Benchmark for Robot Learning

IKEA Furniture Assembly

This is an environment in which robots assemble furniture. Its tasks are very complex and long-horizon, requiring the agent to act over a long period of time. The environment provides over 80 furniture models, and its background, lighting, and textures can be easily changed. Models of the Baxter and Sawyer robots are currently available. The environment also provides depth and segmentation images.

IKEA Furniture Assembly Environment for Long-Horizon Complex Manipulation Tasks

Meta-World

Meta-World provides 50 different manipulation tasks for the Sawyer robot. It is mainly used for multi-task learning and meta-learning, and offers several evaluation modes that differ in how many tasks are used for training and for testing.

Meta-World: A Benchmark and Evaluation for Multi-Task and Meta Reinforcement Learning

RLBench

Like the environments introduced above, RLBench provides a variety of manipulation tasks, but it is also designed for other research fields such as geometric computer vision. It also includes simple tutorials for research areas such as few-shot learning, meta-learning, sim-to-real transfer, and multi-task learning.

RLBench: The Robot Learning Benchmark & Learning Environment

Game

In this chapter, we present several environments for applying reinforcement learning to games.

Gym Retro

Gym Retro adapts classic video games to the Gym environment interface, and about 1,000 different games are available.

Gotta Learn Fast: A New Benchmark for Generalization in RL

ViZDoom

ViZDoom is a platform based on the first-person shooter game Doom that can be used for reinforcement learning from image information. It also provides several tasks, such as defeating enemies and collecting first-aid kits.

ViZDoom: A Doom-based AI Research Platform for Visual Reinforcement Learning

StarCraft 2

This is an interface for running StarCraft II: the agent receives observations from the game and sends actions back to it through the interface. It has been used mainly in DeepMind's research, among other studies.

StarCraft II: A New Challenge for Reinforcement Learning
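The observe-then-act cycle described for StarCraft II is the same loop that almost every environment in this article exposes. The sketch below illustrates it with a hypothetical toy environment (`CountdownEnv` and `run_episode` are illustrative names, not part of StarCraft II or any library). Its `reset`/`step` methods loosely follow the classic Gym-style convention, in which `step` returns `(observation, reward, done, info)`; a random policy stands in for a learned agent.

```python
import random


class CountdownEnv:
    """Hypothetical toy environment: the agent must drive a counter to zero.

    Observation: the current counter value (int).
    Actions: 0 = do nothing, 1 = decrement the counter.
    """

    def __init__(self, start=5, max_steps=20):
        self.start = start
        self.max_steps = max_steps

    def reset(self):
        # Start a new episode and return the first observation.
        self.counter = self.start
        self.steps = 0
        return self.counter

    def step(self, action):
        # Apply the action, then report (observation, reward, done, info).
        if action == 1:
            self.counter -= 1
        self.steps += 1
        reached_goal = self.counter == 0
        reward = 1.0 if reached_goal else 0.0
        done = reached_goal or self.steps >= self.max_steps
        return self.counter, reward, done, {"steps": self.steps}


def run_episode(env, policy):
    """Run one episode: observe, choose an action, send it, repeat."""
    obs = env.reset()
    total_reward, done = 0.0, False
    while not done:
        action = policy(obs)
        obs, reward, done, info = env.step(action)
        total_reward += reward
    return total_reward, info["steps"]


if __name__ == "__main__":
    rng = random.Random(0)
    random_policy = lambda obs: rng.choice([0, 1])
    print(run_episode(CountdownEnv(), random_policy))
```

Swapping `CountdownEnv` for a real environment leaves `run_episode` essentially unchanged, which is why a shared interface makes it easy to evaluate one agent across many environments.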

Minecraft

This is a simulator that allows agents to play the game Minecraft.

Suites

This chapter introduces so-called suites, which are collections of tasks and environments of various types.

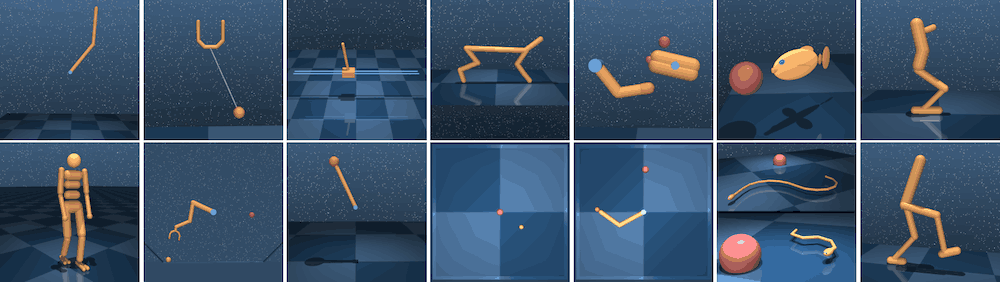

DeepMind Control Suite

This suite, provided by DeepMind, offers a variety of control tasks. Typical examples include Walker, in which the agent learns to walk, and Reacher, in which a planar arm must reach a target in a 2D environment. The suite is often used as a benchmark in various studies, and you can easily switch between giving the agent image observations and low-dimensional states.
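To make the switch between image observations and low-dimensional states concrete, here is a hypothetical toy point-mass environment. It is not the DeepMind Control Suite's API: the class name and the `from_pixels` flag are assumptions for illustration. The same underlying dynamics are exposed either as a `(position, velocity)` state or as a tiny rendered "image".

```python
class PointMass1D:
    """Hypothetical 1-D point mass on the interval [0, 1].

    The underlying state is (position, velocity). With from_pixels=True the
    agent instead receives a tiny "image": a 1 x width row of pixels with the
    pixel under the mass set to 255 and all others set to 0.
    """

    def __init__(self, from_pixels=False, width=8):
        self.from_pixels = from_pixels
        self.width = width
        self.position, self.velocity = 0.5, 0.0

    def _render(self):
        # Map the continuous position to a pixel column.
        col = min(int(self.position * self.width), self.width - 1)
        return [[255 if c == col else 0 for c in range(self.width)]]

    def _observe(self):
        if self.from_pixels:
            return self._render()  # image observation
        return (self.position, self.velocity)  # low-dimensional state

    def reset(self):
        self.position, self.velocity = 0.5, 0.0
        return self._observe()

    def step(self, force, dt=0.1):
        # Simple Euler integration; the position is clipped to [0, 1].
        self.velocity += force * dt
        self.position = min(max(self.position + self.velocity * dt, 0.0), 1.0)
        return self._observe()
```

Because both observation modes share the same dynamics, one algorithm can be compared on the state-based and pixel-based versions of the same task, which is the main benefit of such a switch.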

OpenAI Gym Atari

This environment, provided by OpenAI, offers 59 Atari games, and observations are given as images.

OpenAI Gym MuJoCo

This environment provides continuous control tasks and uses MuJoCo, a very fast physics simulator. By default, observations are low-dimensional states.

OpenAI Gym Robotics

This environment provides goal-based tasks for two robots, ShadowHand (bottom left) and Fetch (bottom right), in which the agent is given a goal to achieve.

Multi-Goal Reinforcement Learning: Challenging Robotics Environments and Request for Research
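In these goal-based tasks, an observation is typically split into the robot's own state, the goal it has actually achieved, and the desired goal, and the reward depends only on whether the two goals are close. The sketch below mirrors the `observation` / `achieved_goal` / `desired_goal` dictionary layout used by Gym's goal-based robotics environments, with a sparse reward of 0 on success and -1 otherwise. The helper names and the 0.05 distance threshold are assumptions for illustration.

```python
import math


def make_goal_observation(robot_state, achieved_goal, desired_goal):
    """Pack a goal-conditioned observation as a dictionary (hypothetical helper)."""
    return {
        "observation": list(robot_state),
        "achieved_goal": list(achieved_goal),
        "desired_goal": list(desired_goal),
    }


def sparse_goal_reward(achieved_goal, desired_goal, distance_threshold=0.05):
    """Return 0.0 if the achieved goal is within the threshold, else -1.0."""
    distance = math.dist(achieved_goal, desired_goal)  # Euclidean distance
    return 0.0 if distance <= distance_threshold else -1.0


if __name__ == "__main__":
    obs = make_goal_observation([0.0, 0.0], [0.10, 0.20, 0.30], [0.10, 0.20, 0.32])
    print(sparse_goal_reward(obs["achieved_goal"], obs["desired_goal"]))
```

Because the reward is computed from the two goals alone, it can be re-evaluated for a different desired goal without re-running the simulator.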

Navigation

In this chapter, we introduce environments for navigation tasks.

DeepMind Lab

Provided by DeepMind, this environment offers challenging 3D navigation tasks, as well as other kinds of tasks such as puzzle solving.

gym-minigrid

gym-minigrid is a lightweight, fast, grid-based environment that is mainly used for simple experiments. It is also easy to use because it is straightforward to modify and extend.
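To illustrate why grid-based environments are so lightweight and easy to modify, here is a minimal grid navigation environment in plain Python. It is a hypothetical sketch, not gym-minigrid's code: the class name, action encoding, wall handling, and reward are all assumptions. The agent starts in one corner, moves with four discrete actions, and receives a reward of 1 on reaching the opposite corner.

```python
class TinyGridEnv:
    """Hypothetical N x N grid world: start at (0, 0), goal at (N-1, N-1).

    Actions: 0 = up, 1 = right, 2 = down, 3 = left.
    Observation: the agent's (row, col) position.
    """

    MOVES = {0: (-1, 0), 1: (0, 1), 2: (1, 0), 3: (0, -1)}

    def __init__(self, size=5, walls=(), max_steps=100):
        self.size = size
        self.walls = set(walls)  # cells the agent cannot enter
        self.goal = (size - 1, size - 1)
        self.max_steps = max_steps

    def reset(self):
        self.pos = (0, 0)
        self.steps = 0
        return self.pos

    def step(self, action):
        dr, dc = self.MOVES[action]
        r, c = self.pos[0] + dr, self.pos[1] + dc
        # Stay in place if the move leaves the grid or hits a wall.
        if 0 <= r < self.size and 0 <= c < self.size and (r, c) not in self.walls:
            self.pos = (r, c)
        self.steps += 1
        reached = self.pos == self.goal
        done = reached or self.steps >= self.max_steps
        return self.pos, (1.0 if reached else 0.0), done, {}
```

Adding new elements, such as extra wall layouts or new object types, only touches a few lines, which is the kind of flexibility that makes grid environments convenient for quick experiments.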

AI2-THOR

AI2-THOR simulates navigation in household scenes and lets the agent interact with various pieces of furniture and objects. The agent's actions are mainly discrete, and the environment is mainly used for long-horizon tasks.

AI2-THOR: An Interactive 3D Environment for Visual AI

Gibson

Like AI2-THOR, Gibson provides tasks related to indoor navigation, but in Gibson the robot is mainly moved by continuous control.

Gibson Env: Real-World Perception for Embodied Agents

Habitat

Habitat is a photorealistic simulator provided by Facebook, which makes it a promising choice for sim-to-real transfer and other applications.

Habitat: A Platform for Embodied AI Research

Multi-Agent

Multi-agent Particle Environment

A simple environment for training multi-agent RL, in which observations are given as continuous values and actions are discrete.
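The combination described here, continuous observations with discrete actions for several agents, can be sketched as a `step` function that takes one action per agent and returns one observation and one reward per agent. The toy environment below is hypothetical and is not the Multi-agent Particle Environment's code: each agent is a point on a 2-D plane, observes its offset from a shared landmark, chooses one of five discrete moves, and all agents receive the same shared reward based on their distances to the landmark.

```python
import math


class ToyParticleEnv:
    """Hypothetical cooperative particle world with N agents and one landmark."""

    # Discrete actions: 0 = stay, 1 = +x, 2 = -x, 3 = +y, 4 = -y.
    MOVES = [(0.0, 0.0), (1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]

    def __init__(self, starts, landmark=(0.0, 0.0), step_size=0.1):
        self.starts = [tuple(p) for p in starts]
        self.landmark = landmark
        self.step_size = step_size

    def _observations(self):
        # Continuous observation: each agent's offset from the landmark.
        lx, ly = self.landmark
        return [(x - lx, y - ly) for x, y in self.positions]

    def reset(self):
        self.positions = list(self.starts)
        return self._observations()

    def step(self, actions):
        # One discrete action per agent, applied simultaneously.
        if len(actions) != len(self.positions):
            raise ValueError("expected one action per agent")
        new_positions = []
        for (x, y), a in zip(self.positions, actions):
            dx, dy = self.MOVES[a]
            new_positions.append((x + self.step_size * dx, y + self.step_size * dy))
        self.positions = new_positions
        # Shared (cooperative) reward: negative sum of distances to the landmark.
        total = sum(math.dist(p, self.landmark) for p in self.positions)
        return self._observations(), [-total] * len(self.positions)
```

A shared reward like this one makes the task cooperative; giving each agent its own reward term would instead allow competitive or mixed settings.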

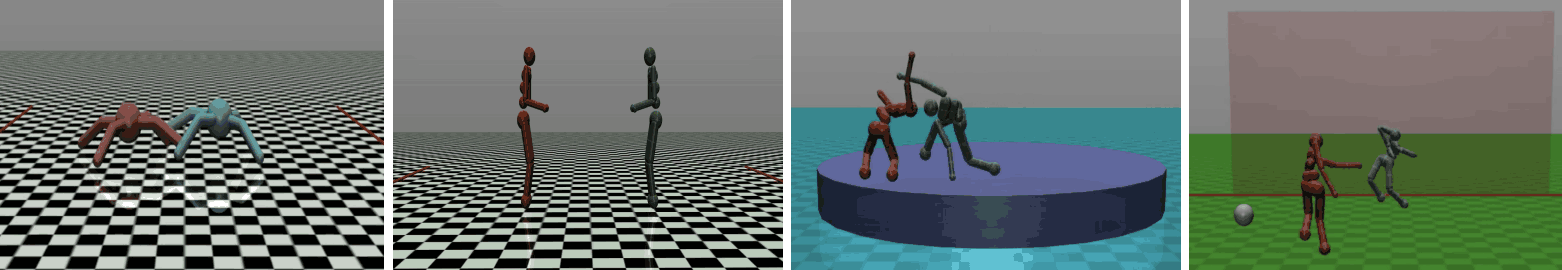

OpenAI Multi-Agent Competition Environments

As shown in the figure, this environment offers a variety of multi-agent continuous control tasks and focuses mainly on competition between agents.

Emergent Complexity via Multi-Agent Competition

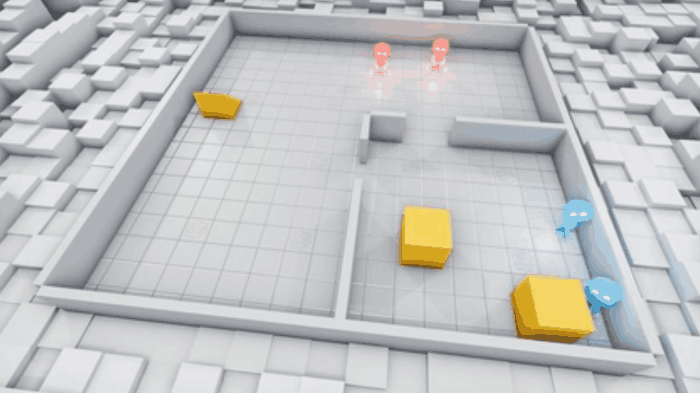

OpenAI Multi-Agent Hide and Seek

This is a multi-agent environment that also focuses on learning how to use given tools (objects).

Safety

Safety has attracted much attention in reinforcement learning research. In particular, when robots are being operated, choosing the wrong action can lead to accidents. In this chapter, we introduce environments in which the safety of reinforcement learning agents can be evaluated.

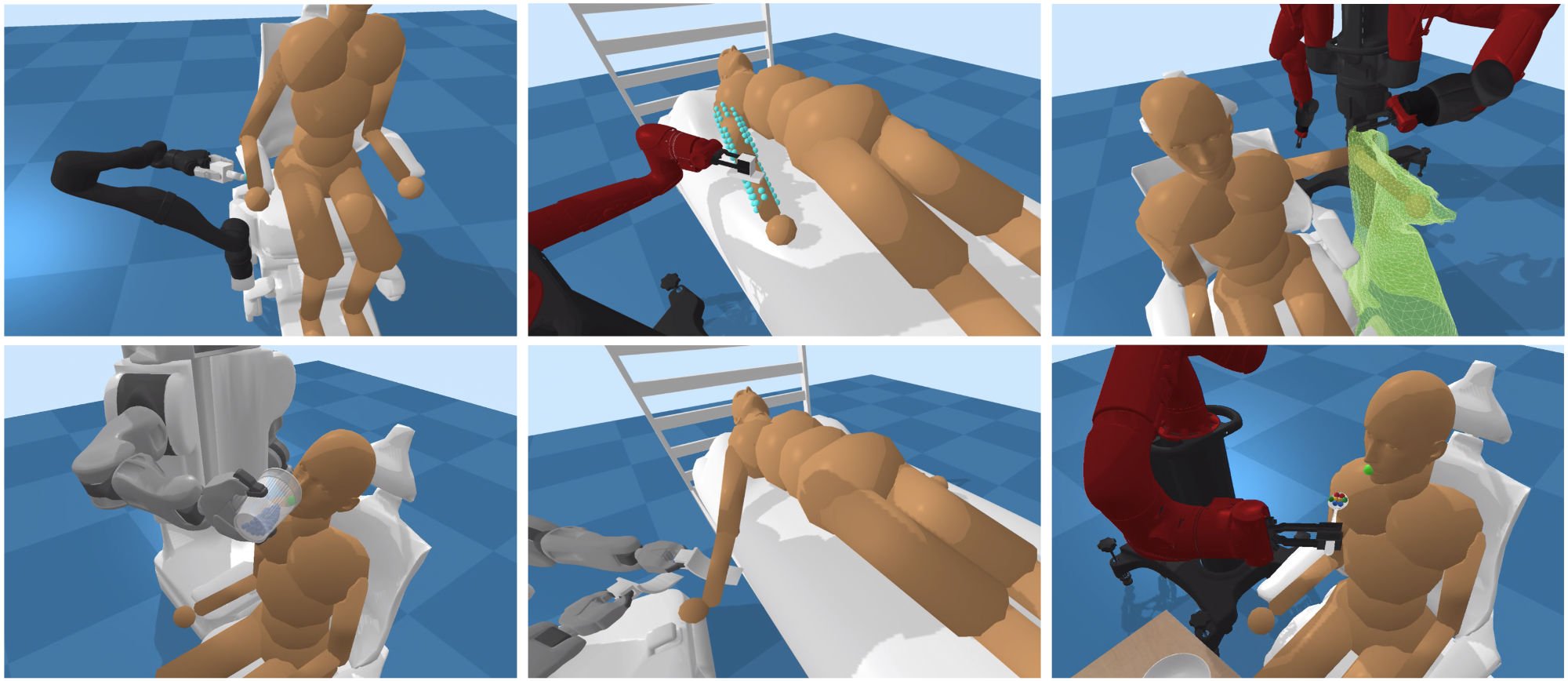

Assistive Gym

The environment provides a total of six assistive tasks (ScratchItch, BedBathing, Feeding, Drinking, Dressing, ArmManipulation) and four types of robots (PR2, Jaco, Baxter, Sawyer). The simulated human can be in one of two modes: static, or moving according to the actions of a separate policy. In addition, either a male or a female human model can be used, each made up of as many as 40 joints.

Assistive Gym: A Physics Simulation Framework for Assistive Robotics

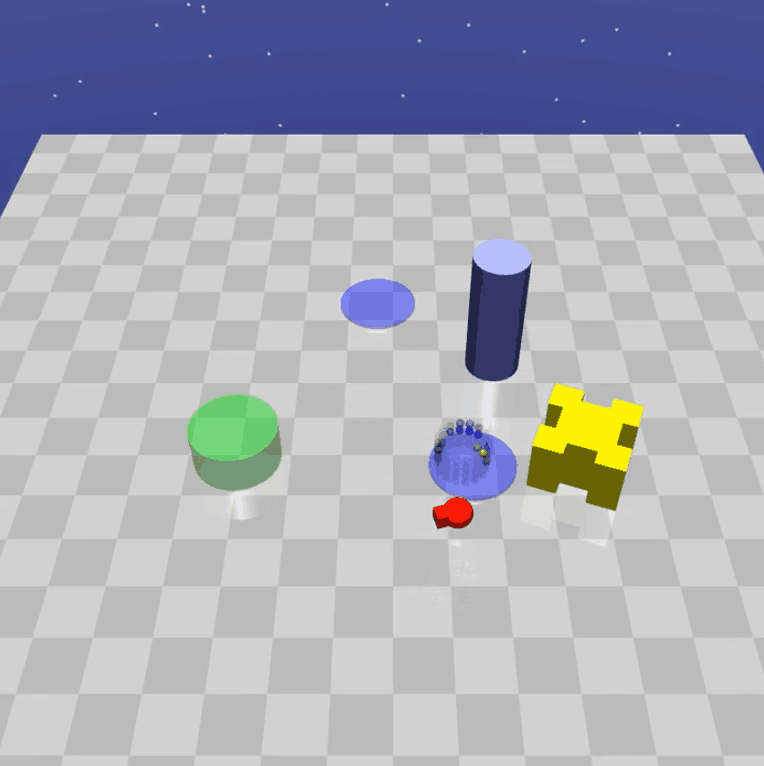

Safety Gym

As shown in the figure, this environment contains a number of obstacles and is mainly used to study safe exploration in reinforcement learning.

Benchmarking Safe Exploration in Deep Reinforcement Learning
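Safety Gym reports a safety cost (for example, for entering a hazard) separately from the reward, and safe exploration is framed as maximizing reward while keeping the episode cost below a threshold. The sketch below illustrates that bookkeeping on a hypothetical one-dimensional corridor with a hazard cell. The environment, the `evaluate` helper, and the budget values are assumptions for illustration, not Safety Gym's implementation.

```python
class HazardCorridor:
    """Hypothetical 1-D corridor: cells 0..length-1, goal at the last cell.

    Entering a hazard cell incurs a cost of 1 but does not end the episode,
    so reward and cost are reported separately.
    """

    def __init__(self, length=6, hazards=(2,), max_steps=20):
        self.length = length
        self.hazards = set(hazards)
        self.max_steps = max_steps

    def reset(self):
        self.pos, self.steps = 0, 0
        return self.pos

    def step(self, action):
        # action: -1 (left), 0 (stay), or +1 (right); position is clipped.
        self.pos = min(max(self.pos + action, 0), self.length - 1)
        self.steps += 1
        reached = self.pos == self.length - 1
        reward = 1.0 if reached else 0.0
        cost = 1.0 if self.pos in self.hazards else 0.0
        done = reached or self.steps >= self.max_steps
        return self.pos, reward, done, {"cost": cost}


def evaluate(env, actions, cost_budget=0.0):
    """Roll out a fixed action sequence; return (return, cost, within_budget)."""
    env.reset()
    ep_return = ep_cost = 0.0
    for a in actions:
        _, reward, done, info = env.step(a)
        ep_return += reward
        ep_cost += info["cost"]
        if done:
            break
    return ep_return, ep_cost, ep_cost <= cost_budget
```

In this toy corridor, reaching the goal requires crossing the hazard, so with a budget of 0 the only safe behavior gives up the reward, whereas a budget of 1 accepts the same trajectory. Trading off reward against accumulated cost in this way is what safe-exploration methods are evaluated on.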

Autonomous Driving

Autonomous Vehicle Simulator

This is a simulator for autonomous driving from Microsoft AI & Research, built on Unreal Engine / Unity.

CARLA

CARLA is an environment for training and evaluating autonomous driving. Its APIs can be used to configure various environmental conditions such as traffic, pedestrian behavior, and weather. It also provides a variety of sensor data, including LIDAR, multiple cameras, depth sensors, and GPS. Furthermore, users can create their own maps.

CARLA: An Open Urban Driving Simulator

DeepGTAV v2

This is a plug-in for GTAV (Grand Theft Auto V) that enables learning autonomous driving from image input.

Summary

In this article, we have introduced various environments for reinforcement learning. Because the right environment depends on what you want to evaluate, it is very important to know what each environment offers and what it can be used to evaluate. It is also interesting to consider what kind of new environment would be needed to evaluate something that existing environments cannot.
