It's Out At Last! Truly DETR! An Innovative Paradigm For Object Detection

3 main points

✔️ Applying the Transformer to object detection

✔️ End-to-end models to reduce manual design

✔️ Redefining object detection as a direct set prediction problem

End-to-End Object Detection with Transformers

written by Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, Sergey Zagoruyko

(Submitted on 26 May 2020 (v1), last revised 28 May 2020 (this version, v3))

Comments: Published by arXiv

Subjects: Computer Vision and Pattern Recognition (cs.CV)


Introduction

Object detection has become a commodity: models such as YOLO and SSD are used in a wide range of scenarios, and quantized versions now run on computers as small as a Raspberry Pi.

Into this field comes a new paradigm built on the Transformer, the architecture that has taken natural language processing by storm.

DETR = DEtection TRansformer

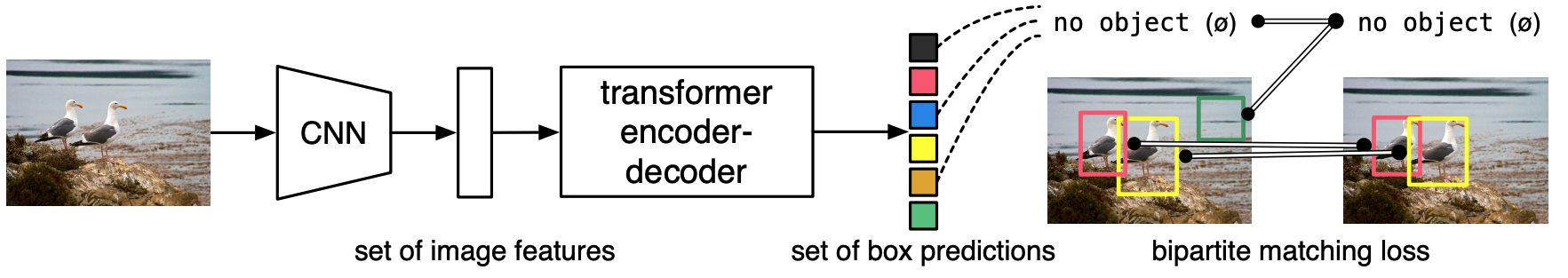

Structurally, it is a CNN body with a Transformer connected to it.

Structure of DETR (from Fig. 1 of the paper)
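To make the "CNN body plus Transformer" structure concrete, here is a minimal PyTorch sketch. It is not the authors' implementation: the class name `TinyDETR`, the two-layer convolutional stand-in for the CNN body, and all layer sizes are assumptions chosen to keep the example small, and positional encodings are left out for brevity.

```python
import torch
from torch import nn


class TinyDETR(nn.Module):
    """Illustrative CNN + Transformer detector; all sizes are arbitrary assumptions."""

    def __init__(self, num_classes=3, hidden_dim=32, num_queries=5):
        super().__init__()
        # Small stand-in CNN body (hypothetical; chosen only to keep the sketch light).
        self.backbone = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.Conv2d(16, hidden_dim, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
        )
        self.transformer = nn.Transformer(
            d_model=hidden_dim, nhead=4,
            num_encoder_layers=1, num_decoder_layers=1,
            dim_feedforward=64, batch_first=True,
        )
        # Learned queries: one slot per potential detection.
        self.query_embed = nn.Parameter(torch.rand(num_queries, hidden_dim))
        # Heads: class logits (one extra class for "no object") and a 4-value box.
        self.class_head = nn.Linear(hidden_dim, num_classes + 1)
        self.box_head = nn.Linear(hidden_dim, 4)

    def forward(self, images):
        features = self.backbone(images)                # (B, C, H, W)
        tokens = features.flatten(2).transpose(1, 2)    # (B, H*W, C)
        queries = self.query_embed.unsqueeze(0).expand(images.size(0), -1, -1)
        decoded = self.transformer(tokens, queries)     # (B, num_queries, C)
        # Boxes are squashed to [0, 1] as normalized coordinates.
        return self.class_head(decoded), self.box_head(decoded).sigmoid()


model = TinyDETR().eval()
with torch.no_grad():
    logits, boxes = model(torch.rand(2, 3, 32, 32))
print(logits.shape, boxes.shape)
```

Each of the five query slots yields one class prediction and one box, so the model always outputs a fixed-size set of candidate detections per image.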

At first glance it looks like a simple connection of a CNN and a Transformer, but this is an excellent paper with solid experimental comparisons and extensive discussion. It draws on a great deal of know-how from both object detection and Transformers, and there is a lot to learn from it.

In addition, the implementation is available on GitHub, along with a trained model you can try out right away. With more than 3.1k stars already (as of June 2020), this newcomer is attracting a lot of attention.

Let's take a look at DETR and the key points worth noting.

Point 1: End-to-End Philosophy

This end-to-end philosophy has already led to major breakthroughs in machine translation and speech recognition. It is significant that it also proves effective for object detection, where hand-designed components still determine performance.
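One way to see what "direct set prediction" means in practice: predictions are matched one-to-one with ground-truth objects, regardless of the order in which they are produced. The sketch below illustrates that idea under simplifying assumptions. The function `match_predictions`, the L1 cost on box centers, and the brute-force search are all hypothetical choices for illustration; the paper uses the Hungarian algorithm with a cost that also includes class probabilities.

```python
from itertools import permutations


def match_predictions(pred_centers, true_centers):
    """Return the lowest-cost one-to-one assignment of predictions to ground truth.

    Brute force over permutations; assumes len(pred_centers) >= len(true_centers).
    """
    def cost(p, t):
        # Hypothetical cost: L1 distance between box centers.
        return abs(p[0] - t[0]) + abs(p[1] - t[1])

    best_total, best_assignment = float("inf"), None
    for perm in permutations(range(len(pred_centers)), len(true_centers)):
        total = sum(cost(pred_centers[p], true_centers[t]) for t, p in enumerate(perm))
        if total < best_total:
            best_total, best_assignment = total, perm
    # best_assignment[t] is the index of the prediction matched to ground truth t.
    return best_assignment, best_total


preds = [(0.9, 0.9), (0.1, 0.2), (0.5, 0.5)]
truth = [(0.1, 0.1), (0.8, 0.9)]
assignment, total = match_predictions(preds, truth)
print(assignment)
```

Here the third prediction is left unmatched; in DETR, such leftover predictions are trained to output the "no object" class, which is how duplicates are suppressed without a separate post-processing step.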

Examples of human design elements in object detection
