Increasingly Sophisticated Attacks on AI! Your AI May Be Under Attack Without Your Knowledge!

3 main points

✔️ An exhaustive survey paper on backdoor attacks that pose a threat to AI systems

✔️ A successful backdoor attack can cause incidents as serious as a car crash.

✔️ A wide variety of attack methods have been proposed, which makes backdoor attacks very difficult to defend against.

Backdoor Attacks and Countermeasures on Deep Learning: A Comprehensive Review

written by Yansong Gao, Bao Gia Doan, Zhi Zhang, Siqi Ma, Jiliang Zhang, Anmin Fu, Surya Nepal, Hyoungshick Kim

(Submitted on 21 Jul 2020 (v1), last revised 2 Aug 2020 (this version, v3))

Comments: Published on arXiv

Subjects: Cryptography and Security (cs.CR); Computer Vision and Pattern Recognition (cs.CV); Machine Learning (cs.LG)

Introduction

This article continues our discussion of backdoor attacks from the previous article. If you are not yet familiar with what a backdoor attack is, this article may be hard to follow, so we recommend reading the previous article first.

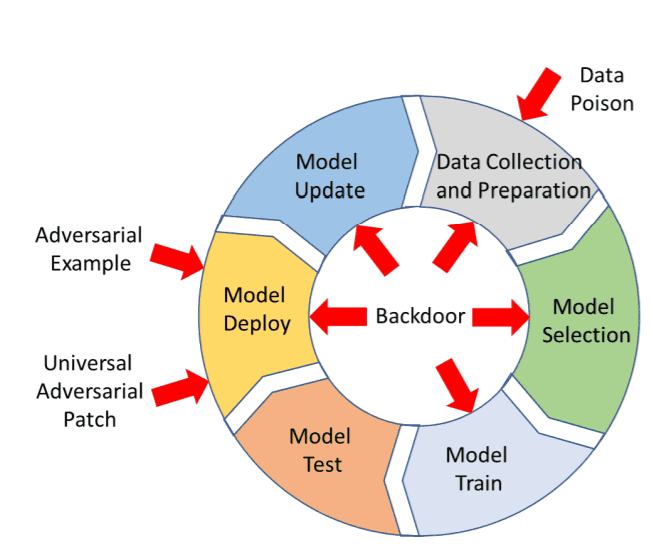

The Big Picture of Backdoor Attacks

This diagram also appeared in the previous article. Unlike the well-known adversarial attack, which targets a model only after it has been deployed, a backdoor attack can also be mounted at earlier stages of the pipeline, such as data collection and training. This shows how broad the reach of backdoor attacks is.
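To make the "data collection and training" stage concrete, here is a minimal sketch of a BadNets-style data-poisoning backdoor, a classic technique from the literature rather than one of the specific methods covered in this article. It assumes grayscale images stored as nested lists of pixel values; the function names `stamp_trigger` and `poison_dataset`, the 3x3 white corner patch, and the 10% poisoning rate are hypothetical choices for illustration only.

```python
import random
from typing import List, Tuple

Image = List[List[int]]  # hypothetical format: grayscale image, pixel values 0-255


def stamp_trigger(img: Image, size: int = 3, value: int = 255) -> Image:
    """Return a copy of img with a size x size square (the trigger) in the bottom-right corner."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # copy rows so the original image is not modified
    for r in range(h - size, h):
        for c in range(w - size, w):
            out[r][c] = value
    return out


def poison_dataset(
    data: List[Tuple[Image, int]],
    target_label: int,
    poison_rate: float = 0.1,
    seed: int = 0,
) -> Tuple[List[Tuple[Image, int]], List[int]]:
    """Stamp the trigger on a random fraction of samples and relabel them as target_label.

    Returns the poisoned dataset and the sorted indices of the poisoned samples.
    """
    rng = random.Random(seed)
    n_poison = round(len(data) * poison_rate)
    poisoned_idx = sorted(rng.sample(range(len(data)), n_poison))
    chosen = set(poisoned_idx)
    out = []
    for i, (img, label) in enumerate(data):
        if i in chosen:
            out.append((stamp_trigger(img), target_label))  # trigger + attacker's label
        else:
            out.append((img, label))  # clean sample left untouched
    return out, poisoned_idx


if __name__ == "__main__":
    # Toy dataset: 100 blank 8x8 images with labels 0-9.
    clean = [([[0] * 8 for _ in range(8)], i % 10) for i in range(100)]
    poisoned, idx = poison_dataset(clean, target_label=7, poison_rate=0.1)
    print(len(idx), all(poisoned[i][1] == 7 for i in idx))
```

The idea is that a model trained on such a dataset tends to behave normally on clean inputs but to predict the attacker's target label whenever the trigger is present. Because the poisoning happens before training, the attack can be planted long before the model is ever deployed.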

Backdoor attacks can be grouped into six major attack surfaces, as follows:

A. Outsourcing Attack

B. Pretrained Attack

C. Data Collection Attack

D. Collaborative Learning Attack

E. Post-Deployment Attack

F. Code Poisoning Attack

In this article, I introduce typical attack techniques for categories D through F.

Introduction to Each Attack

This section first gives an overview of each attack and then introduces its typical techniques.
