Top Articles by Page Views Published in 2021

2021 saw tremendous progress centered on the Transformer. It was also a year of surprises like "performance still goes up this much?" and "couldn't we just use pooling instead?" These questions show that we are still in the process of understanding the theory.

AI is still advancing rapidly with the Transformer, unsupervised learning, and NeRF. Here are the five most-read articles of 2021, counting only articles published in 2021. One of them covers data augmentation, a topic I think many people worked hard to study in 2021.

No.1: Graphs are so awesome! A review of the integration with deep learning

The most-read article this year introduced a survey paper on integrating deep learning with graph theory. The paper covers the fundamentals and development of graph neural networks (GNNs).

No.2: What's happening in quantum machine learning?

Second place went to an article introducing a paper that surveys the current state of quantum machine learning. This field is worth watching in 2022.

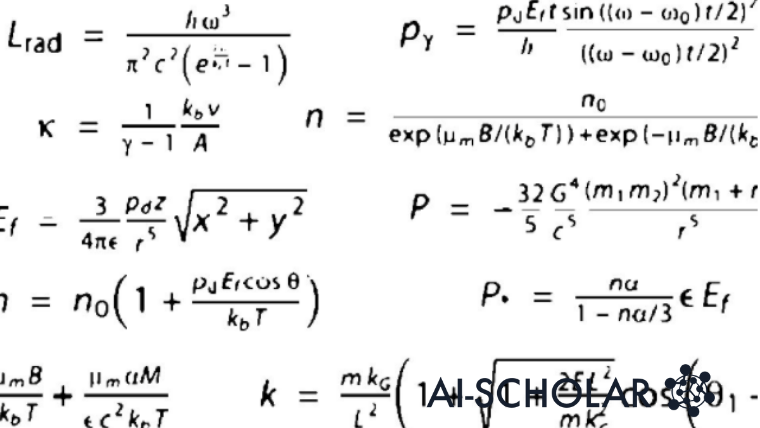

No.3: Discovering Feynman's Physics Equations with Machine Learning: AI Feynman

This paper finds hidden simplicity, such as symmetry and separability, in unknown data. It then recursively splits difficult problems into simpler ones with fewer variables. The paper captures the romance of AI discovering physics equations.
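The separability idea can be illustrated with a small check. A function of two variables is additively separable, f(x1, x2) = g(x1) + h(x2), exactly when f(a, b) + f(c, d) = f(a, d) + f(c, b) for all points a, c in the x1 range and b, d in the x2 range. The sketch below tests this identity on random points. If the test passes, the code splits f into two one-variable functions that can be solved separately.

This is a minimal sketch under stated assumptions, not the AI Feynman implementation. The function names are hypothetical. The code assumes f can be evaluated directly, whereas AI Feynman works from data and fits a neural network first. It also covers only additive separability, not the paper's other tests such as multiplicative separability and symmetry.

```python
import math
import random


# Hypothetical helpers for illustration; not code from the AI Feynman paper.
def is_additively_separable(f, ranges, trials=200, tol=1e-9, seed=0):
    """Return True if f(x1, x2) behaves like g(x1) + h(x2) on random samples.

    Uses the identity f(a, b) + f(c, d) == f(a, d) + f(c, b),
    which holds for every sample exactly when f is additively separable.
    """
    rng = random.Random(seed)
    (lo1, hi1), (lo2, hi2) = ranges
    for _ in range(trials):
        a, c = rng.uniform(lo1, hi1), rng.uniform(lo1, hi1)
        b, d = rng.uniform(lo2, hi2), rng.uniform(lo2, hi2)
        lhs = f(a, b) + f(c, d)
        rhs = f(a, d) + f(c, b)
        # Relative tolerance absorbs floating-point rounding.
        if abs(lhs - rhs) > tol * max(1.0, abs(lhs), abs(rhs)):
            return False
    return True


def split_additive(f, a0, b0):
    """Split a separable f into g(x1) and h(x2) with f(x1, x2) == g(x1) + h(x2).

    g fixes x2 at b0; h fixes x1 at a0 and subtracts f(a0, b0) so that
    the constant is not counted twice.
    """
    f0 = f(a0, b0)

    def g(x1):
        return f(x1, b0)

    def h(x2):
        return f(a0, x2) - f0

    return g, h


if __name__ == "__main__":
    def separable(x, y):
        return x**2 + math.sin(y)

    def coupled(x, y):
        return x * y

    box = [(-2.0, 2.0), (-2.0, 2.0)]
    print(is_additively_separable(separable, box))  # True
    print(is_additively_separable(coupled, box))    # False
```

Once f has been split, each one-variable piece is a smaller problem, which mirrors the recursive simplification described above. The second function, x * y, fails because it is multiplicatively, not additively, separable.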

No.4: [GAN] NVIDIA achieves highly accurate GANs even on extremely small datasets! What is ADA, the augmentation method that avoids overfitting?

This NVIDIA paper presents a technique for training GANs with a small amount of data. NVIDIA has contributed a great deal to GAN technology. I expect its researchers to keep playing an important role in the development of GANs.

No.5: A new champion is here: NFNets, without batch normalization! Top performance on image tasks!

Batch normalization is used as a matter of course in most tasks. It has been shown to smooth the loss surface and to have a regularizing effect on networks, which allows training with larger batches. Research like this, which questions what everyone takes for granted, will be important for the future of AI. After all, 2021 was the year the Transformer, once assumed unsuitable for images, produced strong results on them. I want to keep exploring new possibilities with that lesson in mind.
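To make concrete what NFNets do without, here is a minimal sketch of training-mode batch normalization in plain Python. Each feature is normalized with the mean and variance computed over the current batch, then scaled by gamma and shifted by beta.

The function name and the list-of-lists input layout are illustrative assumptions. The sketch also omits the running statistics that real implementations keep for inference.

```python
import math


# Hypothetical helper for illustration; not code from the NFNets paper.
def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization.

    batch: list of samples, each a list of feature values.
    Each feature (column) is normalized using statistics of the whole batch.
    """
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        column = [sample[j] for sample in batch]
        mean = sum(column) / n
        # Biased (population) variance, as used during training.
        var = sum((v - mean) ** 2 for v in column) / n
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            out[i][j] = gamma * (batch[i][j] - mean) * inv_std + beta
    return out


if __name__ == "__main__":
    # The same sample [1.0] is normalized differently depending on its batchmates.
    print(batch_norm([[1.0], [3.0]])[0][0])   # negative: 1.0 is below the batch mean
    print(batch_norm([[1.0], [-1.0]])[0][0])  # positive: 1.0 is above the batch mean
```

The demo shows that each sample's output depends on the other samples in its batch. This coupling is part of what normalizer-free networks avoid.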

Summary

AI is still a developing technology. Some phenomena remain beyond our understanding, and this was a year in which many established theories were overturned. Some areas are still far from real-world deployment, such as improving the accuracy of unsupervised learning and responding effectively to unknown data. Even so, some companies are already using AI skillfully and producing results.

Let's look forward to further progress as we welcome 2022! Thank you all for your hard work this year.
