Feature-limiting Gates To Make One Filter Responsible For One Category

3 main points

✔️ Control of class-specific filters

✔️ Improved filter interpretability without loss of accuracy

✔️ Applicable to object localization and adversarial sample detection

Training Interpretable Convolutional Neural Networks by Differentiating Class-specific Filters

written by Haoyu Liang, Zhihao Ouyang, Yuyuan Zeng, Hang Su, Zihao He, Shu-Tao Xia, Jun Zhu, Bo Zhang

(Submitted on 16 Jul 2020)

Comments: Published on arXiv

Subjects: Computer Vision and Pattern Recognition (cs.CV); Machine Learning (cs.LG)


Introduction

Convolutional Neural Networks (CNNs) have achieved high accuracy on visual tasks. Even so, these powerful models remain difficult to interpret. Opinions differ on how human and AI interpretations differ and on how much interpretability is needed, but a model we can interpret is clearly preferable to one we cannot. Where a model's reasoning differs from a human's, that difference can itself offer new insight. Interpretability would also help confirm the reliability of CNNs in applications such as autonomous driving and medical diagnosis.

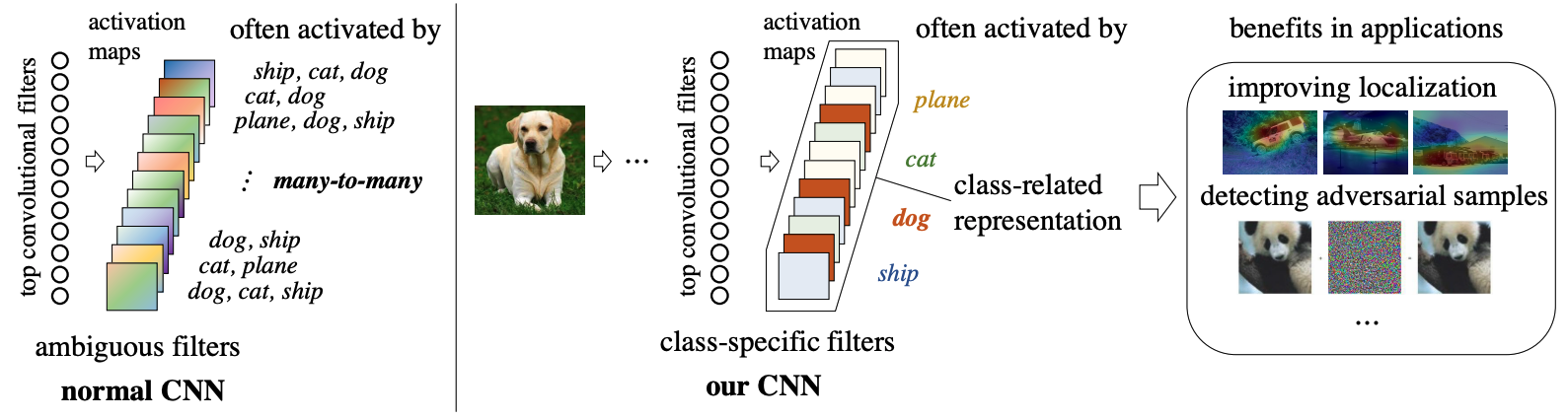

One obstacle to interpretability is filter-class entanglement: a single filter responds to many different categories. The term may be unfamiliar, but comparing a conventional CNN with the method presented in this paper in the figure makes the idea, and how it improves interpretation, intuitive.

On the left is a conventional CNN. A single filter responds to many different categories, such as ship, cat, and dog, which makes it hard to interpret. In the proposed method on the right, each filter corresponds to one category, such as cat or dog, which makes it easier to interpret. The paper introduced here improves interpretability by making each filter responsible for a single category. The idea is plausible: earlier research found redundant features across different filters, and the possibility of learning specialized filters was presented at CVPR 2019.
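To make "one filter per category" concrete, here is a minimal sketch in plain Python, not the paper's implementation. It assumes each filter is summarized by a single pooled activation value and that the filter-to-category assignment (`filter_class`) is fixed by hand; a trained model would have to learn it. The function names `gate_features` and `class_specificity` are hypothetical. The first function acts as a feature-limiting gate that passes only the filters assigned to the image's category; the second measures entanglement as the share of each filter's activation that falls on its top category.

```python
from typing import List


def gate_features(features: List[float], label: int, filter_class: List[int]) -> List[float]:
    """Hypothetical feature-limiting gate.

    Keeps only the filters assigned to `label` and zeroes out the rest.
    `filter_class[k]` is the category assumed to be assigned to filter k.
    """
    return [f if filter_class[k] == label else 0.0 for k, f in enumerate(features)]


def class_specificity(mean_act: List[List[float]]) -> List[float]:
    """Hypothetical entanglement measure, one score per filter.

    mean_act[c][k] is the mean (non-negative) activation of filter k on images of class c.
    A score of 1.0 means the filter fires for a single class;
    1 / num_classes means its activation is spread evenly (fully entangled).
    """
    num_classes = len(mean_act)
    num_filters = len(mean_act[0])
    scores = []
    for k in range(num_filters):
        column = [mean_act[c][k] for c in range(num_classes)]
        total = sum(column)
        # Share of the filter's total activation taken by its strongest class.
        scores.append(max(column) / total if total > 0 else 0.0)
    return scores


if __name__ == "__main__":
    # Three classes (ship, cat, dog) and three filters.
    entangled = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
    specific = [[2.0, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 1.5]]
    print(class_specificity(entangled))  # about 0.33 for every filter
    print(class_specificity(specific))   # [1.0, 1.0, 1.0]
    print(gate_features([0.5, 0.8, 0.2], label=1, filter_class=[0, 1, 2]))  # [0.0, 0.8, 0.0]
```

In the entangled case every filter splits its activation evenly across the three categories, which corresponds to the left side of the figure; in the class-specific case each filter fires for exactly one category, which corresponds to the right side.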
