SOTA With Contrastive Learning And Clustering! (Representation Learning Of Images Summer 2020 Feature 3)

3 main points

✔️ Avoiding the difficulties of negative sampling in contrastive learning by using clustering

✔️ PCL, a method that combines clustering with the EM algorithm

✔️ SwAV, a method that further improves performance by learning consistent cluster assignments

Prototypical Contrastive Learning of Unsupervised Representations (PCL)

written by Junnan Li, Pan Zhou, Caiming Xiong, Richard Socher, Steven C.H. Hoi

(Submitted on 11 May 2020 (v1), last revised 28 Jul 2020 (this version, v4))

Comments: Published by arXiv

Subjects: Computer Vision and Pattern Recognition (cs.CV); Machine Learning (cs.LG)

Unsupervised Learning of Visual Features by Contrasting Cluster Assignments (SwAV)

written by Mathilde Caron, Ishan Misra, Julien Mairal, Priya Goyal, Piotr Bojanowski, Armand Joulin

(Submitted on 11 May 2020 (v1), last revised 28 Jul 2020 (this version, v4))

Comments: Accepted at NeurIPS 2020

Subjects: Computer Vision and Pattern Recognition (cs.CV)

This article is part of the writer's special series "Representation Learning Of Images Summer 2020", which introduces various unsupervised learning methods.

Part 1. Image GPT for domain knowledge-free and unsupervised learning, and image generation is amazing!

Part 2. Contrastive Learning's Two Leading Methods SimCLR And MoCo, And The Evolution Of Each

Part 3. SOTA With Contrastive Learning And Clustering!

Part 4. Questions For Contrastive Learning : "What Makes?"

Part 5. The Versatile And Practical DeepMind Unsupervised Learning Method

Having survived two AI winters and gained expressive power from the massive ImageNet dataset, AI for images blossomed in a big way in 2012. However, this success depended on costly human labeling of images. In contrast, BERT, which made such a large social impact in 2018 that natural language processing raised concerns about fake news, is notable for being trained on vast amounts of data as is, without labels.

Contrastive learning is a form of unsupervised learning that, instead of relying on costly labels, learns by comparing data samples with one another, so it can be trained on large amounts of data as is. It has been applied successfully to images, where it has already surpassed the performance of models pretrained with ImageNet labels, and like BERT it is expected to have a growing impact on the imaging field.

In my last article, we looked at contrastive learning and its two major methods, SimCLR and MoCo.

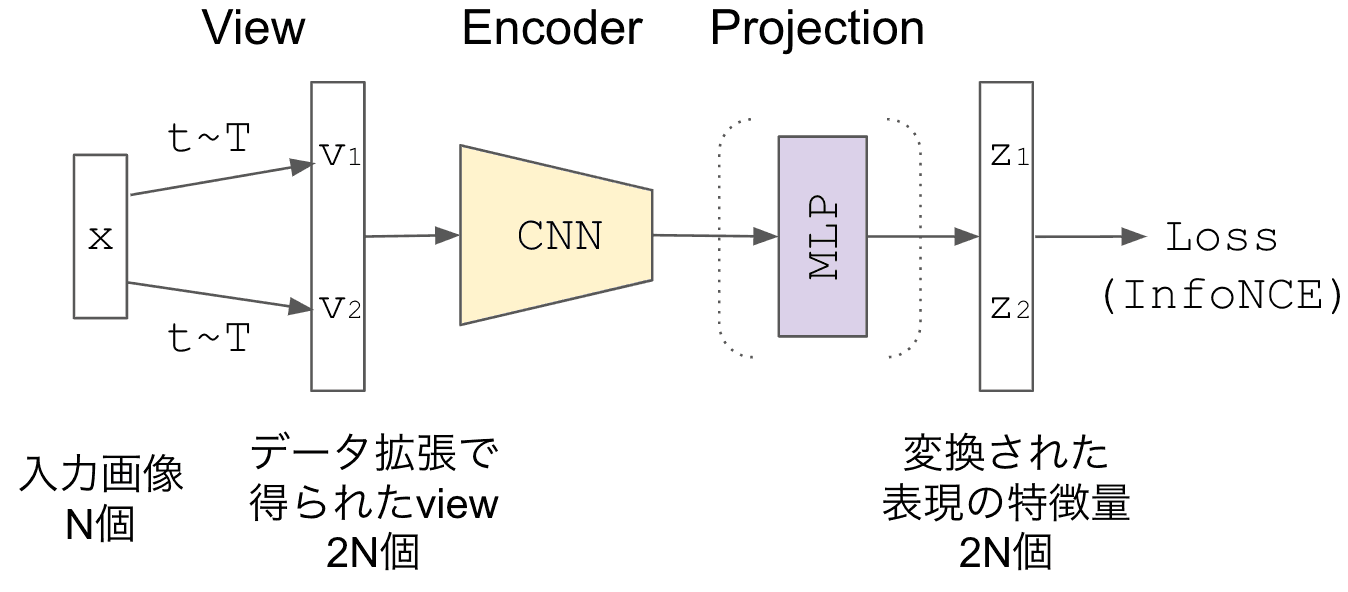

Overview of contrastive learning (created by the writer)

- SimCLR is a straightforward implementation with high performance, but it needs a huge batch size to obtain a large number of negative samples.

- To compensate for this shortcoming, MoCo keeps negative samples in a queue that is updated on the fly, which acts as a memory mechanism.
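To make the role of negative samples concrete, here is a minimal NumPy sketch of an InfoNCE-style contrastive loss together with a simplified MoCo-like queue of negatives. It is an illustration under stated assumptions, not the SimCLR or MoCo implementation: the names `info_nce` and `NegativeQueue`, the temperature of 0.1, and the random embeddings are all hypothetical, and the encoders and MoCo's momentum update are omitted.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale vectors to unit length so dot products are cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def info_nce(query, positive, negatives, temperature=0.1):
    """InfoNCE-style loss.

    query, positive: (B, D) embeddings of two views of the same images.
    negatives:       (K, D) embeddings used as negatives for every query.
    """
    q, p, n = l2_normalize(query), l2_normalize(positive), l2_normalize(negatives)
    pos_logit = np.sum(q * p, axis=1, keepdims=True) / temperature  # (B, 1)
    neg_logits = q @ n.T / temperature                               # (B, K)
    logits = np.concatenate([pos_logit, neg_logits], axis=1)         # (B, 1 + K)
    logits = logits - logits.max(axis=1, keepdims=True)              # numerical stability
    # Cross-entropy with the positive (column 0) as the correct class.
    log_prob = logits[:, 0] - np.log(np.exp(logits).sum(axis=1))
    return float(-log_prob.mean())

class NegativeQueue:
    """Fixed-size FIFO of past embeddings (hypothetical, simplified memory)."""

    def __init__(self, size, dim, rng):
        # Assumption: start from random unit vectors instead of real features.
        self.keys = l2_normalize(rng.normal(size=(size, dim)))
        self.ptr = 0

    def enqueue(self, new_keys):
        # Overwrite the oldest entries with the newest embeddings.
        for key in l2_normalize(new_keys):
            self.keys[self.ptr] = key
            self.ptr = (self.ptr + 1) % len(self.keys)

rng = np.random.default_rng(0)
queue = NegativeQueue(size=64, dim=16, rng=rng)
view_a = rng.normal(size=(4, 16))                  # stand-in for encoder outputs
view_b = view_a + 0.05 * rng.normal(size=(4, 16))  # second view of the same images
loss = info_nce(view_a, view_b, queue.keys)
queue.enqueue(view_b)                              # current keys become future negatives
```

The queue lets the number of negatives (64 here) be chosen independently of the batch size (4 here), which is the point of the memory mechanism. Without it, the negatives have to come from the batch itself, which is why the batch must be large.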

Besides the MoCo-like solution, there is another approach: use clustering to obtain representative samples, called "prototypes", and let these prototypes stand in for a large number of negative samples.
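The prototype idea can be sketched as follows, assuming a simple spherical k-means (cosine similarity) with farthest-first initialization as the clustering step. The functions `kmeans_prototypes` and `prototype_contrastive_loss` are hypothetical names for illustration, and the loss is a plain softmax cross-entropy over prototypes rather than the exact objective of any particular paper.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def kmeans_prototypes(features, k, n_iter=20, seed=0):
    """Spherical k-means; returns (prototypes of shape (k, D), assignments of shape (N,))."""
    rng = np.random.default_rng(seed)
    x = l2_normalize(features)
    # Farthest-first initialization: start from a random point, then keep
    # adding the point least similar to the centers chosen so far.
    centers = [x[rng.integers(len(x))]]
    while len(centers) < k:
        sim_to_nearest = np.max(x @ np.array(centers).T, axis=1)
        centers.append(x[np.argmin(sim_to_nearest)])
    centers = np.array(centers)
    for _ in range(n_iter):
        assign = np.argmax(x @ centers.T, axis=1)   # most similar center
        for c in range(k):
            members = x[assign == c]
            if len(members) > 0:                    # keep the old center if a cluster is empty
                centers[c] = members.mean(axis=0)
        centers = l2_normalize(centers)
    assign = np.argmax(x @ centers.T, axis=1)
    return centers, assign

def prototype_contrastive_loss(features, prototypes, assign, temperature=0.1):
    """Pull each sample toward its own prototype and away from the other prototypes."""
    x = l2_normalize(features)
    logits = x @ prototypes.T / temperature                       # (N, k)
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(len(x)), assign].mean())
```

In this sketch each sample is compared with only `k` prototypes instead of thousands of individual negatives, so the cost of the contrast no longer depends on the batch size or on a queue length.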

In this article, I focus on methods that make use of this clustering approach.

- PCL is a solid method that alternates between "clustering" and "optimization" within the framework of the EM algorithm.

- SwAV improves performance by turning the consistency of cluster assignments into the training task.
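As a rough illustration of "consistency of cluster assignments", the sketch below computes soft cluster assignments for two views of the same images and asks each view to predict the assignment of the other. The Sinkhorn-style normalization, the function names, and the values `eps=0.05` and `temperature=0.1` are assumptions for this sketch. It is not the official SwAV code, and the prototypes are fixed here rather than learned.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def sinkhorn(scores, eps=0.05, n_iter=3):
    """Turn (B, K) scores into soft assignments that spread samples over all K prototypes."""
    q = np.exp((scores - scores.max()) / eps).T   # (K, B)
    q /= q.sum()
    k, b = q.shape
    for _ in range(n_iter):
        q /= q.sum(axis=1, keepdims=True)         # each prototype row sums to 1 ...
        q /= k                                    # ... then to 1/K
        q /= q.sum(axis=0, keepdims=True)         # each sample column sums to 1 ...
        q /= b                                    # ... then to 1/B
    return (q * b).T                              # (B, K), every row sums to 1

def swapped_prediction_loss(z1, z2, prototypes, temperature=0.1):
    """Each view predicts the cluster assignment computed from the other view."""
    c = l2_normalize(prototypes)
    s1 = l2_normalize(z1) @ c.T
    s2 = l2_normalize(z2) @ c.T
    q1, q2 = sinkhorn(s1), sinkhorn(s2)           # targets, treated as constants
    p1, p2 = softmax(s1 / temperature), softmax(s2 / temperature)
    loss_12 = -(q2 * np.log(p1 + 1e-12)).sum(axis=1)   # view 1 predicts view 2's assignment
    loss_21 = -(q1 * np.log(p2 + 1e-12)).sum(axis=1)   # view 2 predicts view 1's assignment
    return float((loss_12 + loss_21).mean() / 2)
```

The loss is small when both views of an image land in the same cluster and large when they disagree, so minimizing it pushes the encoder toward view-invariant cluster assignments without comparing individual pairs of samples.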
