Transformer Without Attention: gMLP Will Be Active!

3 main points

✔️ Modifying the transformer architecture to use only MLPs.

✔️ Works equally well on vision and NLP tasks.

✔️ Performance is better than or on par with current transformer models.

Pay Attention to MLPs

written by Hanxiao Liu, Zihang Dai, David R. So, Quoc V. Le

(Submitted on 17 May 2021 (v1), last revised 1 Jun 2021 (this version, v2))

Comments: Accepted by arXiv.

Subjects: Machine Learning (cs.LG); Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV)

code:

Introduction

Transformers have been widely adopted in NLP and computer vision. They have largely replaced RNNs and may replace CNNs in the near future. The self-attention mechanism lies at the core of the Transformer. It gives the model an inductive bias: the interactions between tokens are dynamically parameterized based on the input representations. Nevertheless, MLPs are known to be universal approximators, so in theory their static parameterization should be able to represent any function.

In this paper, we introduce a new family of attention-free, Transformer-like models called 'gMLP', built from MLPs with multiplicative gating. When tested on key vision and NLP tasks, these models achieve performance comparable to state-of-the-art transformers. Just like transformers, their performance improves with increasing model size, and a sufficiently large model can fit any data.

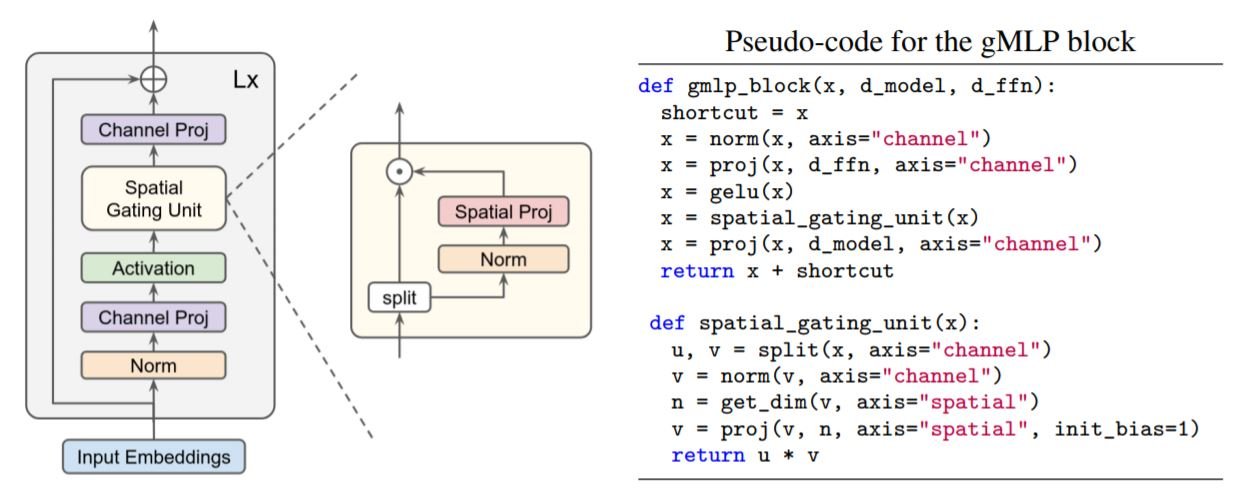

Proposed model: gMLP

The model consists of stacks of L identical blocks. Each block is given by:

Z = σ(XU)

Z' = s(Z)

Y = Z'V

Here, σ is an activation function such as GELU, and X ∈ R^(n×d) is the input representation, where n is the length of the input sequence and d is the hidden channel dimension. U and V are linear projections along the channel dimension. The function 's(.)' captures spatial interactions between tokens; when it is the identity function, the block reduces to a regular FFN (Feed Forward Network) in which each token is processed independently.

We apply the same input and output format as BERT, with a similar <cls> token for classification tasks and similar padding. However, since the positional information is captured inside 's(.)', positional embeddings are unnecessary in the case of gMLP.
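The three equations above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the tanh approximation of GELU, the toy sizes, the random initialization scale, and the names `gelu` and `gmlp_block` are all assumptions, and the sketch leaves out residual connections and normalization.

```python
import numpy as np

def gelu(x):
    # tanh approximation of GELU (an assumption; any smooth activation would do here)
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def gmlp_block(X, U, V, s=lambda Z: Z):
    """One block: Z = sigma(XU), Z' = s(Z), Y = Z'V."""
    Z = gelu(X @ U)        # channel projection, shape (n, d_ffn)
    Z_tilde = s(Z)         # spatial interaction; identity by default
    return Z_tilde @ V     # project back to shape (n, d)

# Toy sizes (hypothetical): n tokens, d channels, d_ffn expanded channels
rng = np.random.default_rng(0)
n, d, d_ffn = 8, 16, 64
X = rng.normal(size=(n, d))
U = rng.normal(size=(d, d_ffn)) * 0.02
V = rng.normal(size=(d_ffn, d)) * 0.02

Y = gmlp_block(X, U, V)    # with s = identity this is a plain FFN
```

With the default identity `s`, every row of `Y` depends only on the matching row of `X`, which is exactly the FFN case: no information moves between tokens until `s` mixes them.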

Spatial Gating Unit (SGU)

The function s(.) should be chosen so as to maximize the interaction between the tokens. The simplest choice is a linear projection.

F = WZ+b

where W is a matrix of size n×n, n is the sequence length, and b is a bias. The matrix Z is split into two parts (Z1, Z2) along the channel dimension and, as in GLUs, one part is transformed using F. The resulting projection is multiplied element-wise by the other part, so that it acts as a gate. It turns out that W must be initialized very close to 0 and b to 1. Then F is approximately 1, and s(.) initially acts as an identity operation on the ungated part.

Image Classification
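The gating step and its initialization can be illustrated as follows. This is a sketch under stated assumptions: the bias is taken to be one value per token (shape `(n, 1)`), `eps` is an arbitrary small range for the near-zero weights, and the function names are hypothetical.

```python
import numpy as np

def init_sgu(n, rng, eps=1e-3):
    # W close to zero, b equal to one, so the gate starts out close to 1
    W = rng.uniform(-eps, eps, size=(n, n))
    b = np.ones((n, 1))    # assumed: one bias per token position
    return W, b

def spatial_gating_unit(Z, W, b):
    # Split along the channel dimension into two halves
    Z1, Z2 = np.split(Z, 2, axis=-1)   # each (n, d_ffn / 2)
    F = W @ Z2 + b                     # W mixes information across the n tokens
    return Z1 * F                      # element-wise multiplicative gate

rng = np.random.default_rng(0)
n, d_ffn = 8, 64
Z = rng.normal(size=(n, d_ffn))
W, b = init_sgu(n, rng)
out = spatial_gating_unit(Z, W, b)     # shape (n, d_ffn / 2), close to Z1 at init
```

Note that the output has half as many channels as the input, so the projection that follows the SGU must accept `d_ffn / 2` input channels.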

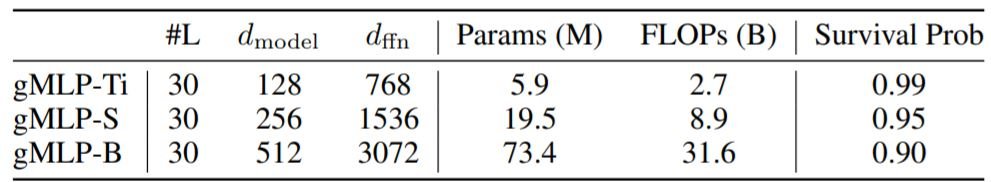

We trained gMLP on ImageNet without using any external data and compared it to recent vision transformers like ViT, DeiT, and several CNNs. We constructed three different architectures of different sizes as follows:

As in ViT, each image is split into 16x16 patches, and as in DeiT, we use regularization to prevent overfitting. We do not do any extensive tuning across our three models, except for tuning the survival probability of stochastic depth.
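To make the patch step concrete, here is a small sketch of how an image becomes a token sequence. The 224x224 RGB input size and the helper name `patchify` are assumptions for illustration; the text above only states the 16x16 patch size.

```python
import numpy as np

def patchify(image, patch=16):
    """Split an (H, W, C) image into non-overlapping patch tokens."""
    H, W, C = image.shape
    assert H % patch == 0 and W % patch == 0, "image size must be divisible by patch size"
    x = image.reshape(H // patch, patch, W // patch, patch, C)
    x = x.transpose(0, 2, 1, 3, 4)             # (H/p, W/p, p, p, C)
    return x.reshape(-1, patch * patch * C)    # one flattened row per patch

image = np.zeros((224, 224, 3))    # assumed input resolution
tokens = patchify(image)           # (14 * 14, 16 * 16 * 3) = (196, 768)
```

Each row of `tokens` plays the role of one position in the sequence, so n = 196 for this assumed resolution.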

The above plot suggests that attention-free models can be as effective as self-attention models. With proper regularization, the accuracy depends more on the model size, rather than the use of attention.

Masked Language Modeling

We trained gMLP models using masked language modeling (MLM). The input/output formats were kept similar to BERT. Unlike BERT, the <pad> tokens need not be masked because the model can quickly learn to ignore them. Also, in MLM, shifting the whole input sequence by an offset should not change the predictions (a property called shift-invariance). Under this constraint, the linear transformation in the SGU layer acts like a depthwise convolution whose receptive field covers the entire sequence. However, unlike a depthwise convolution, the layer shares the same parameters (W) across all channels. We found that gMLP automatically learns shift-invariance even without manually applying the constraint.
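One way to picture the shift-invariance constraint is that W becomes a Toeplitz matrix, whose entries depend only on the offset between two positions. The sketch below builds such a matrix from a 1-D kernel; the helper name and the random kernel are hypothetical and serve only as an illustration.

```python
import numpy as np

def toeplitz_from_kernel(kernel, n):
    """Build an n x n matrix with W[i, j] = kernel[(i - j) + (n - 1)]."""
    # kernel has 2n - 1 entries, one per possible offset i - j in [-(n-1), n-1]
    idx = np.arange(n)[:, None] - np.arange(n)[None, :] + (n - 1)
    return kernel[idx]

n = 6
rng = np.random.default_rng(0)
kernel = rng.normal(size=2 * n - 1)
W = toeplitz_from_kernel(kernel, n)

# Every diagonal is constant: moving one step along a diagonal changes nothing
assert np.allclose(W[:-1, :-1], W[1:, 1:])
```

Multiplying by such a W is a 1-D convolution over the whole sequence, and because the same W is applied to every channel, the kernel is shared across channels rather than learned per channel.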

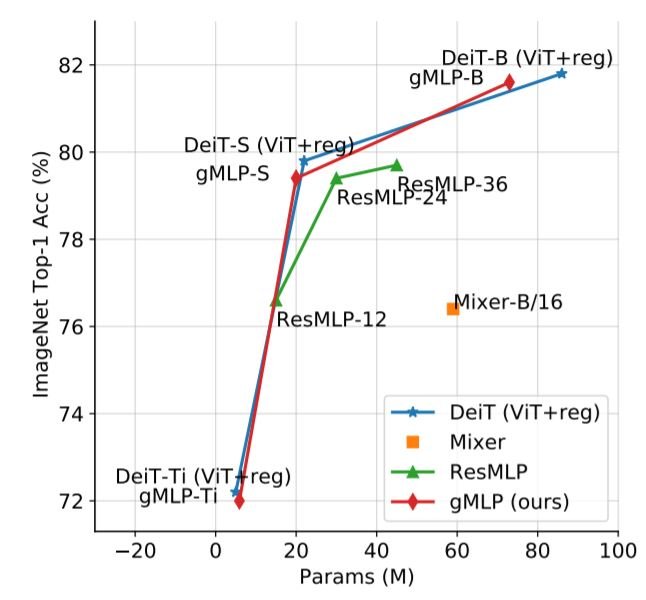

Importance of gating in gMLP

We conducted ablation studies comparing BERT variants against gMLP with several choices of gating function in the SGU layer.

We can see that the multiplicative split gMLP outperforms the other variations. Also, the performance of the strongest BERT model can be matched by scaling our model (discussed in the next section).

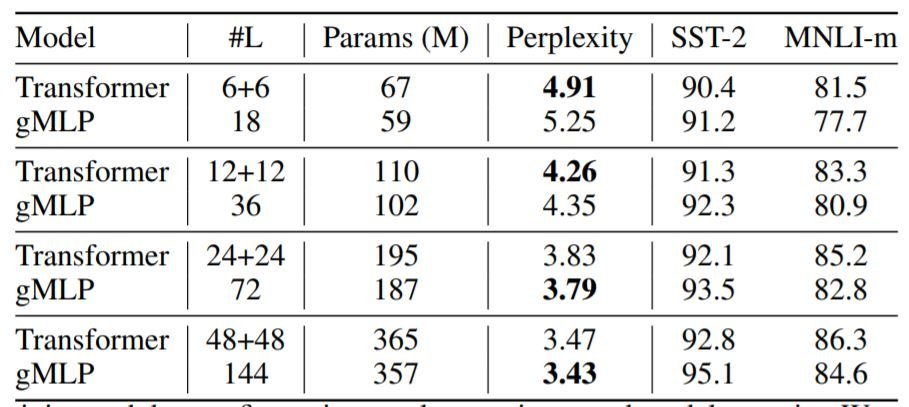

The behavior of gMLP with Model Size

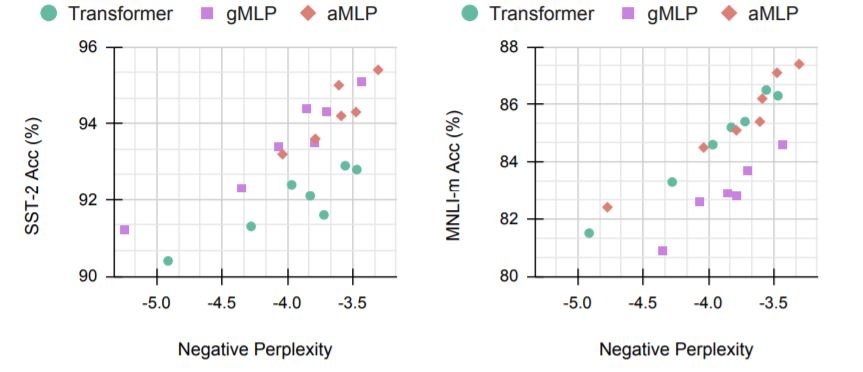

We conducted studies to see how the model size affected the performance. These models were then tested on two datasets: Multi-Genre Natural Language Inference (MNLI) and Stanford Sentiment Treebank (SST-2).

The table shows that sufficiently scaling gMLP gives on-par or better performance than the transformer models in each case. In general, we found that for both transformers and gMLP, the perplexity and the number of model parameters (in millions) follow a power law.
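A power law between perplexity and parameter count shows up as a straight line on a log-log plot, so its exponent can be estimated with a linear fit. The numbers below are synthetic, generated from a made-up power law purely to demonstrate the fitting step; they are not results from the paper.

```python
import numpy as np

# SYNTHETIC data, not from the paper: perplexity = a * params^(-b) with made-up a, b
params_m = np.array([10.0, 30.0, 100.0, 300.0])   # model sizes in millions (hypothetical)
true_a, true_b = 20.0, 0.25
perplexity = true_a * params_m ** (-true_b)

# A power law is linear in log space: log(ppl) = log(a) - b * log(params)
slope, intercept = np.polyfit(np.log(params_m), np.log(perplexity), 1)
fit_b = -slope
fit_a = np.exp(intercept)
```

On real measurements the points would scatter around the line, and comparing the fitted slopes would show whether two model families scale at a similar rate.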

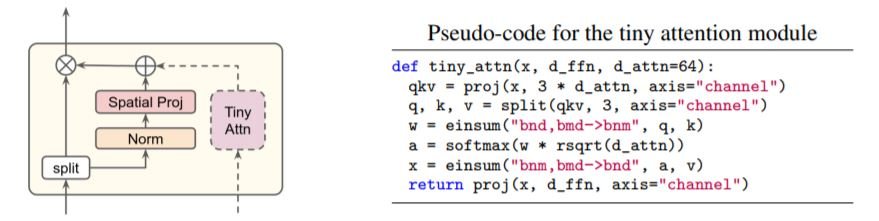

The usefulness of Attention in Fine-Tuning

The evidence from this paper so far suggests that self-attention is not necessary to perform well in MLM. Nevertheless, the table above shows that although the gMLP model performs well on single-sentence tasks such as SST-2, it performs worse on multi-sentence tasks such as MNLI. Therefore, we suspect that self-attention is important for cross-sentence alignment.

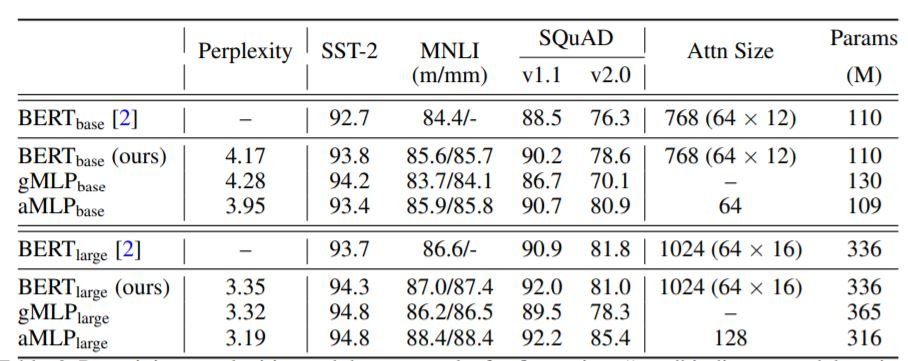

To confirm this suspicion, we created a hybrid model where a tiny self-attention block is attached to the gating function of our model. We call it tiny because unlike regular models with 12 attention heads and a total size of 768, it is a single-headed layer with a size of 64. We refer to this hybrid model as an aMLP (attention MLP).
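A rough sketch of such a hybrid gate is shown below. It assumes that the output of the tiny attention is added to the spatial projection before the multiplicative gate, and that the attention reads the block input X; the weight shapes, names, and initialization are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)    # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def tiny_attention(X, Wqkv, Wout):
    # Single head: project to queries, keys, values of size d_attn each
    q, k, v = np.split(X @ Wqkv, 3, axis=-1)
    d_attn = q.shape[-1]
    A = softmax(q @ k.T / np.sqrt(d_attn))     # (n, n) attention weights
    return (A @ v) @ Wout                      # project to the gate's channel size

def sgu_with_tiny_attention(X, Z, W, b, Wqkv, Wout):
    Z1, Z2 = np.split(Z, 2, axis=-1)
    # Assumption: attention output is added to the spatial projection before gating
    gate = W @ Z2 + b + tiny_attention(X, Wqkv, Wout)
    return Z1 * gate

rng = np.random.default_rng(0)
n, d, d_ffn, d_attn = 8, 16, 64, 64
X = rng.normal(size=(n, d))
Z = rng.normal(size=(n, d_ffn))
W, b = np.zeros((n, n)), np.ones((n, 1))
Wqkv = rng.normal(size=(d, 3 * d_attn)) * 0.02
Wout = rng.normal(size=(d_attn, d_ffn // 2)) * 0.02
out = sgu_with_tiny_attention(X, Z, W, b, Wqkv, Wout)
```

Because the attention has a single head of size 64, it adds few parameters compared with the rest of the block.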

As we can see, the tiny attention actually assists the model, allowing aMLP to outperform the transformer and gMLP.

The above table shows a final comparison of BERT, gMLP, and aMLP. We can see that for multi-sentence tasks like SQuAD and MNLI, while gMLP still performs quite well, aMLP performs better. Just using small attention heads with sizes 64-128 works surprisingly well.

Summary

We have seen that a sufficiently large gMLP can outperform transformers with self-attention. Moreover, adding a small amount of self-attention reduces the need to scale the model. Despite the success of transformers, it is still unclear what makes them so effective. This paper suggests that self-attention is not a critical ingredient, and that a tiny amount of attention can suffice. A better understanding of the essential components of the transformer will help build better models in the future.
