Are Transformers Meant For Computer Vision?

3 main points

✔️ An extensive study on ViT models and variants with several image and architecture perturbations.

✔️ Comparison with SOTA ResNet models.

✔️ Insights into better training techniques and applications of vision transformers.

Understanding Robustness of Transformers for Image Classification

Written by Yicheng Liu, Jinghuai Zhang, Liangji Fang, Qinhong Jiang, Bolei Zhou

(Submitted on 26 Mar 2021)

Comments: Published on arXiv.

Subjects: Computer Vision and Pattern Recognition (cs.CV); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)


Introduction

For a long time, Convolutional Neural Networks (CNNs) have been the de facto models for computer vision. Recently, the Vision Transformer (ViT) showed that attention-based models can perform as well as or even better than state-of-the-art CNNs. ViT, a purely transformer-based network, surpassed the performance of ResNets, which are among the most widely used deep neural networks in computer vision. After the decade-long reign of CNNs, this shift of vision research toward transformers is a major leap.

In this paper, we study how variants of ViT perform under several image perturbations. We also compare ViT with ResNets trained under similar conditions. In doing so, we aim to provide a deeper understanding of how vision transformers work and to help identify their applications and potential avenues for improvement.

ViT and Its Variants

The ViT architecture closely follows the original transformer model. It stacks several blocks, each composed of a multi-headed self-attention layer followed by feed-forward layers. The main difference lies in how the image is turned into an input sequence: the image is divided into non-overlapping patches, which are flattened and transformed by a learned linear layer. For example, a 384×384 RGB image divided into 16×16 patches yields 24×24 = 576 patches, each flattened into a vector of 16×16×3 = 768 values. In addition, a special learnable CLS token is prepended to the input sequence, and its final representation is used for classification.
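The patch arithmetic can be checked with a short pure-Python sketch. It assumes a channels-last image stored as nested lists (`image[row][col][channel]`) and only rearranges pixels; the learned linear projection, position embeddings, and CLS embedding of the real model are omitted, and the helper names `num_patches` and `patchify` are hypothetical.

```python
def num_patches(image_size: int, patch_size: int) -> int:
    """Number of non-overlapping patches for a square image (assumes exact divisibility)."""
    assert image_size % patch_size == 0, "image size must be a multiple of the patch size"
    per_side = image_size // patch_size
    return per_side * per_side


def patchify(image, patch_size):
    """Split an H x W x C nested-list image into flattened, non-overlapping patches.

    Patches are returned in row-major order; each patch is flattened row by row,
    keeping the channel values of each pixel together.
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    flat.extend(image[r][c])
            patches.append(flat)
    return patches


# A 384x384 RGB image with 16x16 patches: 24 * 24 = 576 patches of 16 * 16 * 3 = 768 values.
print(num_patches(384, 16))  # 576
image = [[[0.0, 0.0, 0.0] for _ in range(384)] for _ in range(384)]
patches = patchify(image, 16)
print(len(patches), len(patches[0]))  # 576 768
```

In the actual model, each 768-value vector would then be mapped by the learned linear layer to the embedding dimension, and prepending the CLS token gives a sequence of 577 tokens.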

Robustness to Input Perturbations

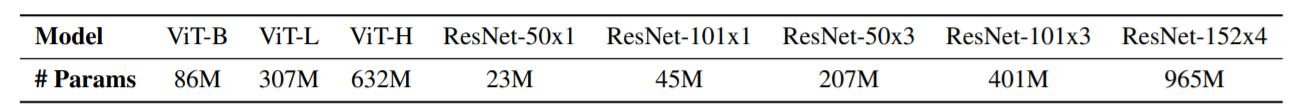

All the models were pretrained on ILSVRC-2012, ImageNet-21k, or JFT-300M and then fine-tuned on ILSVRC-2012. We then tested their robustness on three specialized benchmarks: ImageNet-C, ImageNet-R, and ImageNet-A.

The ImageNet-C benchmark evaluates robustness to common image corruptions. It contains 15 corruption types from four families (noise, blur, weather, and digital), each at 5 severity levels, for a total of 15 × 5 = 75 settings. When pretrained on smaller datasets, ViTs are less robust than ResNets of similar size. However, as the pretraining dataset grows (ImageNet-21k and JFT-300M), they become more robust. This trend holds across all three benchmarks (C, R, and A). Another interesting finding is that, with smaller pretraining datasets, increasing the model size does not necessarily improve ViT's performance.
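The 75 evaluation settings can be enumerated as below. The corruption names follow the public ImageNet-C release; the summary statistic here, an unweighted mean accuracy, is an assumption for illustration. The original ImageNet-C benchmark instead reports mean Corruption Error (mCE), which normalizes each corruption's error by AlexNet's, and the article does not say which summary the authors used. The `evaluate` callback is hypothetical.

```python
from itertools import product
from statistics import mean

# The 15 ImageNet-C corruption types, grouped into the four families.
CORRUPTIONS = {
    "noise": ["gaussian_noise", "shot_noise", "impulse_noise"],
    "blur": ["defocus_blur", "glass_blur", "motion_blur", "zoom_blur"],
    "weather": ["snow", "frost", "fog", "brightness"],
    "digital": ["contrast", "elastic_transform", "pixelate", "jpeg_compression"],
}
SEVERITIES = range(1, 6)


def all_settings():
    """Enumerate every (corruption, severity) pair: 15 types x 5 levels = 75 settings."""
    names = [name for family in CORRUPTIONS.values() for name in family]
    return list(product(names, SEVERITIES))


def mean_corruption_accuracy(evaluate):
    """Unweighted mean accuracy over all 75 settings.

    `evaluate(corruption, severity)` is a hypothetical callback returning the
    model's top-1 accuracy on that corrupted split.
    """
    return mean(evaluate(name, level) for name, level in all_settings())


print(len(all_settings()))  # 75
```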

The ImageNet-R benchmark consists of renditions (art, cartoons, paintings, origami, ...) of ImageNet classes. The models perform worse than on ImageNet-C, but the trends with respect to model size and pretraining dataset size are quite similar. For ViT, the benefit of larger models and larger pretraining datasets is quite clear.

The ImageNet-A benchmark consists of real-world, unmodified, naturally occurring images that were selected because they cause ResNet classifiers to fail. Although ViT's architecture differs from that of ResNets, its accuracy also drops sharply on these naturally adversarial images.

Adversarial Perturbations

Most DNNs are highly vulnerable to small, specifically crafted perturbations of the input image. We use two standard approaches to generate such adversarial perturbations: the Fast Gradient Sign Method (FGSM), a single-step attack, and Projected Gradient Descent (PGD), run for 8 iterations with a step size of 1/8 gray level. We found that adversarial perturbations did not transfer between ResNet and ViT, i.e., perturbations that were effective against ResNet did not work against ViT, and vice versa. We also observed that, like ResNets, ViT models with smaller patches (16×16) are robust to spatial attacks (translations and rotations), whereas ViT models with larger patches are more susceptible to them.
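The two attacks can be sketched on a toy model whose input gradient is known in closed form. This is a minimal sketch, assuming a hypothetical logistic-regression classifier in place of ViT or ResNet and pixels scaled to [0, 1]; the perturbation budget `eps` of one gray level is an assumption (the article gives only PGD's 8 iterations and 1/8 gray-level step).

```python
import math


def sign(v):
    """Return -1, 0, or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def clip(v, lo, hi):
    return max(lo, min(hi, v))


def input_grad(x, w, b, y):
    """Gradient w.r.t. x of the logistic loss -log(sigmoid(y * (w.x + b))), y in {-1, +1}."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    coef = -y / (1.0 + math.exp(y * z))  # equals -y * sigmoid(-y * z)
    return [coef * wi for wi in w]


def fgsm(x, w, b, y, eps):
    """Fast Gradient Sign Method: one step of size eps along the sign of the gradient."""
    g = input_grad(x, w, b, y)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]


def pgd(x, w, b, y, eps, step, iters):
    """Projected Gradient Descent: repeated signed steps, projected onto the L-inf ball."""
    x_adv = list(x)
    for _ in range(iters):
        g = input_grad(x_adv, w, b, y)
        x_adv = [xa + step * sign(gi) for xa, gi in zip(x_adv, g)]
        # Project back into the eps-ball around the clean input, then into [0, 1].
        x_adv = [clip(clip(xa, xi - eps, xi + eps), 0.0, 1.0)
                 for xa, xi in zip(x_adv, x)]
    return x_adv


GRAY_LEVEL = 1.0 / 255.0  # one gray level when pixels are scaled to [0, 1]
x = [0.5, 0.5, 0.5]
w, b, y = [1.0, -2.0, 0.5], 0.0, 1  # hypothetical toy model and label
x_fgsm = fgsm(x, w, b, y, eps=GRAY_LEVEL)
x_pgd = pgd(x, w, b, y, eps=GRAY_LEVEL, step=GRAY_LEVEL / 8, iters=8)
```

For a linear model both attacks end at the same corner of the eps-ball; on a deep network, the iterative PGD steps follow a changing gradient and typically find stronger perturbations than single-step FGSM.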

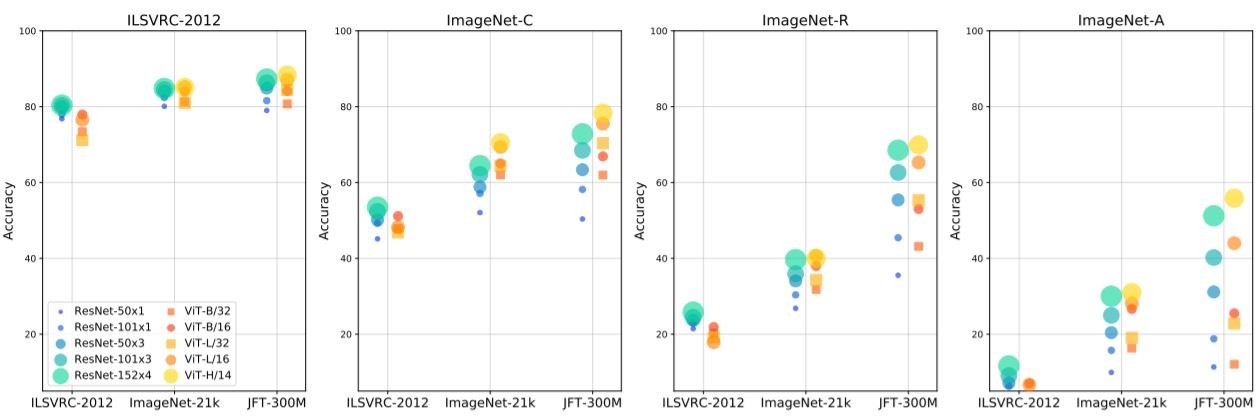

Texture Bias

CNNs are known to rely on texture for image classification, whereas humans rely mainly on shape. Reducing this texture bias has been found to make some models more robust to unseen image distortions. We therefore tested the texture bias of ViT on a special set of images with different combinations of texture and shape. ViT models with larger patches performed better in all cases, which can be attributed to larger patches preserving more of the object's shape.
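The article does not state how texture bias was scored. One widely used measure for images whose shape and texture cues point to different classes is the shape bias of Geirhos et al.: among trials decided by either cue, the fraction decided by shape. The sketch below assumes that metric, and the trial data is hypothetical.

```python
def shape_bias(trials):
    """Fraction of shape decisions among cue-conflict trials decided by shape or texture.

    Each trial is (predicted_class, shape_class, texture_class), with shape_class
    assumed to differ from texture_class. Trials whose prediction matches neither
    cue are ignored.
    """
    shape_hits = sum(1 for pred, shape, texture in trials if pred == shape)
    texture_hits = sum(1 for pred, shape, texture in trials if pred == texture)
    decided = shape_hits + texture_hits
    return shape_hits / decided if decided else float("nan")


trials = [
    ("cat", "cat", "elephant"),       # decided by shape
    ("elephant", "cat", "elephant"),  # decided by texture
    ("car", "car", "clock"),          # decided by shape
    ("dog", "car", "clock"),          # matches neither cue, ignored
]
print(shape_bias(trials))  # 2 of 3 decided trials, about 0.667
```

A value near 1 means the model classifies mostly by shape; a value near 0 means it follows texture.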

Robustness to Model Perturbations

In addition to perturbing the inputs, we also perturbed the models themselves to understand how information flows through ViT. The results are discussed in the following three subsections.

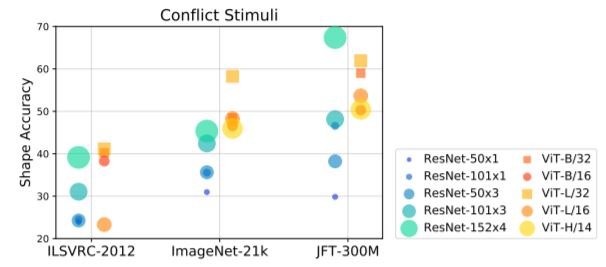

Layer Correlations

The two left-side diagrams show the correlation between the representations produced by different blocks of ViT-L/16 for two datasets. The representations of the later layers are highly correlated with those of the other layers and change only slightly. In ResNets, the downsampling layers split the model into groups that operate at different spatial resolutions. Although ViT lacks this kind of inductive bias, its layers are organized into stages much like those of ResNets.

The two right-side diagrams show the correlation between the representations of the CLS token after different blocks. The CLS representation changes slowly at the beginning of the network and rapidly later on. This analysis shows that the later layers barely update the representations as a whole and instead focus on consolidating information into the CLS token.
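A block-to-block correlation matrix like the ones in the diagrams can be computed as sketched below. The article does not specify the correlation measure, so this assumes Pearson correlation over flattened activations; the three "block outputs" are hypothetical toy vectors. The CLS-token plots would use the same function on the CLS vector taken after each block.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equal-length, non-constant vectors."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / sqrt(va * vb)


def layer_correlation_matrix(layer_outputs):
    """Pairwise correlations between flattened per-block representations.

    layer_outputs[k] is the flattened output of block k for the same inputs.
    """
    return [[pearson(a, b) for b in layer_outputs] for a in layer_outputs]


# Hypothetical flattened outputs of three blocks.
outputs = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.5], [4.0, 3.0, 2.0, 1.0]]
matrix = layer_correlation_matrix(outputs)
for row in matrix:
    print([round(v, 3) for v in row])
```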

Lesion Study

Since the representations produced by different blocks are highly correlated, a natural question is whether some of the blocks are redundant.

We therefore removed the multi-headed self-attention layer, the feed-forward layer, or both from various transformer blocks of ViT. As the bottom row shows, accuracy decreases correspondingly as more blocks are removed. Models trained on larger datasets (JFT-300M) were found to be less robust to the removal of layers. Also, removing the MLP feed-forward layer affects the model less than removing the self-attention layer, highlighting the relative importance of self-attention.
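Because each sub-layer in a transformer block sits on a residual branch (x + f(x)), removing it amounts to skipping that branch and leaving the residual stream unchanged. The sketch below illustrates this mechanism with hypothetical toy functions standing in for attention and the MLP; it omits the layer normalization that ViT applies before each sub-layer.

```python
def run_blocks(x, blocks, drop_attn=frozenset(), drop_mlp=frozenset()):
    """Run residual blocks, optionally lesioning sub-layers by block index.

    blocks[i] is a pair (attn_fn, mlp_fn); a dropped sub-layer's residual
    branch is skipped, so x passes through that step unchanged.
    """
    for i, (attn_fn, mlp_fn) in enumerate(blocks):
        if i not in drop_attn:
            x = [xi + di for xi, di in zip(x, attn_fn(x))]
        if i not in drop_mlp:
            x = [xi + di for xi, di in zip(x, mlp_fn(x))]
    return x


def toy_attn(v):
    """Hypothetical token mixing: every position receives a share of the total."""
    return [0.1 * sum(v)] * len(v)


def toy_mlp(v):
    """Hypothetical position-wise MLP: a scaled ReLU."""
    return [0.5 * max(0.0, e) for e in v]


blocks = [(toy_attn, toy_mlp)] * 3
x0 = [1.0, -1.0, 2.0]
full = run_blocks(x0, blocks)
lesioned = run_blocks(x0, blocks, drop_attn={0})  # remove attention in the first block
```

In a real lesion experiment, validation accuracy would be re-measured for each choice of `drop_attn` and `drop_mlp`.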

Restricted Attention

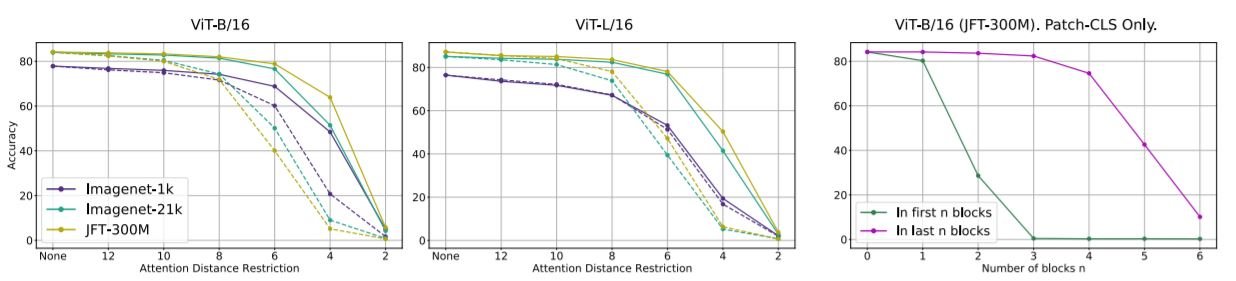

Next, we evaluate how much ViT relies on long-range attention. To do this, we apply masks at inference time that allow each patch to attend only to patches within a given spatial distance. ViT was trained with unrestricted attention, and limiting the attention distance in this way was found to degrade its performance.
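A distance-based attention mask can be sketched as follows. The article does not say which distance was used, so Chebyshev distance between patch coordinates is an assumption, as is the tiny 3×3 patch grid (a 384×384 image with 16×16 patches would give a 24×24 grid). CLS-token handling is omitted, and `distance_mask` and `masked_softmax` are hypothetical helpers.

```python
import math


def distance_mask(grid_size, max_dist):
    """Boolean mask over a grid_size x grid_size patch grid (row-major order).

    mask[i][j] is True if key patch j lies within max_dist of query patch i,
    measured as Chebyshev distance between patch coordinates.
    """
    coords = [(r, c) for r in range(grid_size) for c in range(grid_size)]
    return [[max(abs(r1 - r2), abs(c1 - c2)) <= max_dist for (r2, c2) in coords]
            for (r1, c1) in coords]


def masked_softmax(scores, mask_row):
    """Softmax over allowed positions only; masked-out positions get weight 0.

    Each row always allows the query patch itself, so it is never empty.
    """
    top = max(s for s, m in zip(scores, mask_row) if m)
    exps = [math.exp(s - top) if m else 0.0 for s, m in zip(scores, mask_row)]
    total = sum(exps)
    return [e / total for e in exps]


mask = distance_mask(3, 1)
print(sum(sum(row) for row in mask))  # 49 allowed (query, key) pairs out of 81
weights = masked_softmax([0.0] * 9, mask[0])  # corner patch: itself plus 3 neighbors
```

Frameworks usually implement the same idea by adding negative infinity to the masked attention scores before the softmax.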

The rightmost diagram shows the case in which, for a varying number of blocks, attention is computed only with the CLS token and with no other token. Removing inter-patch attention in the final blocks has relatively little effect on accuracy, whereas removing it in the initial blocks is detrimental. This is consistent with our earlier observation that the CLS token is updated mainly in the final part of the network rather than in the initial part.

Based on these observations, we can also conclude that ViT is highly redundant and can be heavily pruned at inference time.

Summary

ViTs are a favorable choice when data is abundant, and in several cases their performance improves as the model size is scaled up. ViT is also robust to the removal of any single layer and appears to be highly redundant. The experiments presented in this paper make it clear that vision transformers are, in several cases, on par with or better than CNNs. Although it seems fair to say that transformers are meant for vision, future work could address the several drawbacks of ViT highlighted in this paper.
